In a swift, eye-popping special address at SIGGRAPH, NVIDIA execs described the forces driving the next era in graphics, and the company’s expanding range of tools to accelerate them.

“The combination of AI and computer graphics will power the metaverse, the next evolution of the internet,” said Jensen Huang, founder and CEO of NVIDIA, kicking off the 45-minute talk.

The metaverse will be home to connected virtual worlds and digital twins, a place for real work as well as play. And, Huang said, it will be vibrant with what will become one of the most popular forms of robots: digital human avatars.

With 45 demos and slides, five NVIDIA speakers announced:

- A new platform for creating avatars, NVIDIA Omniverse Avatar Cloud Engine (ACE).

- Plans to build out Universal Scene Description (USD), the language of the metaverse.

- Major extensions to NVIDIA Omniverse, the computing platform for creating virtual worlds and digital twins.

- Tools to supercharge graphics workflows with machine learning.

“The announcements we made today further advance the metaverse, a new computing platform with new programming models, new architectures and new standards,” Huang said.

Metaverse applications are already here.

Huang pointed to consumers trying out virtual 3D products with augmented reality, telcos creating digital twins of their radio networks to optimize and deploy radio towers, and companies creating digital twins of warehouses and factories to optimize their layout and logistics.

Enter the Avatars

The metaverse will come alive with virtual assistants, avatars we interact with as naturally as talking to another person. They’ll work in digital factories, play in online games and provide customer service for e-tailers.

“There will be billions of avatars,” said Huang, calling them “one of the most widely used kinds of robots” that will be designed, trained and operated in Omniverse.

Digital humans and avatars require natural language processing, computer vision, complex facial and body animations and more. To move and speak in realistic ways, this suite of complex technologies must be synced to the millisecond.

It’s hard work that NVIDIA aims to simplify and accelerate with Omniverse Avatar Cloud Engine. ACE is a collection of AI models and services that build on NVIDIA’s work spanning everything from conversational AI to animation tools like Audio2Face and Audio2Emotion.

“With Omniverse ACE, developers can build, configure and deploy their avatar application across any engine in any public or private cloud,” said Simon Yuen, a senior director of graphics and AI at NVIDIA. “We want to democratize building interactive avatars for every platform.”

ACE will be available early next year, running on embedded systems and all major cloud services.

Yuen also demonstrated the latest version of Omniverse Audio2Face, an AI model that can create facial animation directly from voices.

“We just added more features to analyze and automatically transfer your emotions to your avatar,” he said.

Future versions of Audio2Face will create avatars from a single photo, applying textures automatically and generating animation-ready 3D meshes. They’ll sport high-fidelity simulations of muscle movements an AI can learn from watching a video — even lifelike hair that responds as expected to virtual grooming.

USD, a Foundation for the 3D Internet

Many superpowers of the metaverse will be grounded in USD, a foundation for the 3D internet.

The metaverse “needs a standard way of describing all things within 3D worlds,” said Rev Lebaredian, vice president of Omniverse and simulation technology at NVIDIA.

“We believe Universal Scene Description, invented and open sourced by Pixar, is the standard scene description for the next era of the internet,” he added, comparing USD to HTML in the 2D web.
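To make the HTML comparison concrete, here is a minimal sketch of what a USD scene can look like in its human-readable text form (`.usda`). It is an illustration only, not something shown in the address: the helper `make_usda` and the prim names `World` and `Ball` are hypothetical, and the snippet builds the text with plain Python rather than Pixar's USD libraries.

```python
# Hypothetical helper: builds a tiny USD text-format (.usda) layer as a string.
# The prim names "World" and "Ball" are illustrative, not from the address.

def make_usda(root: str = "World", child: str = "Ball", radius: float = 2.0) -> str:
    """Return a minimal .usda layer: one Xform prim containing one Sphere prim."""
    lines = [
        "#usda 1.0",                          # required header of a text USD layer
        "(",
        f'    defaultPrim = "{root}"',        # layer metadata naming the root prim
        ")",
        "",
        f'def Xform "{root}"',                # a transformable grouping prim
        "{",
        f'    def Sphere "{child}"',          # a built-in geometric primitive
        "    {",
        f"        double radius = {radius}",  # a typed attribute on the sphere
        "    }",
        "}",
        "",
    ]
    return "\n".join(lines)


scene_text = make_usda()

if __name__ == "__main__":
    print(scene_text)
```

Much as an HTML file nests elements with attributes, the text above nests prims (`Xform`, `Sphere`) with typed attributes. Saved with a `.usda` extension, it should open in USD-aware tools.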

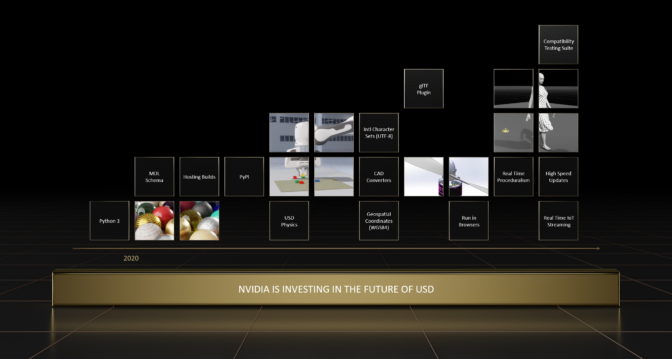

Lebaredian described NVIDIA’s vision for USD as a key to opening even more opportunities than those in the physical world.

“Our next milestones aim to make USD performant for real-time, large-scale virtual worlds and industrial digital twins,” he said, noting NVIDIA’s plans to help build out support in USD for international character sets, geospatial coordinates and real-time streaming of IoT data.

To further accelerate USD adoption, NVIDIA will release a compatibility testing and certification suite for USD. It will let developers confirm that their custom USD components produce the expected results.

In addition, NVIDIA announced a set of simulation-ready USD assets, designed for use in industrial digital twins and AI training workflows. They join a wealth of USD resources available online for free, including USD-ready scenes, on-demand tutorials, documentation and instructor-led courses.

“We want everyone to help build and advance USD,” said Lebaredian.

Omniverse Expands Its Palette

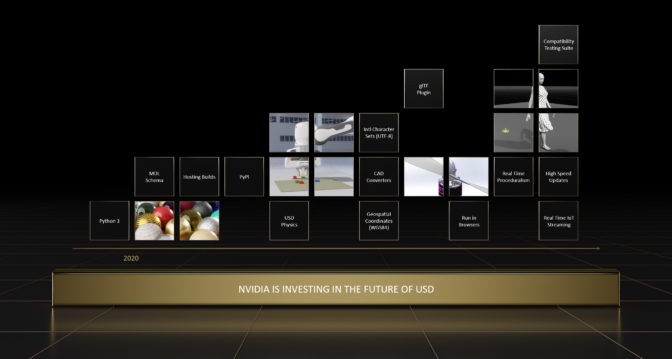

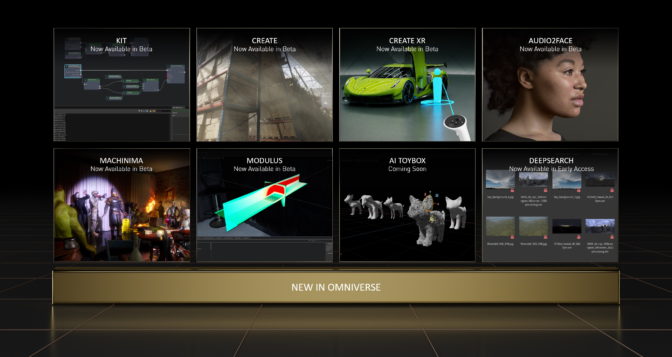

One of the biggest announcements of the special address was a major new release of NVIDIA Omniverse, a platform that’s been downloaded nearly 200,000 times.

Huang called Omniverse “a USD platform, a toolkit for building metaverse applications, and a compute engine to run virtual worlds.”

The latest version packs several upgraded core technologies and more connections to popular tools.

The links, called Omniverse Connectors, are now in development for Unity, Blender, Autodesk Alias, Siemens JT, SimScale, the Open Geospatial Consortium and more. Connectors are now available in beta for PTC Creo, Visual Components and SideFX Houdini. These new developments join Siemens Xcelerator, now part of the Omniverse network, welcoming more industrial customers into the era of digital twins.

Like the internet itself, Omniverse is “a network of networks,” connecting users across industries and disciplines, said Steve Parker, NVIDIA’s vice president of professional graphics.

Nearly a dozen leading companies will showcase Omniverse capabilities at SIGGRAPH, including hardware, software and cloud-service vendors ranging from AWS and Adobe to Dell, Epic and Microsoft. A half dozen companies will conduct NVIDIA-powered sessions on topics such as AI and virtual worlds.

Speeding Physics, Animating Animals

Parker detailed several technology upgrades in Omniverse. They span enhancements for simulating physically accurate materials with the Material Definition Language (MDL), real-time physics with PhysX and the hybrid rendering and AI system, RTX.

“These core technology pillars are powered by NVIDIA high performance computing from the edge to the cloud,” Parker said.

For example, PhysX now supports soft-body and particle-cloth simulation, bringing more physical accuracy to virtual worlds in real time. And NVIDIA is fully open sourcing MDL so it can readily support graphics API standards like OpenGL or Vulkan, making the materials standard more broadly available to developers.

Omniverse also will include neural graphics capabilities developed by NVIDIA Research that combine RTX graphics and AI. For example:

- Animal Modelers let artists iterate on an animal’s form with point clouds, then automatically generate a 3D mesh.

- GauGAN360, the next evolution of NVIDIA GauGAN, generates 8K, 360-degree panoramas that can easily be loaded into an Omniverse scene.

- Instant NeRF creates 3D objects and scenes from 2D images.

An Omniverse Extension for NVIDIA Modulus, a machine learning framework, will let developers use AI to speed simulations of real-world physics up to 100,000x, so the metaverse looks and feels like the physical world.

In addition, Omniverse Machinima — subject of a lively contest at SIGGRAPH — now sports content from Post Scriptum, Beyond the Wire and Shadow Warrior 3 as well as new AI animation tools like Audio2Gesture.

A demo from Industrial Light & Magic showed another new feature. Omniverse DeepSearch uses AI to help teams intuitively search through massive databases of untagged assets, bringing up accurate results for terms even when they’re not specifically listed in metadata.

Graphics Get Smart

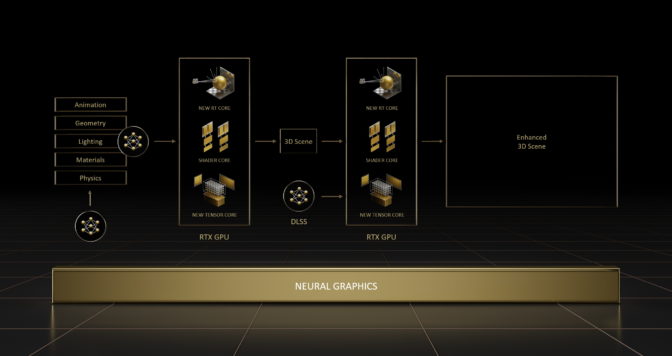

One of the essential pillars of the emerging metaverse is neural graphics. It’s a hybrid discipline that harnesses neural network models to accelerate and enhance computer graphics.

“Neural graphics intertwines AI and graphics, paving the way for a future graphics pipeline that is amenable to learning from data,” said Sanja Fidler, vice president of AI at NVIDIA. “Neural graphics will redefine how virtual worlds are created, simulated and experienced by users,” she added.

AI will help artists spawn the massive amount of 3D content needed to create the metaverse. For example, they can use neural graphics to capture objects and behaviors in the physical world quickly.

Fidler described NVIDIA software that does just that: Instant NeRF, a tool that creates a 3D object or scene from 2D images. It’s the subject of one of NVIDIA’s two best paper awards at SIGGRAPH.

In the other award-winning paper, neural graphics powers a model that can predict and reduce reaction latencies in esports and AR/VR applications. The two best papers are among 16 total that NVIDIA researchers are presenting this week at SIGGRAPH.

Designers and researchers can apply neural graphics and other techniques to create their own award-winning work using new software development kits NVIDIA unveiled at the event.

Fidler described one of them, Kaolin Wisp, a suite of tools to create neural fields — AI models that represent a 3D scene or object — with just a few lines of code.

Separately, NVIDIA announced NeuralVDB, the next evolution of OpenVDB, the open-source standard that industries from visual effects to scientific computing use to simulate and render water, fire, smoke and clouds.

NeuralVDB uses neural models and GPU optimization to dramatically reduce memory requirements so users can interact with extremely large and complex datasets in real time and share them more efficiently.

“AI, the most powerful technology force of our time, will revolutionize every field of computer science, including computer graphics, and NVIDIA RTX is the engine of neural graphics,” Huang said.

Watch the full special address at NVIDIA’s SIGGRAPH event site. That’s where you’ll also find details of labs, presentations and the debut of a behind-the-scenes documentary on how we created our latest GTC keynote.