Editor’s note: This is part of a series profiling people advancing science with high-performance computing.

Ryan Coffee makes movies of molecules. Their impacts are huge.

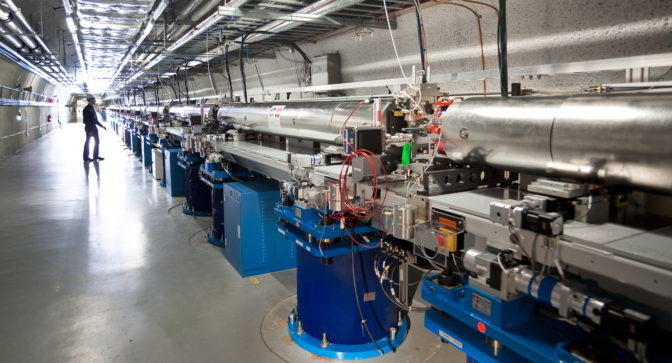

The senior scientist at the SLAC National Accelerator Laboratory (above) says these visualizations could unlock the secrets of photosynthesis. They’ve already shown how sunlight can cause skin cancer.

Long term, they may help chemists engineer life-saving drugs and batteries that let electric cars go farther on a charge.

To make films that inspire that kind of work, Coffee’s team needs high-performance computers, AI and an excellent projector.

A Brighter Light

The projector is called the Linac Coherent Light Source (LCLS). It uses a linear accelerator a kilometer long to pulse X-rays up to 120 times per second.

That’s good enough for a Hollywood flick, but not fast enough for Coffee’s movies.

“We need to see how electron clouds move like soap bubbles around molecules, how you can squeeze them in certain ways and energy comes out,” said Coffee, a specialist in the physics at the intersection of atoms, molecules and optics.

So, an upgrade next year will let the giant instrument take 100,000 frames per second. In two years, another enhancement, called LCLS II, will push that to a million frames a second.

Sorting frames that flash by that fast, and in random order, is a job for the combination of high-performance computing (HPC) and AI.

AIs in the Audience

Coffee’s goal is to sit an AI model in front of the LCLS II. It will watch the ultrafast movies to learn an atomic dance no human eyes could follow.

The work will require inference on the fastest GPUs available running next to the instrument in Menlo Park, Calif. Meanwhile, data streaming off LCLS II will be used to constantly retrain the model on a bank of NVIDIA A100 Tensor Core GPUs at the Argonne National Laboratory outside Chicago.

It’s a textbook case for HPC at the edge, and one that’s increasingly common in an era of giant scientific instruments that peer up at stars and down into atoms.

So far, Coffee’s team has been able to retrain an autoencoder model every 10-20 minutes while it makes inferences 100,000 times a second.
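To put those two rates side by side, here is a back-of-the-envelope calculation of how many inferences the model makes between retraining cycles. It is illustrative only: the rates are the ones quoted above, and the function name is a hypothetical chosen for this sketch.

```python
# Figures quoted in the article; the names below are illustrative.
INFERENCES_PER_SECOND = 100_000
RETRAIN_INTERVALS_MINUTES = (10, 20)


def inferences_between_retrains(rate_per_second: int, interval_minutes: int) -> int:
    """Number of inferences made during one retraining interval."""
    return rate_per_second * interval_minutes * 60


for minutes in RETRAIN_INTERVALS_MINUTES:
    count = inferences_between_retrains(INFERENCES_PER_SECOND, minutes)
    print(f"{minutes} min between retrains -> {count:,} inferences")
```

At the quoted rates, the model handles 60 million to 120 million inferences on each set of weights before a refreshed model arrives.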

“We’re already in the realm of attosecond pulses where I can watch the electron bubbles slosh back and forth,” said Coffee, a core member of SLAC’s overall AI initiative.

A Broader AI Collaboration

The next step is even bigger.

Data from Coffee’s work on molecular movies will be securely shared with data from Argonne’s Advanced Photon Source, a kind of ultra-high-resolution still camera.

“We can use secure, federated machine learning to pull these two datasets together, creating a powerful, shared transformer model,” said Coffee, who’s collaborating with multiple organizations to make it happen.

The transformer will let scientists generate synthetic data for many data-starved applications such as research on fusion reactors.

It’s an effort specific to science that parallels work in federated learning in healthcare. Both want to build powerful AI models for their fields while preserving data privacy and security.
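The article does not say which federated algorithm the collaboration uses. As a rough illustration of the general idea, the sketch below uses federated averaging, a common approach in which each site trains on its own data and shares only model weights. Everything here is an assumption made for illustration: the one-parameter model, the two toy datasets and all function names. It includes no encryption or other security layer, and it is not a transformer.

```python
from typing import List, Tuple

# (x, y) pairs; in this sketch they never leave the site that owns them.
Dataset = List[Tuple[float, float]]


def local_step(w: float, data: Dataset, lr: float = 0.05) -> float:
    """One gradient-descent step on mean squared error for the model y = w * x."""
    grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
    return w - lr * grad


def federated_average(updates: List[Tuple[float, int]]) -> float:
    """Combine site weights, weighting each by its number of samples."""
    total = sum(n for _, n in updates)
    return sum(w * n for w, n in updates) / total


def train(sites: List[Dataset], rounds: int = 200) -> float:
    """Run several rounds: local training at each site, then server-side averaging."""
    w = 0.0
    for _ in range(rounds):
        # Each site shares only its updated weight and sample count.
        updates = [(local_step(w, data), len(data)) for data in sites]
        w = federated_average(updates)
    return w


# Two hypothetical sites whose private data both follow y = 3x.
site_a = [(1.0, 3.0), (2.0, 6.0)]
site_b = [(0.5, 1.5), (1.5, 4.5), (3.0, 9.0)]
print(round(train([site_a, site_b]), 3))
```

The shared weight settles near 3 even though neither site ever sees the other's raw data, which is the property that makes the approach attractive when data cannot be pooled.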

“We know people get the best results from large language models trained on many languages,” he said. “So, we want to do that in science by taking diverse views of the same things to create better models.”

The Quantum Future

The atomic forces that Coffee studies may power tomorrow’s computers, the scientist explains.

“Imagine a stack of electron bubbles all in the same quantum state, so it’s a superconductor,” he said. “When I add one electron at the bottom, one pops to the top instantaneously because there’s no resistance.”

The concept, called entanglement in quantum computing, means two particles can switch states in lockstep even if they’re on opposite sides of the planet.

That would give researchers like Coffee instant connections between instruments like LCLS II and remote HPC centers training powerful AI models in real time.

Sounds like science fiction? Maybe not.

Coffee foresees a time when his experiments will outrun today’s computers, a time that will require alternative architectures and AIs. It’s the kind of big-picture thinking that excites him.

“I love the counterintuitiveness of quantum mechanics, especially when it has real, measurable results humans can apply — that’s the fun stuff.”