Ouster streamlines lidar data labeling with new partners Playment and Scale.AI

![]() Angus PacalaBlockedUnblockFollowFollowingDec 14

Angus PacalaBlockedUnblockFollowFollowingDec 14

Robotics is hard. Getting labeled data shouldn’t be.

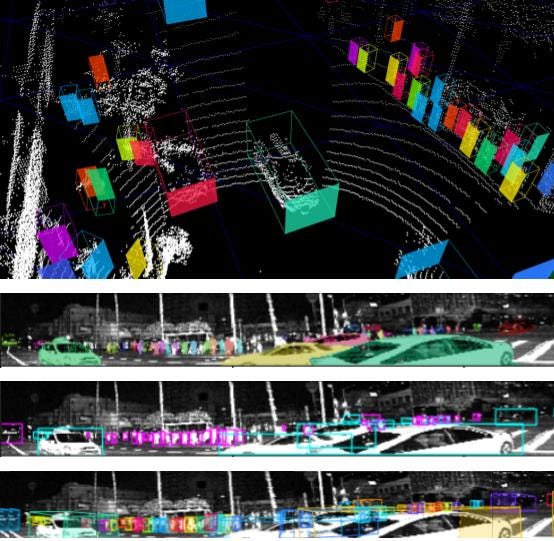

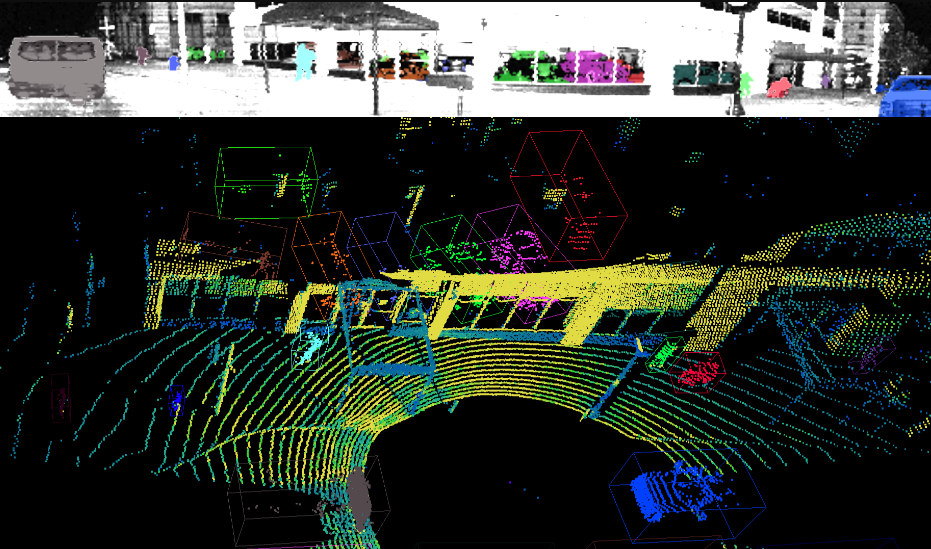

Visualization of pixelwise semantic masks perfectly correlated between 2D and 3D projections.

Visualization of pixelwise semantic masks perfectly correlated between 2D and 3D projections.

Last week, we announced a labeling partner program in conjunction with Playment and Scale.AI to help streamline lidar data labeling for Ouster’s rapidly growing customer base (more than 300 customers and counting!). We want to use this post to expand on why this will be an important step not just for Ouster’s customers, but for the machine learning industry at large.

For the past several months, we’ve worked with Playment and Scale.AI to develop new labeling tools that take advantage of the 2D-3D nature of Ouster’s lidar data to reduce costs by up to 50% and provide you with higher accuracy labels, faster labeling, more labeling options, and a streamlined process from the time of data capture to the time you begin to train your models.

The partner program also helps guarantee that a high standard of quality and a common set of labeling capabilities are available to all Ouster customers. Visit our website to see the most up-to-date list of labeling companies that are part of the program, as we intend to add more in the future.

We’ve also developed an open format for lidar data that makes it simpler to record, store, transfer, load, and label lidar data for customers and labelers alike. We’re open sourcing this format in the hopes that the rest of the industry standardizes around the work we’ve started (although it only supports structured lidar data). The lidar format will be included in an update to Ouster’s open source driver and enables customers to record OS-1 sensor data directly into this format so that it’s ready to be transferred to Playment, Scale, and others for labeling. More on this in January!

Read more about Playment’s integration with Ouster

Read more about Scale.AI integration with Ouster

The advantages of structured lidar data

Visualizing structured lidar data from an OS-1 sensor.

Visualizing structured lidar data from an OS-1 sensor.

Traditionally, lidars have been either spinning units or beam scanning ones. Typical spinning lidars tend to spin at a non-constant speed, causing the points to be spaced unevenly on every frame. Meanwhile, MEMS or scanning lidars tend to have a few beams scanning in a nonlinear, S-shaped curve. Neither of these are conducive to storing lidar data in a fixed grid for 2D deep learning methods, compressed data formats, and ease of labelling.

Thanks to our multi-beam flash lidar design, the Ouster OS-1 has the ability to output structured lidar data where horizontal and vertical angular spacing are always kept constant — just like a camera. This allows the lidar to output a fixed-size 2048 by 64 pixel depth map as well as intensity and ambient light images on every frame, allowing the use of convolutional neural networks, as well as massively easing the implementation of image storage and labeling workflows.

![]()

While RGB-D cameras and traditional flash lidars are also capable of outputting structured range data, neither class of sensors has comparable range, range resolution, field of view, or robustness in outdoor environments, compared to the Ouster OS-1. However, these shorter-range structured 3D cameras can still benefit from the work we’re doing, and we encourage manufacturers of these products to consider our approach.

A labeling workflow for structured lidar data

We’ve worked with our labeling partners to take advantage of our structured data in their labeling tools in order to minimize the cost of labeling, increase their capabilities, and improve the accuracy of the annotation significantly. Some examples include:

Providing synchronized 2D and 3D views to the annotator as an intuitive visual confirmation of the current task.Annotating a pixel-wise mask in the 2D lidar image and using the 3D point cloud to check the mask accuracy. Refining the mask within the 3D point cloud by adding or removing individual points or selecting clusters of points and having the 2D mask update.Using 3D bounding boxes to automatically generate 100% accurate 2D masks (semantic or instance segmentation), cuboids, or a bounding boxes in the 2D image.

Once you see this labeling flow in action, it’s clear how much more efficient and accurate it is. The alternative would be to duplicate this work for the 2D and 3D data:

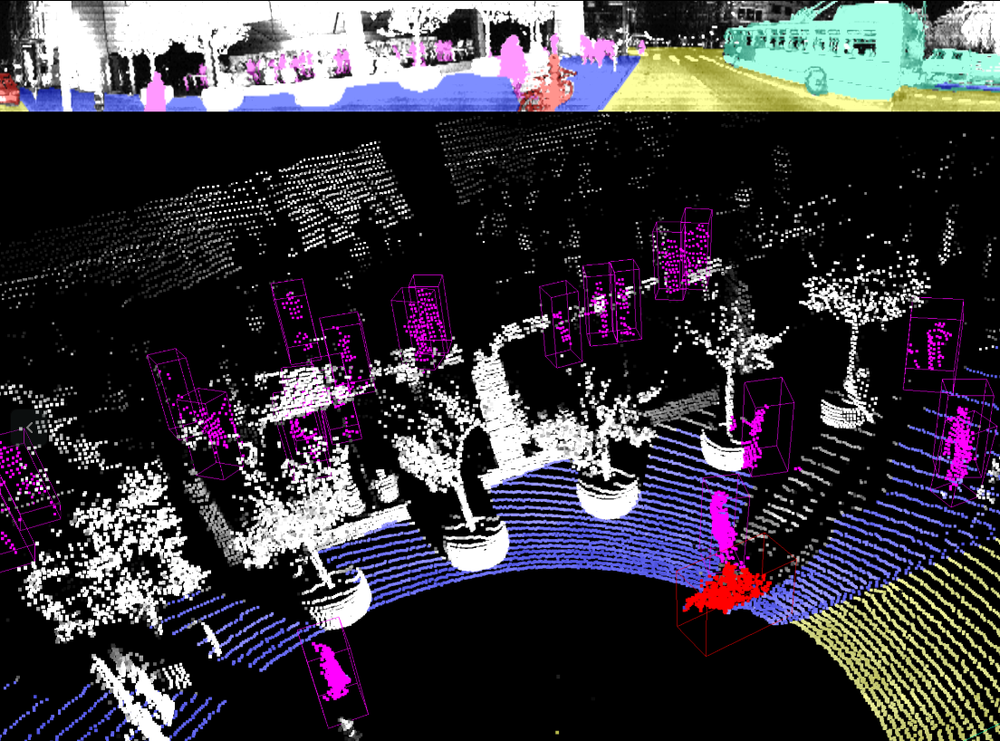

Perfect 2D pixel-wise masks (lower left image) generated automatically from 3D bounding boxes, and 2D masks are used to refine 3D bounding box accuracy.

Perfect 2D pixel-wise masks (lower left image) generated automatically from 3D bounding boxes, and 2D masks are used to refine 3D bounding box accuracy.

This approach yields the largest family of labels from 2D bounding boxes, semantic and instance masks to 3D bounding boxes (in 2D or 3D), point cloud semantic and instance segmentation with minimum effort and maximum accuracy possible.

Here we show hand labeled 3D bounding boxes in the top image and the automatically generated pixel-wise masks, bounding boxes, and cuboids in the 2D frames.

Here we show hand labeled 3D bounding boxes in the top image and the automatically generated pixel-wise masks, bounding boxes, and cuboids in the 2D frames.

What does this all mean?

10–50% Lower Costs and Faster Labeling

By eliminating the need to label both 2D and 3D data sets independently, our work reduces the amount of annotation needed by the annotators by up to 2x and ultimately reduces the cost to the customer.

By eliminating the need to label both 2D and 3D data sets independently, our work reduces the amount of annotation needed by the annotators by up to 2x and ultimately reduces the cost to the customer.

Smaller File Sizes

Our open source structured data format reduces file sizes and associated transfer costs by up to 97%. Data volumes are already so high that some customers are physically shipping hard drives to their labeling partners. This must stop!

Improved Accuracy of Labels

The accuracy of both 2D and 3D labels are improved by the transferability of the labels between 2D and 3D format providing more visual aids to annotators and 100% accurate 2D semantic masks. There are no more border issues in semantic segmentation. Even pedestrians and cars within shops and galleries are easily and accurately labeled! More accurate labels also means we can generate more accurate, measurable, and meaningful metrics when testing algorithms.

Notice the window frames of this car dealership are excluded from the vehicle masks automatically.

Notice the window frames of this car dealership are excluded from the vehicle masks automatically. Here, the customers in this retail store are perfectly segmented in the 2D image despite the trees, windows and furniture in front of, behind, and distributed among the people.

Here, the customers in this retail store are perfectly segmented in the 2D image despite the trees, windows and furniture in front of, behind, and distributed among the people.

Rotoscoping and green screens

If this all sounds slightly familiar, that may be because it is. There is another massive industry that has been investing for decades in technology and tools for generating pixel accurate masks in large 2D image sets: the film industry.

Rotoscoping and green screen are long established, often highly manual (far less so for green screen), methods of segmenting actors and other objects of interest from a camera scene for compositing. And just like the robotics industry, they have recently been developing automatic rotoscoping technology that leverages 3D cameras and deep learning.

As a final note, I wanted to point out many people at Ouster worked on this partnership and contributed to this post. If anything I’m just the messenger here. You won’t find a sharper, nicer team of people to work with, and I encourage you to take a look at our job openings as we’re hiring across the board.

Honestly, we could do this all day long.

Honestly, we could do this all day long.

Originally published at www.ouster.io.