By: Alan Agon, Product Manager; Sammy Omari, Engineering Manager; and Sameer Qureshi, Director, Product Management

The current benchmark for driving

Imagine you’re driving down the freeway, and you see a car cutting across multiple lanes to make the upcoming exit. You move your foot from the accelerator to the brake and slow down slightly in anticipation. The car just makes the exit, and you continue on your uneventful way to work, forgetting about the whole thing a few minutes later.

Humans have a knack for making complicated and ambiguous situations like this seem trivial. In an autonomous vehicle (AV), this ambiguity is handled by the planner, the main decision-making center, which sits in the middle of the AV stack and takes input from the perception system. The planner:

- Predicts how the scene will evolve over time (prediction);

- Simultaneously reasons about a set of potential actions for the AV (behavior planning); and,

- Generates the most appropriate AV trajectory (trajectory generation).

On top of ambiguity, the example above grows more complex as we add inputs to the planner, such as weather, the mood of the other driver, and countless other contextual parameters. Each added input multiplies the number of possible system states, a phenomenon known as the “curse of dimensionality”, and it doesn’t stop there. Each input can also be represented at increasing levels of fidelity, such as the various types of weather or the number of possible driver moods. Combine this complexity and ambiguity with probability, and a new issue emerges: the “long tail” of rare events, a vast set of possible events that each have a non-zero probability of occurring while driving.
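To make the growth concrete, here is a small back-of-the-envelope sketch. The input names and the number of levels per input are made-up illustrations, not figures from our planner; the point is only that the joint state count is the product of the per-input counts.

```python
from math import prod

# Hypothetical discretization: how many distinct values each planner input can take.
# These names and numbers are illustrative assumptions only.
levels = {
    "lead_distance": 20,
    "lead_speed": 20,
    "ego_speed": 20,
    "weather": 5,
    "driver_mood": 4,
}


def state_count(levels_per_input):
    """Number of joint states when every input is discretized independently."""
    return prod(levels_per_input.values())


coarse = state_count(levels)
# Doubling the fidelity of every input multiplies the count by 2 ** (number of inputs).
fine = state_count({name: 2 * n for name, n in levels.items()})

print(f"coarse grid: {coarse:,} states")
print(f"doubled fidelity: {fine:,} states")
```

With only five inputs, doubling the resolution of each one already multiplies the state count by 32, which is why enumerating every situation by hand does not scale.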

Much of the difficulty in building an autonomous vehicle (AV) is due to the ambiguity around what behaviors a human should adopt. While AVs have the potential to completely disrupt the transportation sector, as of today, humans are still the benchmark for driving.

Human-inspired driving

The DMV handbook and local rules of the road don’t tell us how much to slow down for a car crossing multiple lanes. Designing an autonomous vehicle to operate on our roads is complicated because there are often contradictory rules and references to ambiguous terms — like “right of way” and “until safe to proceed” — that can’t be formally defined. To further complicate this, the “measure of success” for an AV’s planner in any of these scenarios is subjective and a function of multiple factors, such as state and local traffic laws and drivers’ and passengers’ individual preferences.

While it’s clear that the driver from the scenario in the opening paragraph shouldn’t slam on the brakes on a freeway, it’s equally clear they shouldn’t floor the accelerator. So what is the appropriate response? Humans have calibrated their driving behavior to other human drivers, so deciding how much braking or acceleration is appropriate in a particular situation typically depends on what we’ve learned from past driving experiences.

Example: Applying human-inspired driving to AV planning

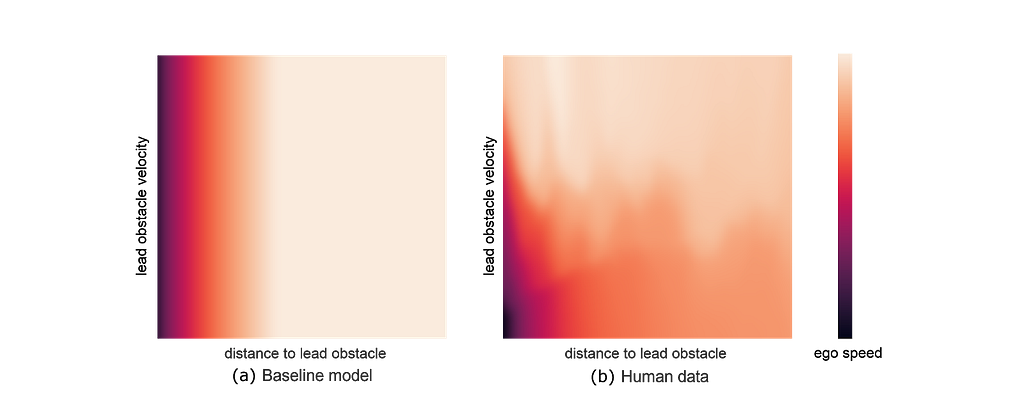

To define an appropriate speed for the example above, we took inspiration from humans. The two panels in the figure above show two model iterations of how we shape the speed of the AV (ego) as a function of the distance and speed of the vehicle cutting in ahead of us (lead obstacle). We started with a simplistic baseline model (a) that didn’t account for the velocity of the lead obstacle. We then improved the model by learning from human driving data (b) that the AV should slow down only marginally for a vehicle that cuts in at high velocity. Ultimately, this blended approach resulted in a more comfortable and natural ride that was tuned to human preferences.
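The difference between the two iterations can be sketched in a few lines. Everything here is a hand-written stand-in: the function names, the 40 m comfortable gap, and the blending weight are assumptions for illustration, whereas the real model (b) was learned from human driving data rather than written by hand.

```python
def baseline_speed(ego_speed, gap_m, comfortable_gap_m=40.0):
    """Model (a) stand-in: slow down in proportion to how short the gap is.

    Ignores the lead obstacle's velocity entirely.
    """
    ratio = max(0.0, min(1.0, gap_m / comfortable_gap_m))
    return ego_speed * ratio


def velocity_aware_speed(ego_speed, gap_m, lead_speed,
                         comfortable_gap_m=40.0, min_weight=0.1):
    """Model (b) stand-in: apply less of the baseline slowdown to a fast lead.

    closing is 0 when the lead is at least as fast as ego and 1 when it is stopped.
    """
    if ego_speed <= 0.0:
        return 0.0
    base = baseline_speed(ego_speed, gap_m, comfortable_gap_m)
    closing = max(0.0, min(1.0, (ego_speed - lead_speed) / ego_speed))
    # Always keep a small share of the slowdown, so a fast cut-in still
    # produces a marginal speed reduction rather than none at all.
    weight = min_weight + (1.0 - min_weight) * closing
    return ego_speed - weight * (ego_speed - base)


# Ego at 30 m/s, a vehicle cuts in 20 m ahead.
print(baseline_speed(30.0, 20.0))              # same answer whatever the lead speed
print(velocity_aware_speed(30.0, 20.0, 32.0))  # fast cut-in: marginal slowdown
print(velocity_aware_speed(30.0, 20.0, 15.0))  # slow cut-in: larger slowdown
```

In this toy version the baseline brakes just as hard for a car pulling away as for one we are closing on, which is the behavior that feels unnatural to passengers.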

The hierarchy of needs for AV behavior

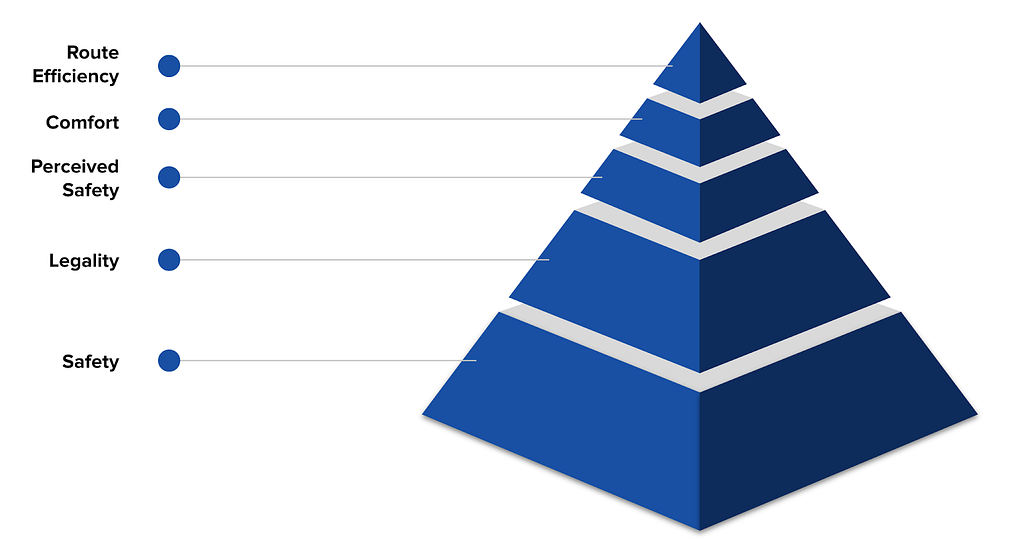

At Level 5, we recognized the need to generalize the approach for how to optimize the planner for everyone involved, including passengers and people near the AV. We took inspiration from Maslow’s hierarchy of needs and Asimov’s three laws of robotics to develop a hierarchy of needs for an AV’s behavior.

The most basic requirement of the hierarchy is safety. Whatever options the planner has to choose from, safety is the factor we always optimize for first. However, if safety were the only thing we optimized for, the solution would be easy: drive very slowly and cautiously, and slow down for even the slightest source of uncertainty. Clearly that wouldn’t provide a product with much utility, so we needed to add layers to the hierarchy.

Once we’ve ensured that the planned behavior is safe, we then ensure that the behavior is legal as defined by the driving laws in the jurisdiction where we’re operating. Next, we consider the subjective notion of perceived safety: minimizing any sense among passengers or other road agents that they are unsafe, even when actual safety is not at significant risk. For example, this might involve increasing the distance to a lead car or ensuring we are not getting too close to a lane divider, even when there’s little to no actual safety risk. The fourth parameter to optimize for is comfort, which covers aspects of the ride like g-forces that might make the passenger feel nauseous or uncomfortable. Assuming we’ve optimized for everything above, the final parameter we consider is route efficiency: getting to the destination in the quickest and most efficient way possible, which is an important factor for a transportation-as-a-service business.
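One way to read the hierarchy is as a strict priority ordering over candidate behaviors: compare on safety first, and only look at the next layer to break ties. The sketch below is an interpretation under that assumption, not a description of our production planner; the `Candidate` class, the integer cost levels, and the example maneuvers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """A candidate behavior with one cost level per layer (lower is better)."""
    name: str
    safety_cost: int
    legality_cost: int
    perceived_safety_cost: int
    comfort_cost: int
    efficiency_cost: int

    def priority_key(self):
        # Tuples compare element by element, so earlier layers always dominate.
        return (
            self.safety_cost,
            self.legality_cost,
            self.perceived_safety_cost,
            self.comfort_cost,
            self.efficiency_cost,
        )


def choose(candidates):
    """Pick the candidate that is best under the layered ordering."""
    return min(candidates, key=Candidate.priority_key)


candidates = [
    Candidate("hard_brake", 0, 0, 0, 3, 2),
    Candidate("ease_off", 0, 0, 0, 1, 1),
    Candidate("hold_speed", 0, 0, 2, 0, 0),
    Candidate("accelerate", 1, 0, 3, 1, 0),
]

print(choose(candidates).name)
```

Here `hold_speed` is the most comfortable and efficient option, but it loses on perceived safety, so the ordering selects `ease_off`. Costs are coarse integer levels on purpose: with continuous costs, a strict ordering would almost never reach the lower layers, so a practical system would need some form of bucketing or tolerance.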

A continuum of approaches to address the hierarchy

Planning in traditional robotics systems is usually based on expert system implementations. Traditional expert system-based planning software provides the determinism and interpretability that are necessary to reason about the base layers in our hierarchy, such as safety and legality. However, in the human braking chart above, the underlying function is unclear, so we can’t use an expert system approach alone. We need a technique like machine learning, which can infer a mathematical model of human behavior from data. When applied well, machine learning can lead to far more human-like driving.

We believe using a combination of rule-based systems, learning-based systems, and human driving data will result in an overall system-level solution that exhibits the best of all worlds. Using this hybrid approach in scenarios such as cut-ins allows us to keep safety as our number one priority — and enables us to also cater to the more subjective layers in our hierarchy.
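A minimal sketch of how such a hybrid might fit together for the cut-in case: a learned model proposes a speed, and a deterministic rule layer caps it. The function names, the 10 m minimum gap, and the toy stand-in for the learned model are assumptions made for illustration only.

```python
def rule_upper_bound(gap_m, lead_speed, speed_limit, min_gap_m=10.0):
    """Deterministic cap on speed.

    Never exceed the speed limit; inside the minimum gap, also never
    travel faster than the lead obstacle.
    """
    if gap_m < min_gap_m:
        return min(speed_limit, lead_speed)
    return speed_limit


def hybrid_speed(learned_model, ego_speed, gap_m, lead_speed, speed_limit):
    """Take the learned suggestion, then clamp it with the rule layer."""
    suggestion = learned_model(ego_speed, gap_m, lead_speed)
    bound = rule_upper_bound(gap_m, lead_speed, speed_limit)
    return max(0.0, min(suggestion, bound))


def toy_learned_model(ego_speed, gap_m, lead_speed):
    """Stand-in for a model trained on human driving data: ease off by 5%."""
    return 0.95 * ego_speed


# Comfortable gap: the learned suggestion passes through unchanged.
print(hybrid_speed(toy_learned_model, 30.0, 25.0, 32.0, speed_limit=29.0))
# Gap below the minimum: the rule layer caps speed at the lead's speed.
print(hybrid_speed(toy_learned_model, 30.0, 5.0, 20.0, speed_limit=29.0))
```

The design choice is that the learned component only ever shapes behavior inside the envelope the rules allow, so the interpretable layer keeps the final say on safety and legality.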

Building a better decision-making center for AVs

Whether it’s the common scenario of being cut off on a freeway or one of the rare long-tail events, an AV’s planner needs to correctly and consistently choose the right behavior. While there is no objective definition of the “right” behavior, using the AV hierarchy of needs and then applying a mix of systems to address each level helps resolve this ambiguity. Paired with a rigorous testing process, this mixture of expert and learning-based systems has the potential to ultimately introduce a new benchmark for safety.

There are many ways to think about solving problems like this. If you find these types of challenges exciting, Level 5 is hiring. Check out our open roles, and be sure to follow our blog for more technical content.

Designing the Decision-making Center of an Autonomous Vehicle was originally published in Lyft Level 5 on Medium.