By Peter Ondruska, Head of AV Research and Sammy Omari, Head of Motion Planning, Prediction, and Software Controls

Over the last few years, machine learning has become an integral part of self-driving development. It has unlocked significant progress in perception, but its use remains limited across the rest of the autonomy stack, particularly in planning. We believe that progress in behavior planning will trigger the next major wave of success for autonomy, and that the judicious use of machine learning is the key to achieving it. Such machine learning approaches need large behavioral datasets, but where will these datasets come from?

Employing machine learning across the self-driving stack

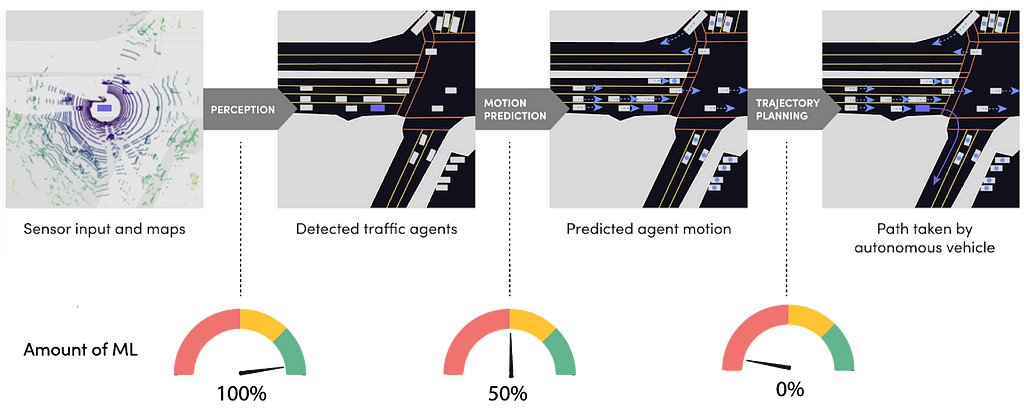

First, let’s examine where machine learning is or isn’t being used successfully to address the fundamental questions for all modern self-driving systems:

Perception: What’s around the car? What’s the position of other cars, pedestrians, and cyclists? AV developers have successfully taught self-driving vehicles to see by applying the approach that ImageNet popularized for image classification: training deep convolutional neural networks on a large dataset of human-labelled examples. With such a dataset, AVs can detect cars and pedestrians in a stream of camera and lidar data. For this application of machine learning, developers just need a large enough set of human-labelled data.

Motion Prediction: What will likely happen next? Teaching an AV to anticipate how a situation may develop is much more nuanced than teaching it to see. For a time, this was handled with simple approaches, like extrapolating future positions based on current velocity. More recently, machine learning systems trained on historical data have proven more effective at predicting the future. To go deeper on prediction, see our Motion Prediction competition.
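To make the "simple approach" concrete, here is a minimal sketch of constant-velocity extrapolation. The function name, the 2D position and velocity inputs, and the example numbers are our own illustrative assumptions, not code from a production stack.

```python
import numpy as np

def constant_velocity_forecast(position, velocity, horizon_s, dt):
    """Extrapolate future (x, y) positions assuming velocity never changes."""
    position = np.asarray(position, dtype=float)
    velocity = np.asarray(velocity, dtype=float)
    # Time offsets dt, 2*dt, ... up to the forecast horizon.
    steps = int(round(horizon_s / dt))
    times = dt * np.arange(1, steps + 1)
    # One row per future timestep: p(t) = p0 + v * t
    return position + times[:, None] * velocity

# Hypothetical agent at (10 m, 0 m) moving at 5 m/s along x,
# forecast 2 s ahead in 0.5 s steps.
forecast = constant_velocity_forecast([10.0, 0.0], [5.0, 0.0], horizon_s=2.0, dt=0.5)
print(forecast[:, 0])  # x positions: 12.5, 15.0, 17.5, 20.0
```

A baseline like this has no way to anticipate a car slowing for a turn or a pedestrian stepping off the curb, which is the gap that learned predictors trained on historical data aim to close.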

Trajectory Planning: What should an AV do? Trajectory planning is how an AV decides what to do at any given moment. It’s the most difficult remaining engineering challenge in self-driving, and one where, so far, very little machine learning is used across the industry. Instead, AVs make decisions based on hard-coded rules for what to do in every scenario, with predetermined tradeoffs. These tradeoffs could be between factors like the smoothness of the ride and negotiations with other cars, pedestrians, or cyclists. This works for simple scenarios but fails in rare ones, because rules that cover every such case are increasingly hard to define.
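As a rough illustration of what "hard-coded rules with predetermined tradeoffs" can look like, the sketch below scores a few candidate maneuvers with hand-picked weights. Every feature, weight, threshold, and candidate here is hypothetical and chosen only for the example; real planners are far more involved.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    name: str
    max_jerk: float    # m/s^3, a stand-in for ride smoothness
    min_gap_m: float   # closest distance to any other road user
    progress_m: float  # distance covered along the route

# Hand-tuned weights: these are the "predetermined tradeoffs".
W_JERK, W_GAP, W_PROGRESS = 1.0, 4.0, 0.5
MIN_SAFE_GAP_M = 2.0

def cost(c: Candidate) -> float:
    # Hard rule: never accept a maneuver that gets too close to others.
    if c.min_gap_m < MIN_SAFE_GAP_M:
        return float("inf")
    # Soft tradeoff: penalize jerk and small gaps, reward progress.
    return W_JERK * c.max_jerk + W_GAP / c.min_gap_m - W_PROGRESS * c.progress_m

def choose(candidates):
    return min(candidates, key=cost)

candidates = [
    Candidate("brake_hard", max_jerk=6.0, min_gap_m=8.0, progress_m=5.0),
    Candidate("keep_speed", max_jerk=0.5, min_gap_m=1.5, progress_m=20.0),
    Candidate("slow_gently", max_jerk=1.5, min_gap_m=4.0, progress_m=12.0),
]
best = choose(candidates)
print(best.name)  # slow_gently
```

Each new situation tends to demand another feature, another threshold, or another retuning of the weights, which is why this style of planner becomes harder to maintain as rare scenarios accumulate.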

Today, the industry is limited by rules-based systems for trajectory planning and motion prediction, which remain the missing pieces of the self-driving brain. We’ve reached a turning point in how AV programs address these challenges.

At Lyft, we believe real-world driving behavior examples play a major role in finding the answer. Just as with human drivers, only with true “experience” will vehicles be able to both see and drive with fully-realized autonomy.

Exploring a novel machine learning approach to planning

To make self-driving a reality at scale, we need a radically new approach — one that can learn from human driving demonstrations and experiences instead of complex hand-crafted rules.

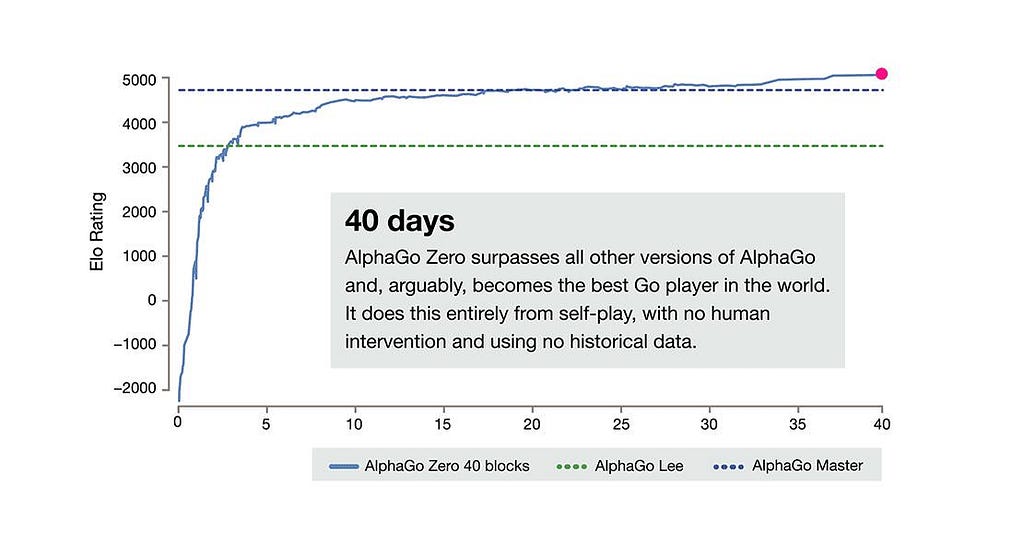

Take, for example, DeepMind’s AlphaGo, a program that beat a world champion at Go. Instead of following hand-written rules, AlphaGo used deep neural networks that were trained on games played by human experts and then improved through self-play. Learning from human demonstrations in this way is known as imitation learning, and inverse reinforcement learning is a closely related technique. A similar approach can be used to learn from human driving. In essence, this is the difference between learning the rules of the road from a DMV handbook and learning by driving first-hand.

This approach is novel to self-driving technology and it’s what we’re developing at Level 5. It involves two core components:

- New algorithms that apply next-generation machine learning to address the problems of planning and prediction.

- An immense number of human driving examples, giving these algorithms a vast dataset of real experiences they can learn from and emulate.
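To show how the two components fit together, here is a toy sketch of behavioral cloning, the simplest form of imitation learning: a policy is fit by supervised regression on recorded (state, action) pairs. The demonstrations are synthetic, and the state features, the linear policy, and the "driver" coefficients are all assumptions made for illustration. This is not the Level 5 planner.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic "human demonstrations" (made up for this example).
# state  = [gap to lead vehicle (m), relative speed (m/s)]
# action = acceleration the driver applied (m/s^2)
states = rng.uniform(low=[5.0, -5.0], high=[50.0, 5.0], size=(500, 2))
true_weights = np.array([0.05, 0.4])  # hypothetical driver behavior
true_bias = -1.0
actions = states @ true_weights + true_bias + rng.normal(0.0, 0.05, size=500)

# Behavioral cloning: fit state -> action with ordinary least squares.
features = np.hstack([states, np.ones((len(states), 1))])  # add bias column
params, *_ = np.linalg.lstsq(features, actions, rcond=None)

def policy(state):
    """Predict the acceleration a human would choose in this state."""
    return float(np.append(state, 1.0) @ params)

# Query the learned policy: 20 m gap, closing at 1 m/s.
predicted = policy([20.0, -1.0])
print(round(predicted, 2))  # close to -0.4 for this synthetic driver
```

No rule about following distance was written down anywhere; the behavior was recovered from examples alone. With real driving data, the linear model would give way to a far more expressive one, and the quality and coverage of the demonstrations would matter much more.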

Building new datasets to train the vehicles

Once these algorithms exist, the remaining challenge is how to acquire data to feed them. This requires a completely new class of datasets that detail real-world driving scenarios at scale. We believe these unprecedented datasets can be acquired through a rideshare network that captures what’s happening on the road every day, ranging from basic driving behavior to rare and challenging scenarios. At Lyft, we’re tapping into our nationwide rideshare network, available to 95% of the U.S. population, to study real-world driving examples that are informing the development of our own self-driving technology and machine learning systems.

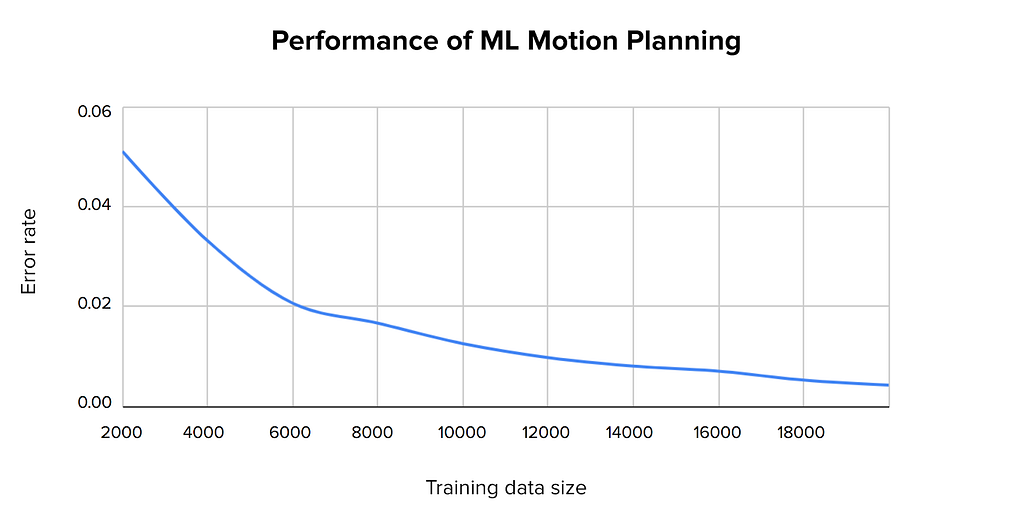

We’ve learned through our own experience that this paradigm works: the more behavioral examples we feed our planning system, the better our self-driving system performs.

With access to one of the largest rideshare networks in the world, we’re building the behavioral dataset needed to feed machine learning for our planning system. We’ve also shared the largest prediction dataset in the industry, and we invite the research community to join us in solving this challenge.

If you’re interested in learning more about how Lyft is navigating the autonomous road ahead, continue to follow this blog for updates and follow @LyftLevel5 on Twitter. To join Lyft in our self-driving development, visit our careers page.

The Next Frontier in Self-Driving: Using Machine Learning to Solve Motion Planning was originally published in Lyft Level 5 on Medium, where people are continuing the conversation by highlighting and responding to this story.