Graphics chip giant Nvidia mopped the floor with its competition in a set of benchmark tests released Wednesday afternoon, demonstrating better performance on a host of artificial intelligence tasks.

The MLPerf benchmark results, announced by the MLPerf organization, an industry consortium that administers the tests, showed Nvidia achieving better speed on a variety of tasks that use neural networks, from categorizing images to recommending which products a person might like.

Predictions, also known as inference, are the part of AI where a trained neural network produces output on real data, as opposed to the training phase, when the neural network is first being refined. The latest tests cover prediction tasks; benchmark results on training tasks were announced by MLPerf back in July.

Across the board, Nvidia’s results trounced those of Intel’s Xeon CPUs and of field-programmable gate arrays from Xilinx. Many of the scores pertain to Nvidia’s T4 chip, which has been on the market for some time, but even more impressive results were reported for its A100 chips, unveiled in May.

The MLPerf organization does not crown a winner, or make any statements about relative strength. As an industry body it remains neutral and merely reports test scores.

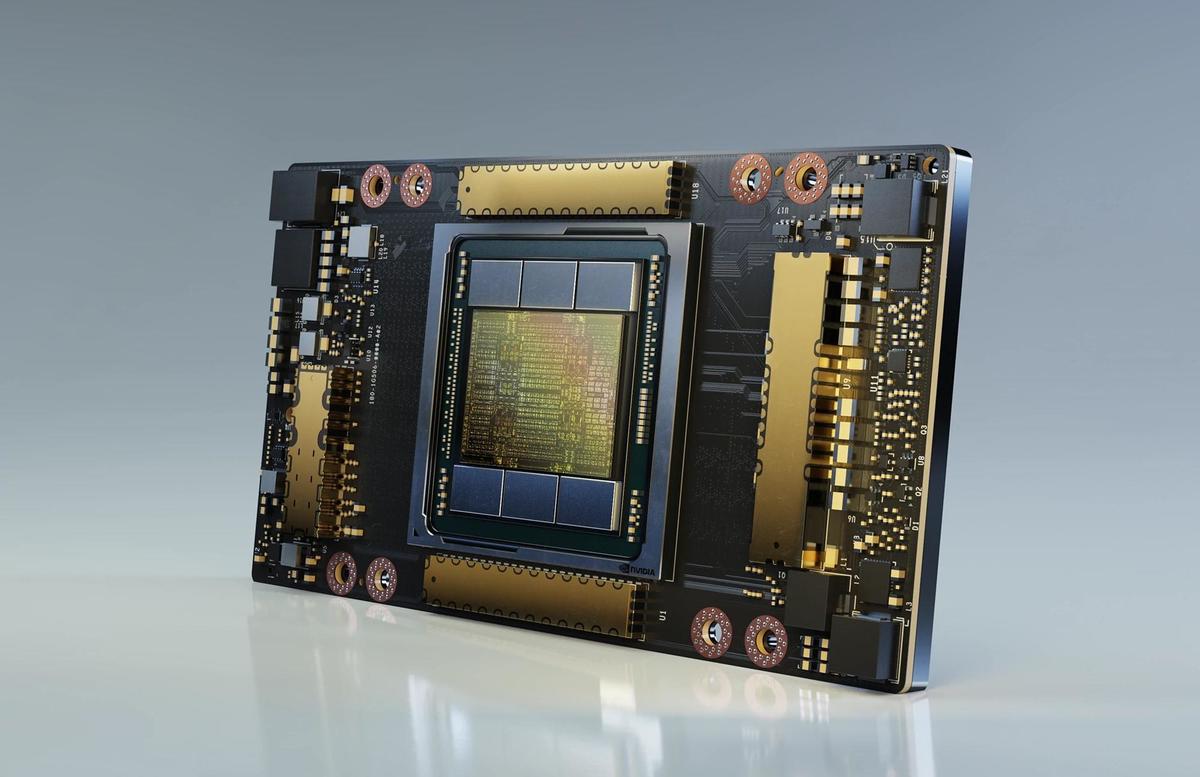

Nvidia’s A100, which took many top spots in the MLPerf benchmarks, is the latest version of Nvidia’s flagship processor. It was introduced in May. (Image: Nvidia)

But Nvidia issued a statement trumpeting its victory, stating, “NVIDIA won every test across all six application areas for data center and edge computing systems in the second version of MLPerf Inference.” Nvidia also published a blog post in which it provided handy charts to show its superiority.

The full results can be viewed in a spreadsheet provided by MLPerf. The way the competition is organized, various vendors submit results on a standard test of machine learning tasks, using systems that those vendors put together. Vendors who participated ranged from very large traditional computer companies, such as Dell and Fujitsu, to Dividiti, a U.K.-based technology research firm that evaluates hardware for AI as a consulting service.

The results include metrics such as the throughput and latency for a given task. For example, when measuring how Nvidia did on the classic ImageNet task, involving categorizing photos, MLPerf records the number of queries per second the system was able to handle. More queries per second is better. The computer systems contain multiple chips, and so the score is usually divided by the number of chips to obtain a per-chip score.

The highest throughput recorded for ImageNet, using the ResNet neural net, for example, was for an Nvidia A100 system with eight chips running in conjunction with an AMD Epyc processor. That system was submitted by Inspur, a Chinese white-box server maker. The Nvidia chips achieved a total throughput of 262,305 queries per second. Dividing by eight chips yields a top score of 32,788 queries per chip per second.
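The per-chip arithmetic described above can be reproduced in a few lines of Python. This is only an illustrative sketch: the function name `per_chip_throughput` and the variable names are assumptions made for this example and are not part of any MLPerf tooling. The two input figures are the ones reported for the Inspur system.

```python
def per_chip_throughput(total_qps: float, num_chips: int) -> float:
    """Divide a system's total queries per second by its accelerator count."""
    if num_chips <= 0:
        raise ValueError("num_chips must be a positive integer")
    return total_qps / num_chips


# Figures reported in the article for the Inspur A100 system.
inspur_total_qps = 262_305  # total queries per second
inspur_chips = 8            # number of Nvidia A100 chips

score = per_chip_throughput(inspur_total_qps, inspur_chips)
print(f"{score:,.0f} queries per chip per second")
```

Normalizing by chip count is what makes it possible to compare systems built with different numbers of accelerators, although it says nothing about cost or power draw.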

MLPerf released a spreadsheet showing the scores by throughput and latency on numerous AI tasks for each competing system. This snippet shows some of the computer systems submitted with Intel or Nvidia processors. (Image: MLPerf)

In contrast, systems using two, four, and eight Intel Xeons delivered far lower throughput, with the best result being 1,062 images per second.

The report, which is released every year, contains a number of firsts this year. It is the first year that the MLPerf group is separating out the data center server tests from tests performed on so-called edge equipment. Edge equipment is infrastructure that sits outside traditional data center servers, including autonomous vehicles and edge computing appliances. For example, some vendors submitted test results using Nvidia’s Xavier computing system, which is generally viewed as an autonomous vehicle technology.

It is also the first year that the group has reported specific results for mobile computing. Measurements were recorded on tasks for three leading smartphone processors that have embedded AI circuitry: MediaTek’s Dimensity chip, Qualcomm’s Snapdragon 865, and Samsung Electronics’ Exynos 990. Apple’s A series processor did not take part.

There was also a suite of test results for laptop computers running Intel Core processors. While Intel’s numbers may not have stood up to Nvidia’s, the company succeeded in having its software system, OpenVINO, represented on most of the benchmark tasks. That’s an important milestone for Intel’s software ambitions.

This year’s set of tests is much broader than in past years, David Kanter, head of MLPerf, said in an interview with ZDNet.

“We added four new benchmarks, and they are very customer driven,” said Kanter. To get around the danger that the test takers would write the test, said Kanter, MLPerf consulted machine learning practitioners across the AI industry on the best tests to construct.

“We’re also specializing a bit, we have the separate edge and data center division,” he observed. The dedicated reports for mobile phones and notebooks are “a really big thing for the whole community, because there are no open, community-driven, transparent benchmarks for ML in notebooks or smartphones or tablets at all.”

“We think that’s a really big opportunity, to help shine some light on what’s going on there.”

MLPerf is proceeding on several fronts to improve its benchmarks and extend their relevance. For example, it is working on its own data sets to supplement what is done with existing data sets such as ImageNet.

“Building out data sets is, the analogy we like to use, is that the basis of the industrial revolution is like coal and steel and mass production, and the ability to measure things well, and we see some direct analogies there,” said Kanter.

“What’s going to take machine learning from being a very black magic-y, boutique, rarified thing to being ordinary, part of it is going to be good metrics, good data sets,” he said. “Data sets are the raw ingredients.”

In addition, the group is developing a mobile application for Android and iOS that will allow an individual to benchmark the AI performance of their smartphone. That software should appear first on Android, in about a month or two, he said, followed by the iOS version shortly thereafter.

“I would love to have it in the hands of everyone, and have them post their results online,” said Kanter of the mobile phone app.