By: Robert Morgan, Engineering Director, and Mason Lee, Technical Program Manager

To build an autonomous vehicle (AV), we need to be able to safely and efficiently modify and test its software and hardware stacks. While on-road testing may seem like an effective way to do this, relying on it alone is simply unrealistic. It’s estimated that it would take more than 10 billion miles to collect enough data to fully validate a self-driving vehicle, which is roughly 400,000 trips around the Earth.
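
As a rough check on that comparison, the arithmetic can be sketched as follows. The Earth's equatorial circumference of about 24,901 miles is an assumption brought in for illustration; it is not part of the original estimate.

```python
# Rough sanity check of the "400,000 trips around the Earth" comparison.
# Assumption: equatorial circumference of about 24,901 miles.
EARTH_CIRCUMFERENCE_MILES = 24_901
VALIDATION_MILES = 10_000_000_000  # "more than 10 billion miles"

trips_around_earth = VALIDATION_MILES / EARTH_CIRCUMFERENCE_MILES
print(f"{trips_around_earth:,.0f} trips around the Earth")
```

The result lands slightly above 400,000, which is consistent with the rounded figure in the text.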

Let’s put this into perspective by considering the level of engineering required for a self-driving vehicle to complete an action that human drivers perform frequently and almost instinctively: lane changing.

Human drivers instinctively consider the variables that impact their decision to change lanes: their own speed, the speeds of other vehicles on the road and the distances between them, congestion, whether a lane change even makes sense given an upcoming turn in the route, and more. For an autonomous vehicle to make the same decision, models for each of these variables must be developed and tested over and over (and over) again, which would take an astronomical amount of time and resources if done solely on-road.

Whether making lane changes or executing more complex scenarios, it’s essential to have a scalable, virtual option to effectively validate AVs as we accelerate toward a self-driving future.

The digital co-pilot to AV road testing

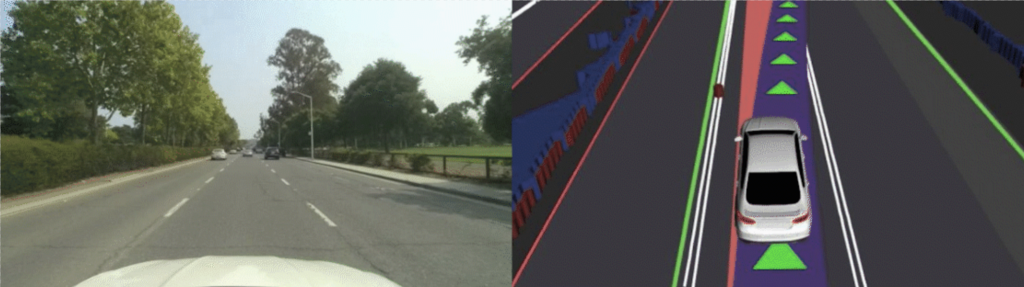

At Lyft, we supplement on-road testing with virtual validation to continuously test our self-driving vehicles’ software and hardware systems. This method involves running a high volume of virtual missions in a representative simulation while measuring performance along the way. We then evaluate the results to make necessary modifications to our software and hardware stacks, and feed the learnings back into the virtual validation system to improve autonomy performance, simulation, evaluation, and test coverage over time.

In addition to eliminating the need to complete miles of on-road missions to gather data for endless scenarios, virtual validation offers a number of other benefits:

- Safety. It allows us to test our system in a safe, virtual setting without taking on the risks that come with physical testing. We can also virtually test against rare edge cases — like a car slamming on its brakes in front of the AV — allowing us to proactively improve the performance and safety of our stack.

- Focus. In simulation, we are in control of the scenarios that the AV encounters. This means we can skip uneventful miles and focus on challenging scenarios that offer us more critical learning opportunities to improve our systems. We can also easily alter and test specific variables within a scene, like the types of traffic agents around us or the speed of oncoming vehicles. This allows us to extract more value from our testing, as opposed to spending time collecting uneventful data.

- Reproducibility. We have the resources to safely reproduce scenarios with vehicles on-road; however, doing so is often time-consuming and requires a lot of manual setup. With virtual validation, reproducibility is built in and we can easily study scenario variations in parallel. Disengagements that occur in simulation can be easily played back, altered, replayed, and shared.
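
To make the idea of altering specific variables within a scene concrete, here is a minimal sketch of a parameter sweep over the hard-braking edge case mentioned above. It is not Level 5's simulator: the `BrakingScenario` class, the toy point-mass model, and every numeric value (speeds, decelerations, reaction time) are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class BrakingScenario:
    """Hypothetical scenario: a lead vehicle brakes hard in front of the AV."""
    initial_gap_m: float    # gap to the lead vehicle when it starts braking
    speed_mps: float        # both vehicles start at this speed
    lead_decel_mps2: float  # how hard the lead vehicle brakes


def min_gap_m(s, av_decel_mps2=6.0, av_reaction_s=0.5, dt=0.01):
    """Step both vehicles forward in time and return the smallest gap seen.

    Assumed toy model: constant decelerations, and the AV starts braking
    only after a fixed reaction delay.
    """
    gap = smallest = s.initial_gap_m
    lead_v = av_v = s.speed_mps
    step = 0
    while av_v > 0.0:  # once the AV has stopped, the gap can no longer shrink
        lead_v = max(0.0, lead_v - s.lead_decel_mps2 * dt)
        if step * dt >= av_reaction_s:
            av_v = max(0.0, av_v - av_decel_mps2 * dt)
        gap += (lead_v - av_v) * dt
        smallest = min(smallest, gap)
        step += 1
    return smallest


def sweep(gaps_m, lead_decels_mps2, speed_mps=15.0):
    """Evaluate every combination; True means the AV avoided a collision."""
    results = {}
    for gap, decel in product(gaps_m, lead_decels_mps2):
        scenario = BrakingScenario(gap, speed_mps, decel)
        results[scenario] = min_gap_m(scenario) > 0.0
    return results


results = sweep(gaps_m=[5.0, 10.0, 20.0, 40.0], lead_decels_mps2=[3.0, 6.0, 9.0])
failures = [s for s, ok in results.items() if not ok]
for s in failures:
    print(f"collision: gap={s.initial_gap_m} m, lead decel={s.lead_decel_mps2} m/s^2")
```

Because the model has no randomness, rerunning the same sweep yields the same results, which is the property that makes a failing scenario easy to replay, alter, and share. Each combination is also independent of the others, so the variations could be evaluated in parallel.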

Level 5’s approach to virtual validation

Virtual validation is not just a “final exam” we use to evaluate the performance of our software and hardware stacks for real-world deployment. It’s an approach we leverage and fuse with on-road vehicle testing throughout the AV development process.

Virtual validation is a rigorous, continuous process at Level 5. Every week, we collect our individual teams’ latest releases into a unified software update that we refer to as the “candidate.” Before we deploy the candidate on the AV fleet, it must run through a suite of virtual tests:

- Planning and Integration Regression Tests. This collection of tests includes feature-specific scenarios as well as replications of previous events and issues. This ensures that what has been working continues to work.

- Virtual Intervention Tests. At Level 5, we measure the number of human interventions per 1,000 miles to evaluate the high-level performance of our autonomy stack both on-road and in simulation. The Virtual Intervention Test consolidates scenarios seen in previous releases and replays the logs using the updated autonomy stack. The key goal in this stage is to detect potential issues in simulation using a high volume of real-world data.

- Full System Tests. At this stage, we evaluate all the software and hardware components of an AV, without the physical car itself. In our hardware-in-the-loop (HIL) testbed environment, we’re able to run full system tests for system-level characteristics that are separate from the AV’s driving behavior, including execution performance and timing, memory usage, and latency.
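
The metric named under Virtual Intervention Tests reduces to a simple rate. A minimal sketch follows; the function name and the counts are made up for illustration and are not Level 5 data.

```python
def interventions_per_1000_miles(interventions: int, miles: float) -> float:
    """Human interventions normalized to 1,000 miles driven (or replayed)."""
    if miles <= 0:
        raise ValueError("miles must be positive")
    return 1000.0 * interventions / miles


# Hypothetical numbers: the same replayed logs run against two releases.
previous_release = interventions_per_1000_miles(interventions=12, miles=8_000)
candidate = interventions_per_1000_miles(interventions=9, miles=8_000)

print(f"previous: {previous_release}, candidate: {candidate}")
print("candidate improved" if candidate < previous_release else "candidate regressed")
```

Normalizing by distance is what lets the same number be compared across on-road runs and simulated replays of different lengths.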

Once the stages of virtual testing are complete, the surfaced issues are sent to our Triage team for evaluation. They review the virtual disengagements, failing tests and diagnostics, and monitor the various automated metrics (like road law violations) for each candidate. Once the issues from virtual testing are addressed, we deploy the candidate onto our AVs for physical world testing. This allows us to not only validate the results from virtual testing, but also to feed these new real-world scenarios back into the virtual testing pipeline for future candidates.
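
The weekly flow described above can be sketched as a simple gate: run each virtual stage against the candidate, collect the surfaced issues for triage, and deploy only when none remain open. Everything in this sketch is hypothetical, including the stage functions, which are stubs that return placeholder issue strings rather than real test output.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class StageResult:
    stage: str
    issues: List[str] = field(default_factory=list)


# Stub stages (assumptions): each takes a candidate ID and returns issues found.
def regression_tests(candidate: str) -> List[str]:
    return []


def virtual_intervention_tests(candidate: str) -> List[str]:
    return ["placeholder: virtual disengagement in a replayed log"]


def full_system_tests(candidate: str) -> List[str]:
    return []


STAGES: List[Tuple[str, Callable[[str], List[str]]]] = [
    ("Planning and Integration Regression Tests", regression_tests),
    ("Virtual Intervention Tests", virtual_intervention_tests),
    ("Full System Tests", full_system_tests),
]


def run_virtual_suite(candidate: str) -> List[StageResult]:
    """Run every stage in order and record the issues each one surfaces."""
    return [StageResult(name, check(candidate)) for name, check in STAGES]


def ready_for_on_road(results: List[StageResult]) -> bool:
    """The candidate is deployable only when no stage has open issues."""
    return all(not r.issues for r in results)


suite_results = run_virtual_suite("candidate-example")
for r in suite_results:
    print(f"{r.stage}: {len(r.issues)} issue(s) sent to triage")
print("deploy to fleet" if ready_for_on_road(suite_results) else "hold for triage")
```

In practice, triage involves human review and automated metrics rather than a single boolean, so the gate here only illustrates the ordering of the steps.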

Leveraging real-world data to focus on the right scenarios

Virtual validation enables us to simulate specific scenarios at scale and chip away at the target of 10 billion miles driven. But how do we identify and address the challenging scenarios that require additional testing and virtual validation? The answer lies in real-world datasets.

At Lyft, we have the opportunity to tap into data from our nationwide rideshare network to study real-world driving scenarios that can inform the development of our own self-driving technology. This data helps us focus both what we test and how we test it. For example, it can surface interesting events, such as an object falling out of a truck bed, and it can inform how we evaluate naturalistic maneuvers, such as whether the AV changes lanes in a way that’s comfortable for passengers.

Virtual validation is essential to the development of self-driving technology. It’s safer, faster, and produces more complete answers about the performance and safety of AVs in various scenarios. By combining virtual validation with on-road testing and real-world data, Level 5 can accelerate autonomous driving technology for years to come.

Follow this blog for updates and follow @LyftLevel5 on Twitter to continue to learn about Lyft’s road to autonomy. Visit our careers page to join Lyft in the self-driving movement.

Virtual Validation: A Scalable Solution to Test & Navigate the Autonomous Road Ahead was originally published in Lyft Level 5 on Medium.