For a decade and a half, Nvidia has been pushing its way into the datacenter, making its presence felt with GPU accelerators that are designed to improve the performance and power efficiency of servers in HPC and enterprise compute environments and to expand the opportunities for running highly parallel workloads.

In recent years, Nvidia co-founder and chief executive officer Jensen Huang has said that the company would focus intensely on the burgeoning artificial intelligence field, arguing that AI would eventually find its way into almost every area of IT, from enterprise datacenters to scientific institutions and the emerging public clouds. More recently, Nvidia has added to its datacenter arsenal, grabbing interconnect vendor Mellanox Technologies for $6.9 billion last year and bidding $40 billion in its current attempt to buy chip designer Arm Holdings, a deal that the vendor hopes to close early in 2022.

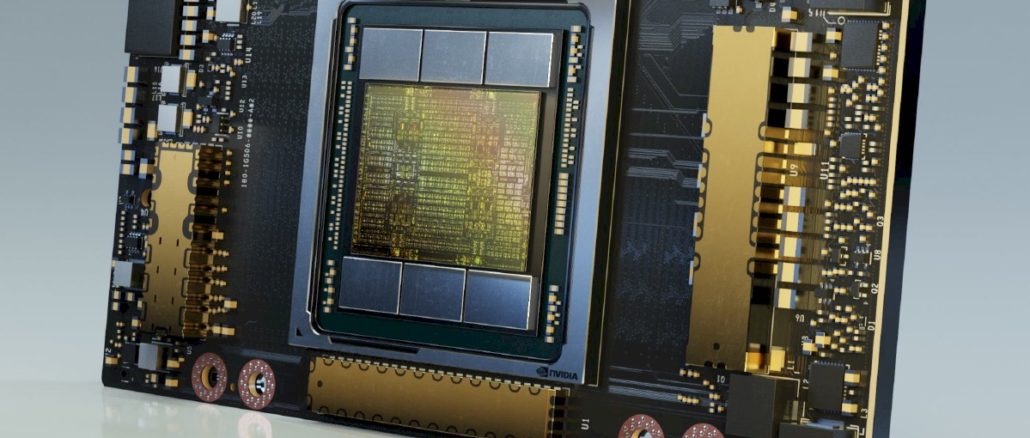

Nvidia has expanded its hardware offerings with a broader array of GPU accelerators, DGX (below) and HGX platforms and BlueField DPUs – as well as its upcoming Arm-based “Grace” CPU, due in 2023 – and has a growing AI-focused software business.

As we recently noted, these and other efforts have created a datacenter business for Nvidia that is continuing to ramp. The financial numbers for its latest fiscal quarter, which ended May 2, showed sales jumping 61.1 percent – hitting $5.66 billion – and a datacenter business that exceeded $2 billion in sales for the first time. If the numbers hold and such products as CPUs, DPUs and networking do as well as the company hopes, Nvidia could take a significant portion of rival Intel’s currently dominant share of the datacenter chip market.

Much of that will be on the back of the company’s embrace of AI. During the call with financial analysts to discuss the quarterly earnings, Huang expressed optimism about Nvidia’s datacenter business, noting that the company is “really in a great position there to fuse, for the very first time, simulation-based approaches with data-driven approaches that are called artificial intelligence. So across the board, our datacenter business is gaining momentum. We see great strength now, and it is strengthening and we are really set up for years of growth in the datacenter.”

At Nvidia’s virtual GPU Technology Conference (GTC) in October 2020, where he unveiled advances in AI around everything from healthcare to robotics to videoconferencing, the CEO talked about what he called the current “age of AI,” saying that the company is “all in to advance and democratize this new form of computing.” In April at a GTC event, Huang spoke about more initiatives at Nvidia, including the Grace CPU specialized for HPC and AI workloads, the BlueField-3 data processing unit (DPU), software built on Nvidia AI and Nvidia Omniverse, a platform for collaboration and training autonomous machines.

At the same event, Nvidia announced the latest generation of its DGX SuperPod AI supercomputer, which was first unveiled in 2019 and hit number 22 on the June 2019 Top500 list of the world’s fastest supercomputers. At the April GTC, the company rolled out a DGX SuperPod featuring BlueField-2 DPUs and Base Command, software enabling users to access, share and run their SuperPods and accelerate AI software development.

Nvidia and the National Energy Research Scientific Computing Center (NERSC) announced the operation of Perlmutter, a Hewlett Packard Enterprise-built AI supercomputer armed with 6,159 A100 Tensor Core GPUs that will deliver almost 4 exaflops of AI performance.

At this week’s Computex event, Nvidia is carrying forward with the focus on AI. The company sees the need to make it easier for organizations to adopt AI as the technology reaches deeper into enterprise datacenters.

“Nvidia has worked on artificial intelligence for quite some time and with the work that we have done and the progress we have made with a variety of customers, we are now both confident and comfortable that the next wave of democratization of AI is coming,” Manuvir Das, head of enterprise computing at Nvidia, said during a virtual press briefing, adding that the new offerings being announced at Computex and “the work we are doing with the ecosystem is really to get the ecosystem ready now to fully participate in this coming wave of the democratization of AI, where AI is utilized by every company on the planet rather than just adopters.”

The applications that enterprises use will continue to be infused with AI, with the user often unaware of any changes to the software, Das said. In addition, the ways organizations make and deliver their products will leverage AI, with manufacturing processes being automated and data used to learn what people are doing with those products and how they should be modified. Datacenters and their equipment also will evolve as AI becomes more commonplace, with the DPU playing an increasingly important role.

“Going forward, what we expect is servers that will be racked into datacenters will have a CPU, a GPU for these applications to run and a DPU to secure the datacenter and make it more efficient,” Das said.

Nvidia is rolling out a subscription model for DGX SuperPods, marking the latest evolution of the networked clusters. The company last year started allowing organizations to purchase modules through partners and in April brought the BlueField-2 DPUs on board. Das said enterprises now can rent modules through a subscription model, with Nvidia and storage vendor NetApp hosting the gear in Equinix datacenters.

The move mirrors what a growing number of OEMs are doing with their datacenter hardware. HPE, Dell Technologies, Cisco Systems and others have plans to make their entire portfolios available as a service via cloud-like consumption models. For Nvidia, access to the SuperPod modules will be through Base Command offered via Nvidia and NetApp, with NetApp bringing its data management capabilities.

The move will give enterprises that have yet to adopt AI an opportunity to work with it without having to spend a lot of money upfront. However, unlike what other systems makers are doing with their as-a-service initiatives, Nvidia is not offering the subscriptions indefinitely; rather, organizations will be able to rent the modules for a period of months.

“Our belief is if you are interested in the capability of a SuperPod and you want to use it for a significant amount of time, you should just buy one or lease one from one of our partners,” Das said. “This offering is really meant to be if you think you might be going down that path [to AI but] you’re not sure. To be honest, it’s a pretty big check you have to write when you procure a SuperPod, so we’re providing a way to let you experience the best of what it has to offer, with both the hardware and software that’s very easy for you to use, and you don’t have to worry about signing up for a minimum one year or something like that. You can use it for a short amount of time because we know that when you have this experience, you will realize how much value it brings to you and then you go on the next step of procuring. … This is not a demo service. We encourage customers to use this for their production requirements, just like they will on a regular SuperPod. It’s just that they can choose the window for how long they have the system for.”

Nvidia also noted that Amazon Web Services and Google Cloud later this year will make Base Command available on cloud GPU instances.

The GPU maker also said a host of new AI-optimized servers are coming out from systems makers including HPE, Dell, Lenovo, Asus, Gigabyte and Supermicro as part of the Nvidia Certified Systems program, where the company certifies that such servers are optimized to run Nvidia AI Enterprise – an integrated suite of Nvidia AI and data analytics software – on VMware’s vSphere platform and Nvidia’s Omniverse Enterprise design collaboration software. In addition, they will support Red Hat’s OpenShift to accelerate AI development and Cloudera’s data engineering and machine learning tools.

In addition, new systems from the likes of Dell, Gigabyte, Supermicro, Asus and QCT will come out this year featuring GPUs based on Nvidia’s Ampere architecture and BlueField-2 DPUs. The systems will be powered by x86 chips from Intel, but Das said that servers running on Arm-designed CPUs are due in 2022.

“If you think about workloads going forward, a lot of the compute for AI is hosted on the GPU and the hypervisor and the network can be hosted on the DPU,” he said. “That means that the CPU’s role becomes more data orchestrator, the brain of the machine rather than the computer. This really opens the door to Arm CPUs, which are very energy efficient. That’s a great combination where you use an energy-efficient, cost-effective CPU to go with the GPU and DPU. That’s the design point we see for AI systems going forward.”

Nvidia’s bet years ago that AI represented its best growth opportunity appears to be paying off. Now the company wants to leverage the hardware and software innovations it has made and partnerships with system OEMs to make it easier for enterprises to join the AI crowd.