The tech world this week gets its first look under the hood of the NVIDIA BlueField data processing unit. The chip invented the category of the DPU last year, and it’s already being embraced by cloud services, supercomputers and many OEMs and software partners.

Idan Burstein, a principal architect leading our Israel-based BlueField design team, will describe the DPU’s architecture at Hot Chips, an annual conference that draws many of the world’s top microprocessor designers.

The talk will unveil a silicon engine for accelerating modern data centers. It’s an array of hardware accelerators and general-purpose Arm cores that speed networking, security and storage jobs.

Those jobs include virtualizing data center hardware while securing and smoothing the flow of network traffic. It’s work that involves accelerating in hardware a growing alphabet soup of tasks fundamental to running a data center, such as:

- IPsec, TLS, AES-GCM, RegEx and Public Key Acceleration for security

- NVMe-oF, RAID and GPUDirect Storage for storage

- RDMA, RoCE, SR-IOV, VXLAN, VirtIO and GPUDirect RDMA for networking, and

- Offloads for video streaming and time-sensitive communications

These workloads are growing faster than Moore’s law and already consume a third of server CPU cycles. DPUs pack purpose-built hardware to run these jobs more efficiently, making more CPU cores available for data center applications.
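To put that one-third figure in perspective, here is a back-of-the-envelope sketch in Python. The 48-core server and the premise that all infrastructure work moves off the CPU are illustrative assumptions, not measured results; actual savings depend on the workload and on which tasks are offloaded.

```python
def cores_freed(total_cores: int, infra_fraction: float) -> float:
    """Return the CPU cores handed back to applications if the given
    fraction of cycles (infrastructure work) moves off the CPU."""
    if not 0.0 <= infra_fraction <= 1.0:
        raise ValueError("infra_fraction must be between 0 and 1")
    return total_cores * infra_fraction


# Hypothetical server size; one third is the share quoted in the text.
TOTAL_CORES = 48
INFRA_FRACTION = 1 / 3

freed = cores_freed(TOTAL_CORES, INFRA_FRACTION)
print(f"Cores freed for applications: {freed:.0f} of {TOTAL_CORES}")
```

Changing `TOTAL_CORES` or `INFRA_FRACTION` shows how the estimate scales with server size and with the share of cycles spent on infrastructure tasks.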

DPUs deliver virtualization and advanced security without compromising bare-metal performance. Their uses span the gamut from cloud computing and media streaming to storage, edge processing and high performance computing.

NVIDIA CEO Jensen Huang describes DPUs as “one of the three major pillars of computing going forward … The CPU is for general-purpose computing, the GPU is for accelerated computing and the DPU, which moves data around the data center, does data processing.”

A Full Plug-and-Play Stack

The good news for users is they don’t have to master the silicon details that may fascinate processor architects at Hot Chips. They can simply plug their existing software into familiar high-level software interfaces to harness the DPU’s power.

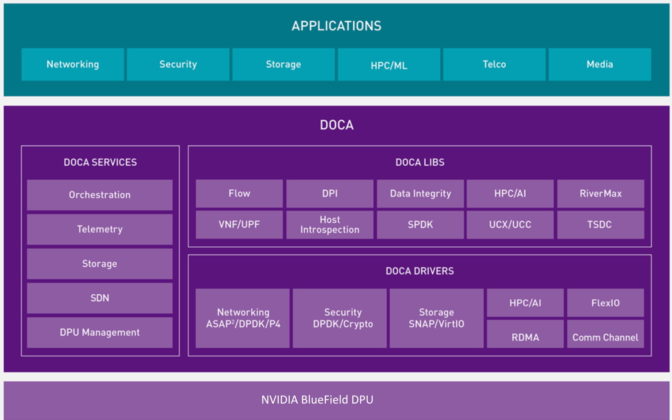

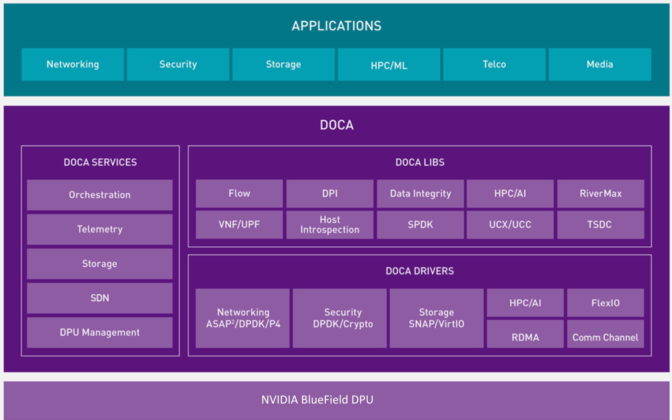

Those APIs are bundled into the DPU’s software stack called NVIDIA DOCA. It includes drivers, libraries, tools, documentation, example applications and a runtime environment for provisioning, deploying and orchestrating services on thousands of DPUs across the data center.

We’ve already received requests for early access to DOCA from hundreds of organizations, including several of the world’s industry leaders.

DPUs Deliver for Data Centers, Clouds

The architecture described at Hot Chips is moving into several of the world’s largest clouds and a TOP500 supercomputer, and it is being integrated with next-generation firewalls. It will soon be available in systems from several top OEMs, supported with software from more than a dozen other partners.

Today, multiple cloud service providers around the world are using or preparing to deploy BlueField DPUs to provision compute instances securely.

BlueField Powers Supercomputers, Firewalls

In June, the University of Cambridge tapped into the DPU’s efficiencies to debut the fastest academic system in the U.K., a supercomputer that hit No. 3 on the Green500 list of the world’s most energy-efficient systems.

It’s the world’s first cloud-native supercomputer, letting researchers share virtual resources with privacy and security without compromising performance.

With the VM-Series Next-Generation Firewall from Palo Alto Networks, every data center can now access the DPU’s security capabilities. The VM-Series NGFW can be accelerated with BlueField-2 to inspect network flows that were previously impossible or impractical to track.

The DPU will soon be available in systems from ASUS, Atos, Dell Technologies, Fujitsu, GIGABYTE, H3C, Inspur, Quanta/QCT and Supermicro, several of which announced plans at Computex in May.

More than a dozen software partners will support the NVIDIA BlueField DPUs, including:

- VMware, with Project Monterey, which introduces DPUs to the more than 300,000 organizations that rely on VMware for its speed, resilience and security.

- Red Hat, with an upcoming developer’s kit for Red Hat Enterprise Linux and Red Hat OpenShift, used by 95 percent of the Fortune 500.

- Canonical, in Ubuntu Linux, the most popular operating system among public clouds.

- Check Point Software Technologies, in products used by more than 100,000 organizations worldwide to prevent cyberattacks.

Other partners include Cloudflare, DDN, Excelero, F5, Fortinet, Guardicore, Juniper Networks, NetApp, Vast Data and WekaIO.

The support is broad because the opportunity is big.

“Every single networking chip in the world will be a smart networking chip … And that’s what the DPU is. It’s a data center on a chip,” said Colette Kress, NVIDIA’s CFO, in a May earnings call, predicting every server will someday sport a DPU.

DPU-Powered Networks on the Horizon

Market watchers at Dell’Oro Group forecast the number of smart networking ports shipped will grow by about two-thirds, from 4.4 million in 2020 to 7.4 million by 2025.
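For a sense of scale, the forecast figures above work out as follows. This is a minimal sketch that uses only the two numbers quoted; treating the growth as a steady compound rate over five years is a simplifying assumption, not part of the Dell’Oro forecast.

```python
# Forecast figures quoted in the text (millions of smart networking ports).
PORTS_2020 = 4.4
PORTS_2025 = 7.4
YEARS = 2025 - 2020

# Overall growth multiple across the forecast window.
growth_multiple = PORTS_2025 / PORTS_2020

# Implied compound annual growth rate, assuming steady year-over-year growth.
cagr = growth_multiple ** (1 / YEARS) - 1

print(f"Growth multiple: {growth_multiple:.2f}x")
print(f"Implied annual growth: {cagr:.1%}")
```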

Gearing up for that growth, NVIDIA announced at GTC its roadmap for the next two generations of DPUs.

The BlueField-3, sampling next year, will drive networks up to 400 Gbit/second and pack the muscle of 300 x86 cores. The BlueField-4 will deliver an order of magnitude more performance with the addition of NVIDIA AI computing technologies.

What’s clear from the market momentum and this week’s Hot Chips talk is that, just as it has in AI, NVIDIA is now setting the pace in accelerated networking.