Footage has emerged of the Tesla vehicle, allegedly on “Full Self-Driving,” that caused an eight-car pile-up in San Francisco in November.

It appears to show a classic case of phantom braking, but under Level 2 autonomy, the driver should still have responded.

In November, an eight-car pile-up on the San Francisco Bay Bridge made the news after it caused some minor injuries and blocked traffic for over an hour.

But the headline was that the car that caused it was reportedly a “Tesla on self-driving mode,” or at least that’s what the driver told the police.

Now, as we know, Tesla does not have a “self-driving mode.” It has something it calls the “Full Self-Driving package,” which now includes Full Self-Driving Beta, or FSD Beta.

FSD Beta enables Tesla vehicles to drive autonomously to a destination entered in the car’s navigation system, but the driver needs to remain vigilant and ready to take control at all times.

Since the responsibility rests with the driver and not Tesla’s system, it is still considered a Level 2 driver-assist system, despite its name.

Following the accident, the driver of the Tesla told the police that the vehicle was in “Full self-driving mode,” but the accident report shows that the police understood the nuances:

> P-1 stated V-1 was in Full self-driving mode at the time of the crash, I am unable to verify if V-1’s Full Self-Driving Capability was active at the time of the crash. On 11/24/2022, the latest Tesla Full Self-Driving Beta Version was 11 and is classified as SAE International Level 2. SAE International Level 2 is not classified as an autonomous vehicle. Under Level 2 classification, the human in the driver seat must constantly supervise support features including steering, braking, or accelerating as needed to maintain safety. If the Full Self-Driving Capability software malfunctioned, P-1 should of manually taken control of V-1 by over-riding the Full Self-Driving Capability feature.

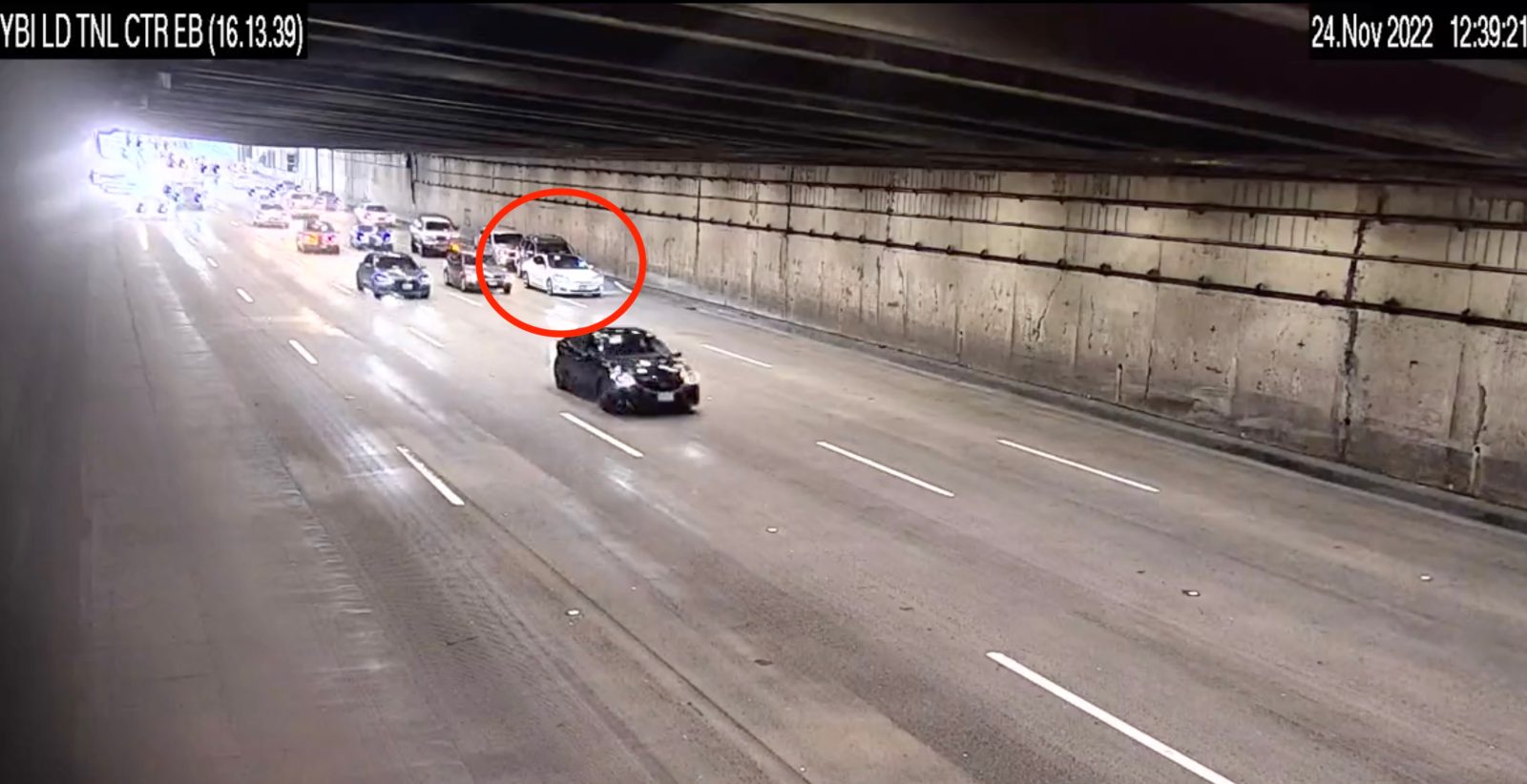

Now, The Intercept has obtained footage of the accident, and it clearly shows the Tesla vehicle abruptly coming to a stop for no apparent reason.

This phenomenon is often referred to as “phantom braking,” and it has been known to happen relatively frequently on Tesla Autopilot and FSD Beta.

Back in November of 2021, Electrek released a report called “Tesla has a serious phantom braking problem in Autopilot.” It highlighted a significant increase in Tesla owners reporting dangerous phantom braking events on Autopilot.

The issue was not new to Tesla’s Autopilot, but our report focused on an obvious increase in incidents noticed by Tesla drivers. That evidence was anecdotal, but it was backed by a clear increase in complaints to NHTSA. Our report was picked up by a few other outlets, but the issue didn’t really go mainstream until The Washington Post published a similar report in February 2022. A few months later, NHTSA opened an investigation into the matter.
