The AI bot is credited with authoring or co-authoring at least 200 books on Amazon’s storefront.

ChatGPT is listed as the author or co-author of at least 200 books on Amazon’s Kindle Store, according to Reuters. However, the actual number of bot-written books is likely much higher than that since Amazon’s policies don’t explicitly require authors to disclose their use of AI. It’s the latest example of AI-generated writing flooding the market and playing a part in ethically dubious content creation since the November release of OpenAI’s free tool.

“I could see people making a whole career out of this,” said Brett Schickler, a Rochester, NY salesman who published a children’s book on the Kindle Store. “The idea of writing a book finally seemed possible.” Schickler’s self-published story, The Wise Little Squirrel: A Tale of Saving and Investing, is a 30-page children’s story — written and illustrated by AI — selling for $2.99 for a digital copy and $9.99 for a printed version. Although Schickler says the book has earned him less than $100 since its January release, he only spent a few hours creating it with ChatGPT prompts like “write a story about a dad teaching his son about financial literacy.”

Other examples of AI-created content on the Kindle Store include children’s story The Power of Homework, a poetry collection called Echoes of the Universe and a sci-fi epic about an interstellar brothel, Galactic Pimp: Vol. 1.

“This is something we really need to be worried about, these books will flood the market and a lot of authors are going to be out of work,” said Mary Rasenberger, executive director of the Authors Guild. “There needs to be transparency from the authors and the platforms about how these books are created or you’re going to end up with a lot of low-quality books.”

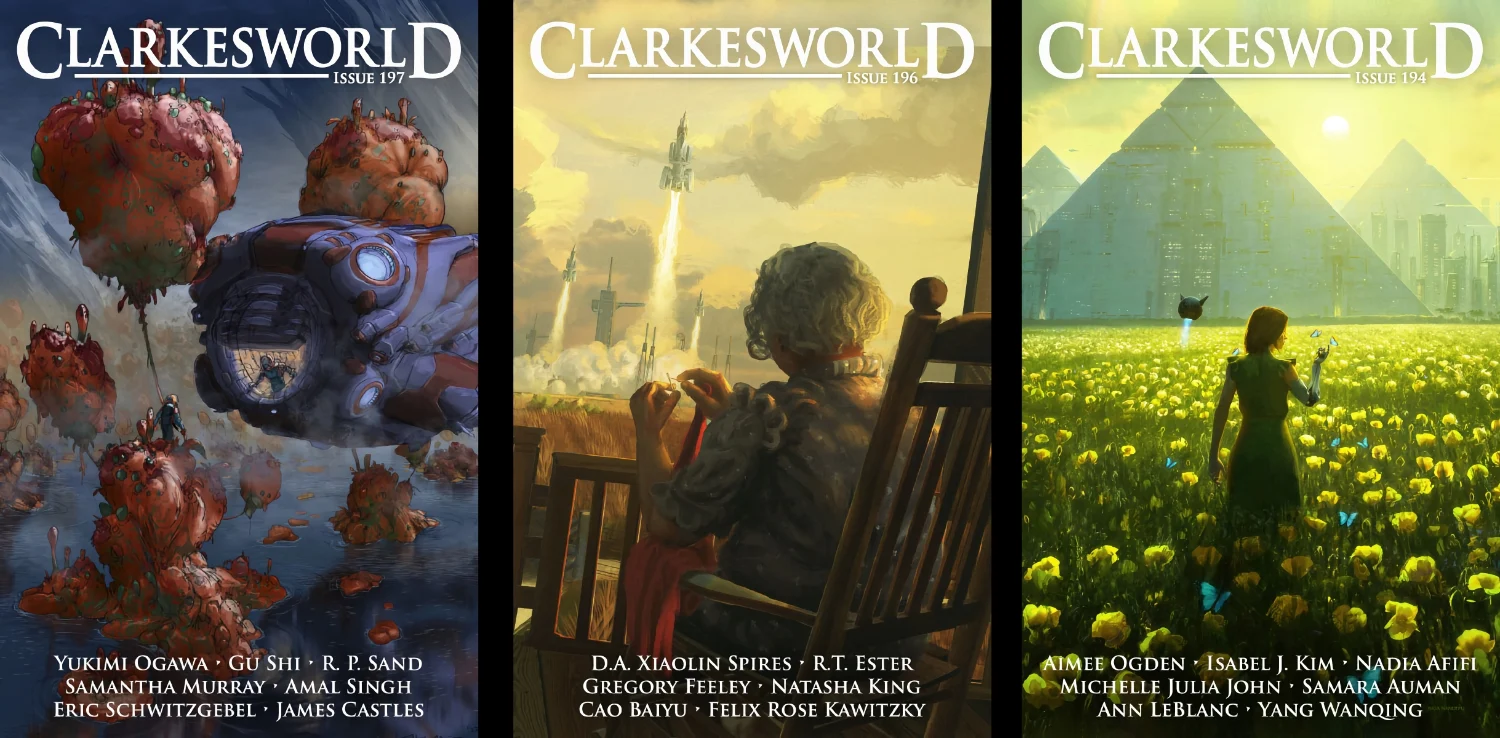

Clarkesworld

Meanwhile, science-fiction publication Clarkesworld Magazine has temporarily halted short-story submissions after receiving a flood of stories suspected of using AI without disclosure, as reported by PCMag. Editor Neil Clarke didn’t specify how he identified the (allegedly) bot-assisted stories, saying only that they showed “some very obvious patterns.” “What I can say is that the number of spam submissions resulting in bans has hit 38 percent this month,” he said. “While rejecting and banning these submissions has been simple, it’s growing at a rate that will necessitate changes. To make matters worse, the technology is only going to get better, so detection will become more challenging.”

Clarkesworld currently prohibits stories “written, co-written or assisted by AI,” and the publication has banned over 500 users this month for submitting suspected AI-assisted content. Clarkesworld pays 12 cents per word, making it a prime target. “From what I can tell, it’s not about credibility. It’s about the possibility of making a quick buck. That’s all they care about,” Clarke tweeted.


Apart from ethical issues about transparency, there are also questions of misinformation and plagiarism. For example, AI bots, including ChatGPT, Microsoft’s Bing AI and Google’s Bard, are prone to “hallucinating,” the term for confidently stating false information. Additionally, they’re trained on human-created content, almost always without the original author’s knowledge or permission, and sometimes reproduce phrasing identical to the source material.

Starting last year, tech publication CNET used an in-house AI model to write at least 73 economic explainers. Beyond the initially cagey disclosure, which revealed that an article was written by AI only if you clicked on the byline, the explainers contained numerous factual errors and phrasing nearly identical to other websites’ articles. As a result, CNET was forced to make extensive corrections and pause its use of the tool. However, one of its sister sites has already at least experimented with using it again.
