It’s also planning a business subscription that will exclude user data from training by default.

OpenAI is tightening up ChatGPT’s privacy controls. The company announced today that the AI chatbot’s users can now turn off their chat histories, preventing their input from being used as training data.

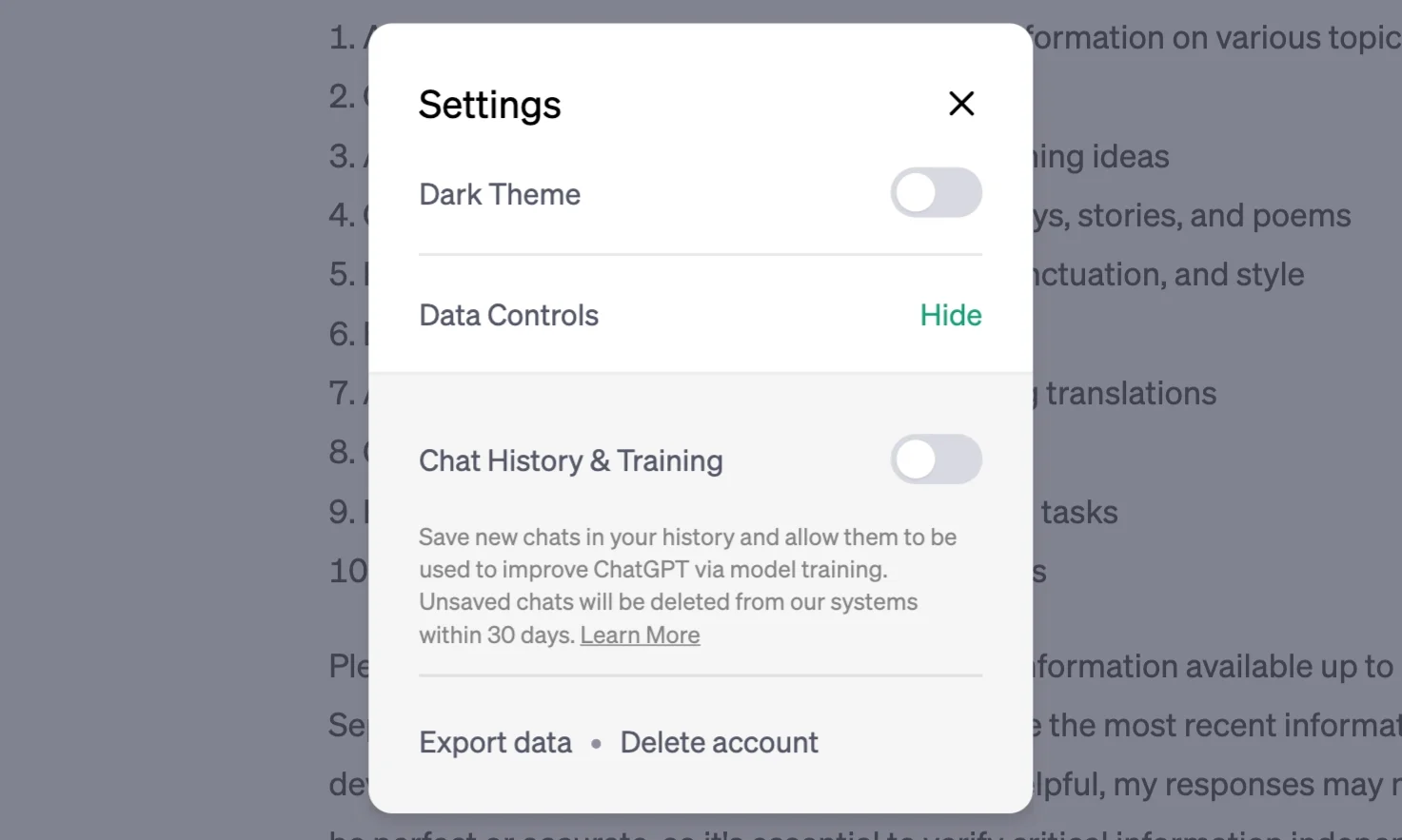

The controls, which roll out “starting today,” can be found in ChatGPT’s user settings, in a new section labeled Data Controls. After toggling the switch off for “Chat History & Training,” you’ll no longer see your recent chats in the sidebar.

Even with history and training turned off, OpenAI says it will still store your chats for 30 days. The company says it keeps them to prevent abuse and will review them only when needed to monitor for it. After 30 days, it says, the chats are permanently deleted.

OpenAI also announced an upcoming ChatGPT Business subscription in addition to its $20 / month ChatGPT Plus plan. The Business variant targets “professionals who need more control over their data as well as enterprises seeking to manage their end users.” The new plan will follow the same data-usage policies as OpenAI’s API, meaning it won’t use your data for training by default. The plan will become available “in the coming months.”

Finally, the startup announced a new export option that lets you email yourself a copy of the data it stores. OpenAI says this will not only allow you to move your data elsewhere but also help you understand what information it keeps.

Earlier this month, three Samsung employees were in the spotlight for leaking sensitive data to the chatbot, including recorded meeting notes. By default, OpenAI uses its customers’ prompts to train its models. The company urges its users not to share sensitive information with the bot, adding that it’s “not able to delete specific prompts from your history.” Given how quickly ChatGPT and other AI writing assistants blew up in recent months, it’s a welcome change for OpenAI to strengthen its privacy transparency and controls.