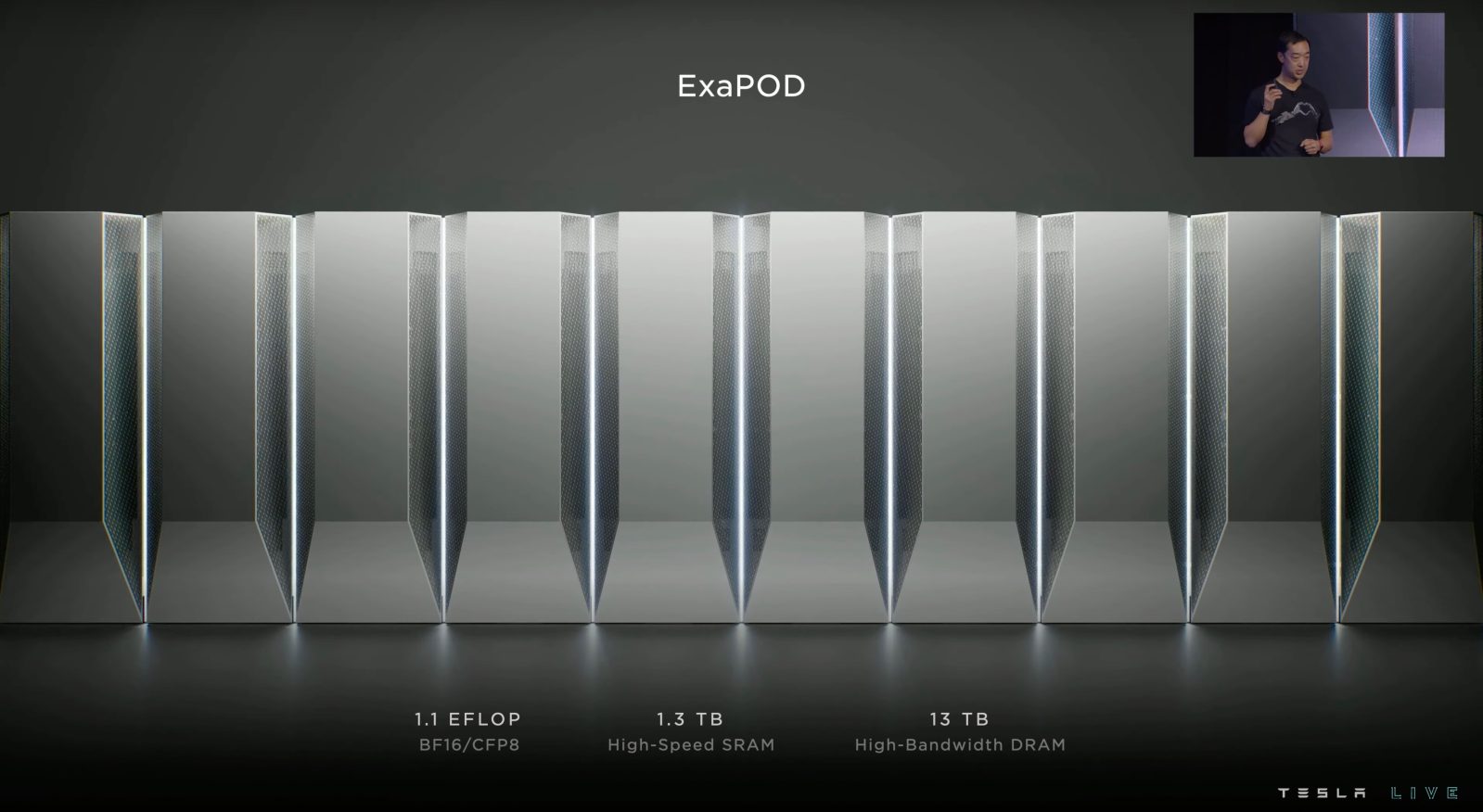

Elon Musk claims Tesla’s new AI supercluster will grow to over 500 MW, making it one of the biggest in the world, if not the biggest. At the same time, the CEO claims Tesla is achieving record-breaking performance with its next-gen AI chip.

A few months ago, we reported that Tesla was having issues building a new expansion at Gigafactory Texas to house a new giant supercomputer to train Tesla’s AI.

At the time, we heard that Tesla was aiming for a 100 MW cluster to be ready by August. Musk canceled other projects at Tesla to focus construction resources on the expansion.

Commenting on drone videos of the expansion, Musk said that it will grow to over 500 MW over the next 18 months:

> Sizing for ~130MW of power & cooling this year, but will increase to >500MW over next 18 months or so. Aiming for about half Tesla AI hardware, half Nvidia/other. Play to win or don’t play at all.

We previously noted that it was strange that Tesla was internally referring to the project as a Dojo project, a name associated with Tesla’s own supercomputing hardware, even though our sources said the cluster would use Nvidia compute power.

Now, Musk has confirmed that Tesla plans to use both its own hardware and Nvidia’s, as well as hardware from other suppliers.

However, things are getting a little unclear, as Musk also seems to imply that Tesla will use some of its HW4 computers for the training clusters:

HW4 generally refers to Tesla’s in-car computer, which is built around an in-house designed chip, whereas Dojo refers to Tesla’s training hardware, like this new cluster.

It’s unclear whether Musk is talking about using inference compute for training or simply referring to Tesla’s overall planned computing power.

Electrek’s Take

Elon mentioned at Tesla’s shareholder meeting that the company now had Nvidia-level AI chips, but the stock didn’t even move on that announcement, even as Nvidia became the most valuable company in the world.

I think Tesla’s AI effort is still not super credible to the market. That’s what happens when you’ve claimed every year for the past five years that you’re about to achieve self-driving by the end of the year.

At this point, we need to see Tesla make significant improvements to FSD with each new update. It sounded like this new cluster would help achieve that, but Elon also recently said that Tesla is not compute-constrained for training right now, so it’s hard to understand what is holding up improvements.

FTC: We use income earning auto affiliate links.