Businesses seeking to harness the power of AI need customized models tailored to their specific industry needs.

NVIDIA AI Foundry is a service that enables enterprises to use data, accelerated computing and software tools to create and deploy custom models that can supercharge their generative AI initiatives.

Just as TSMC manufactures chips designed by other companies, NVIDIA AI Foundry provides the infrastructure and tools for other companies to develop and customize AI models. These include DGX Cloud, foundation models, NVIDIA NeMo software, NVIDIA expertise, and ecosystem tools and support.

The key difference is the product: TSMC produces physical semiconductor chips, while NVIDIA AI Foundry helps create custom models. Both enable innovation and connect to a vast ecosystem of tools and partners.

Enterprises can use AI Foundry to customize NVIDIA and open community models, including the new Llama 3.1 collection, as well as NVIDIA Nemotron, CodeGemma by Google DeepMind, CodeLlama, Gemma by Google DeepMind, Mistral, Mixtral, Phi-3, StarCoder2 and others.

Industry Pioneers Drive AI Innovation

Industry leaders Amdocs, Capital One, Getty Images, KT, Hyundai Motor Company, SAP, ServiceNow and Snowflake are among the first to use NVIDIA AI Foundry. These pioneers are setting the stage for a new era of AI-driven innovation in enterprise software, technology, communications and media.

“Organizations deploying AI can gain a competitive edge with custom models that incorporate industry and business knowledge,” said Jeremy Barnes, vice president of AI Product at ServiceNow. “ServiceNow is using NVIDIA AI Foundry to fine-tune and deploy models that can integrate easily within customers’ existing workflows.”

The Pillars of NVIDIA AI Foundry

NVIDIA AI Foundry is supported by the key pillars of foundation models, enterprise software, accelerated computing, expert support and a broad partner ecosystem.

Its software includes AI foundation models from NVIDIA and the AI community as well as the complete NVIDIA NeMo software platform for fast-tracking model development.

The computing muscle of NVIDIA AI Foundry is NVIDIA DGX Cloud, a network of accelerated compute resources co-engineered with the world’s leading public clouds — Amazon Web Services, Google Cloud and Oracle Cloud Infrastructure. With DGX Cloud, AI Foundry customers can develop and fine-tune custom generative AI applications with unprecedented ease and efficiency, and scale their AI initiatives as needed without significant upfront investments in hardware. This flexibility is crucial for businesses looking to stay agile in a rapidly changing market.

If an NVIDIA AI Foundry customer needs assistance, NVIDIA AI Enterprise experts are on hand to help. NVIDIA experts can walk customers through each of the steps required to build, fine-tune and deploy their models with proprietary data, ensuring the models tightly align with their business requirements.

NVIDIA AI Foundry customers have access to a global ecosystem of partners that can provide a full range of support. Accenture, Deloitte, Infosys and Wipro are among the NVIDIA partners that offer AI Foundry consulting services covering the design, implementation and management of AI-driven digital transformation projects. Accenture is the first to launch its own AI Foundry-based offering for custom model development, the Accenture AI Refinery framework.

Additionally, service delivery partners such as Data Monsters, Quantiphi, Slalom and SoftServe help enterprises navigate the complexities of integrating AI into their existing IT landscapes, ensuring that AI applications are scalable, secure and aligned with business objectives.

Customers can develop NVIDIA AI Foundry models for production using AIOps and MLOps platforms from NVIDIA partners, including Cleanlab, Datadog, Dataiku, Dataloop, DataRobot, Domino Data Lab, Fiddler AI, New Relic, Scale and Weights & Biases.

Customers can output their AI Foundry models as NVIDIA NIM inference microservices — which include the custom model, optimized engines and a standard API — to run on their preferred accelerated infrastructure.
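To make the idea of a "standard API" more concrete, here is a minimal client-side sketch. It assumes the deployed microservice exposes an OpenAI-compatible chat-completions route, which is how NIM microservices for LLMs are commonly accessed; the base URL, model name and helper function names below are hypothetical placeholders, not values from this article.

```python
import json
import urllib.request

# Hypothetical values: replace with the address and model name of your own deployment.
NIM_BASE_URL = "http://localhost:8000/v1"
MODEL_NAME = "my-org/custom-llama-3.1"


def build_chat_request(prompt: str, max_tokens: int = 128) -> urllib.request.Request:
    """Build (but do not send) a chat-completion request for the endpoint above."""
    payload = {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{NIM_BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the text of the first completion.

    Assumes the response follows the OpenAI-style schema
    (choices -> message -> content).
    """
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Keeping request construction separate from sending means the same payload can be pointed at any infrastructure that hosts the microservice by changing only the base URL.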

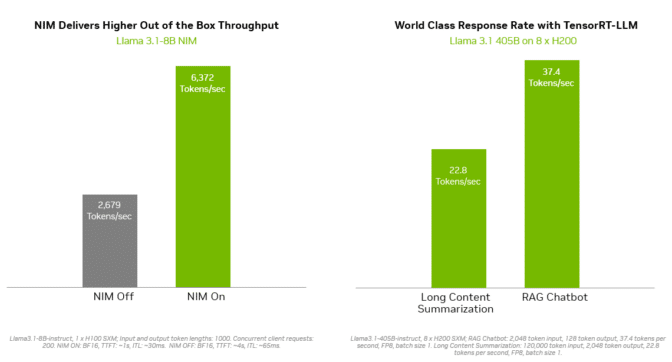

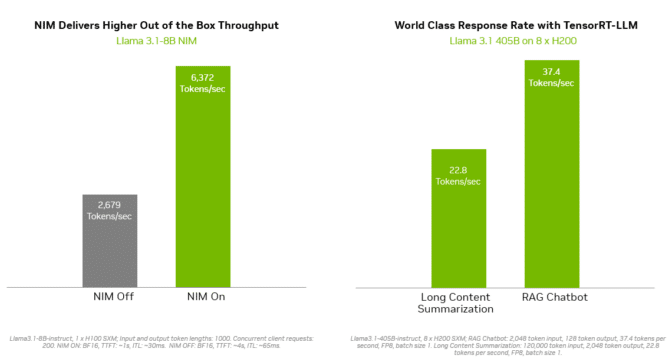

Inferencing solutions like NVIDIA TensorRT-LLM deliver improved efficiency for Llama 3.1 models to minimize latency and maximize throughput. This enables enterprises to generate tokens faster while reducing the total cost of running the models in production. Enterprise-grade support and security are provided by the NVIDIA AI Enterprise software suite.

The broad range of deployment options includes NVIDIA-Certified Systems from global server manufacturing partners including Cisco, Dell Technologies, Hewlett Packard Enterprise, Lenovo and Supermicro, as well as cloud instances from Amazon Web Services, Google Cloud and Oracle Cloud Infrastructure.

Additionally, Together AI, a leading AI acceleration cloud, today announced it will enable its ecosystem of over 100,000 developers and enterprises to use its NVIDIA GPU-accelerated inference stack to deploy Llama 3.1 endpoints and other open models on DGX Cloud.

“Every enterprise running generative AI applications wants a faster user experience, with greater efficiency and lower cost,” said Vipul Ved Prakash, founder and CEO of Together AI. “Now, developers and enterprises using the Together Inference Engine can maximize performance, scalability and security on NVIDIA DGX Cloud.”

NVIDIA NeMo Speeds and Simplifies Custom Model Development

With NVIDIA NeMo integrated into AI Foundry, developers have at their fingertips the tools needed to curate data, customize foundation models and evaluate performance. NeMo technologies include:

- NeMo Curator is a GPU-accelerated data-curation library that improves generative AI model performance by preparing large-scale, high-quality datasets for pretraining and fine-tuning.

- NeMo Customizer is a high-performance, scalable microservice that simplifies fine-tuning and alignment of LLMs for domain-specific use cases.

- NeMo Evaluator provides automatic assessment of generative AI models across academic and custom benchmarks on any accelerated cloud or data center.

- NeMo Guardrails orchestrates dialog management, providing safeguards that support accuracy, appropriateness and security in applications built on large language models.

Using the NeMo platform in NVIDIA AI Foundry, businesses can create custom AI models that are precisely tailored to their needs. This customization allows for better alignment with strategic objectives, improved accuracy in decision-making and enhanced operational efficiency. For instance, companies can develop models that understand industry-specific jargon, comply with regulatory requirements and integrate seamlessly with existing workflows.

“As a next step of our partnership, SAP plans to use NVIDIA’s NeMo platform to help businesses accelerate AI-driven productivity powered by SAP Business AI,” said Philipp Herzig, chief AI officer at SAP.

Enterprises can deploy their custom AI models in production with NVIDIA NeMo Retriever NIM inference microservices. These help developers fetch proprietary data to generate knowledgeable responses for their AI applications with retrieval-augmented generation (RAG).
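The retrieve-then-generate pattern behind RAG can be illustrated with a toy sketch. This is not the NeMo Retriever API: it swaps in simple word overlap for the embedding-based retrieval a production system would use, and all function names are hypothetical. It shows only the general flow of fetching relevant passages and placing them in the prompt sent to the model.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def overlap_score(query: str, document: str) -> int:
    """Number of tokens shared by query and document.

    Repeated tokens count up to the smaller of the two frequencies.
    """
    shared = Counter(tokenize(query)) & Counter(tokenize(document))
    return sum(shared.values())


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents with the highest overlap score."""
    ranked = sorted(documents, key=lambda doc: overlap_score(query, doc), reverse=True)
    return ranked[:k]


def build_prompt(query: str, passages: list[str]) -> str:
    """Place the retrieved passages ahead of the question in a single prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )
```

In use, the output of `build_prompt(query, retrieve(query, documents))` would be sent to the custom model, so the answer is grounded in the enterprise's own data rather than only in what the model learned during training.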

“Safe, trustworthy AI is a non-negotiable for enterprises harnessing generative AI, with retrieval accuracy directly impacting the relevance and quality of generated responses in RAG systems,” said Baris Gultekin, head of AI at Snowflake. “Snowflake Cortex AI leverages NeMo Retriever, a component of NVIDIA AI Foundry, to further provide enterprises with easy, efficient, and trusted answers using their custom data.”

Custom Models Drive Competitive Advantage

One of the key advantages of NVIDIA AI Foundry is its ability to address the unique challenges faced by enterprises in adopting AI. Generic AI models can fall short of meeting specific business needs and data security requirements. Custom AI models, on the other hand, offer superior flexibility, adaptability and performance, making them ideal for enterprises seeking to gain a competitive edge.

Learn more about how NVIDIA AI Foundry allows enterprises to boost productivity and innovation.