Tesla’s Full Self-Driving (FSD) v14, its first major update in a year, disappoints as data points to a smaller increase in miles between disengagements than expected.

The system also features new hallucinations, brake stabbing, and excessive speeding.

Earlier this month, Tesla began rolling out its Full Self-Driving (FSD) v14 software update to some customers.

The update has been highly anticipated for several reasons.

First off, it has been a year since Tesla released any significant FSD update to customers, as it focused on its internal robotaxi fleet in Austin. The update is believed to feature improvements developed through that robotaxi fleet, which, like consumer FSD, still requires supervision.

Secondly, CEO Elon Musk has claimed that Tesla still plans for “Supervised Full Self-Driving” to become unsupervised by the end of the year in consumer vehicles. For that to happen, we needed to see a massive improvement from v13 to v14.

As I previously reported, I anticipated an improvement in miles between critical disengagements from ~400 miles in v13 to ~800 to 1,200 miles in v14. It would be a significant improvement, but still way short of what’s needed to make FSD unsupervised.

Tesla notoriously doesn’t release any data about its FSD program. Musk has literally told people to rely on anecdotal experiences posted on social media to gauge progress.

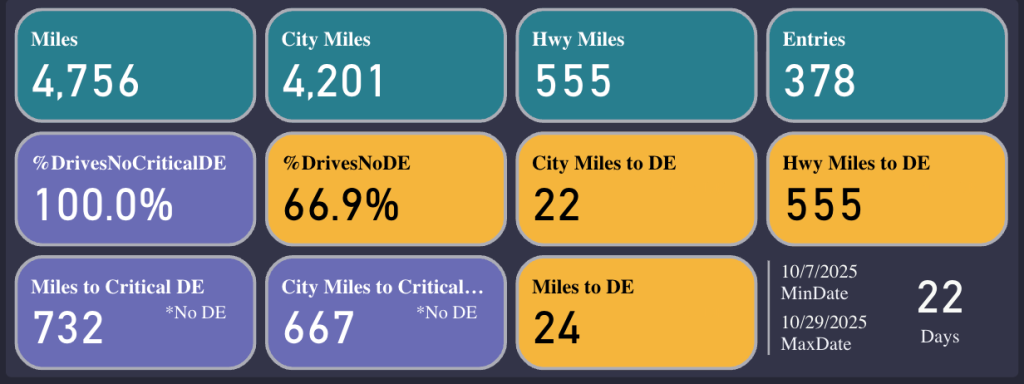

Fortunately, there’s a crowdsourced dataset that gives us some data to track progress on miles between critical disengagements. It’s far from perfect, but it is literally the best data available, and Musk himself has shared the dataset in the past – albeit while misrepresenting it.

In the last week, Tesla started pushing the FSD v14 update (now v14.1.4) to more owners – resulting in more crowdsourced data and anecdotal evidence.

With over 4,000 miles of FSD v14 data now logged, miles between critical disengagements sits at about 732 miles – below the lower end of our expectations.

Tesla would need to be closer to 10,000 miles between critical disengagements to allow unsupervised operation, and even then, it would likely be in geo-fenced areas with speed limitations.
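To put those figures in perspective, here is a rough back-of-the-envelope sketch using only the numbers quoted in this article. The variable and function names are my own, the 4,000-mile figure is treated as a floor since the dataset has "over" that amount, and the implied event count is my inference rather than something the dataset reports directly.

```python
# Figures quoted in the article; names here are hypothetical, for illustration only.
V13_MILES_PER_DISENGAGEMENT = 400   # approximate v13 figure
V14_MILES_PER_DISENGAGEMENT = 732   # crowdsourced v14 figure so far
V14_MILES_LOGGED = 4_000            # "over 4,000 miles", used as a lower bound
UNSUPERVISED_TARGET = 10_000        # rough threshold for unsupervised operation


def ratio(old: float, new: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old


# How much better v14 looks than v13 on this metric.
gain_over_v13 = ratio(V13_MILES_PER_DISENGAGEMENT, V14_MILES_PER_DISENGAGEMENT)

# How much further v14 would have to improve to hit the rough target.
gap_to_target = ratio(V14_MILES_PER_DISENGAGEMENT, UNSUPERVISED_TARGET)

# Assumption: the average is simply miles logged divided by critical events,
# so this backs out roughly how many events the sample contains.
implied_events = V14_MILES_LOGGED / V14_MILES_PER_DISENGAGEMENT

print(f"v13 -> v14 gain: {gain_over_v13:.2f}x")
print(f"v14 -> target gap: {gap_to_target:.1f}x")
print(f"implied critical disengagements in sample: {implied_events:.1f}")
```

On these numbers, v14 is a bit under a 2x improvement over v13, it would need to improve by more than 13x again to reach the rough threshold, and the average rests on only about five or six critical events, which is why the figure could still move a lot as more data comes in.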

This is unlikely to happen by the end of the year, as Musk predicted, as FSD v14 appears to have some significant issues still.

First off, many FSD v14 drivers are reporting hallucinations in which the car decides, seemingly at random, to stop on the side of the road.

It does seem like FSD v14 sometimes misinterprets other vehicles’ turn signals as emergency vehicle lights and pulls over.

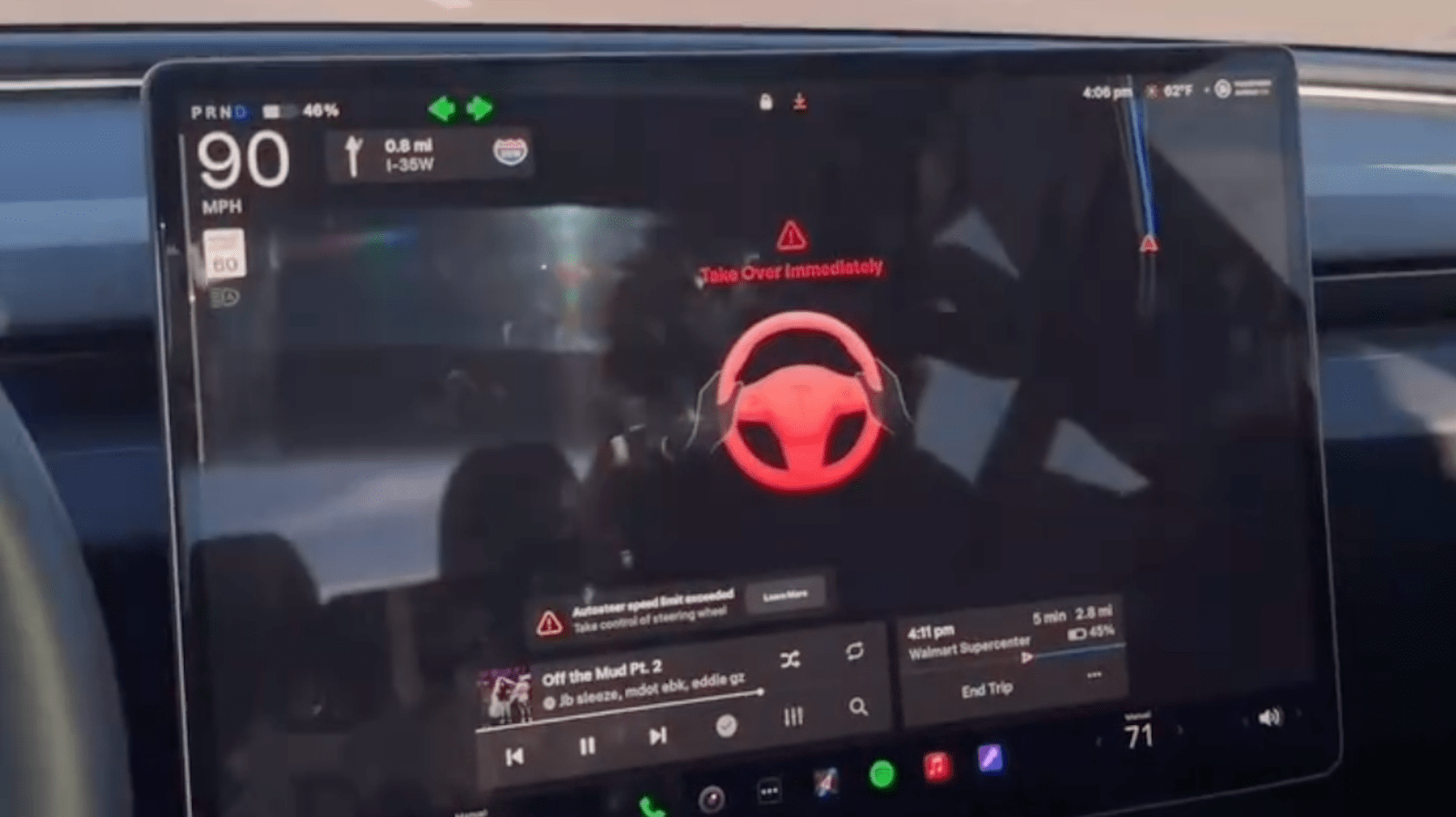

In some cases, FSD v14 has been known to completely disable FSD features inside vehicles.

Many FSD v14 drivers have also reported an increase in “brake stabbing”, where the vehicle seems to hesitate and frantically applies and releases the brakes, resulting in a stabbing motion.

As previously reported, Tesla also brought back its ‘Mad Max’ mode in FSD v14, which allows the car to drive well over the speed limit.

Electrek’s Take

Now, I don’t want to hear anything about my use of anecdotal evidence and crowdsourced data. That’s literally the best data available for FSD.

Unlike virtually all other companies developing self-driving technology, Tesla refuses to release any data of its own.

If it were to release some data, I’d be happy to use it.

One thing is clear from v14 so far: unsupervised FSD in consumer vehicles is not happening in any meaningful way this year.

I expect significant improvements in upcoming FSD v14 point updates. Maybe enough to get it to my previous expectations of ~800 to 1,200 miles between disengagements, but that’s about it.

Finally, while I generally don’t count on NHTSA to enforce any rule in any significant way when it comes to Tesla’s “Full Self-Driving” effort, I think they might actually do something about “Mad Max.”

This video on Instagram has 4.5 million views, and it shows extremely dangerous driving behavior at up to 90 mph (145 km/h).

Even if this video exaggerates the driving behavior, Tesla FSD on Mad Max has been clocked exceeding speed limits by wide margins on several occasions.

I think the authorities will have to intervene here, because it makes no sense for an unproven autonomous driving system to be able to operate under those parameters.
