The billions of devices expected to proliferate in the coming years at the “edge” of networks, such as autonomous vehicles and embedded Internet-of-Things gadgets, present manufacturers with a quandary: they want to add smarts to the devices via machine learning, but they can’t know exactly what to add until they test their neural networks and see what works out there in the marketplace.

Coming to the rescue, or so it contends, is Efinix, a six-year-old startup based in Santa Clara. The company has been refining the art of programmable chips, and it now says its customers can use its parts first to test a market for AI and then, once the right neural nets are developed, to mass-produce chips that serve those nets.


The company’s chief executive, Sammy Cheung, took some time to talk with ZDNet about Efinix’s technology on the sidelines of last week’s Linley Group Fall Processor Conference, hosted by the venerable semiconductor analysis firm of the same name.

“A customer of mine is a camera company from Taiwan,” explains Cheung. “Each model of connected camera that they design and sell may initially only be tens of thousands of units.”

“They didn’t know how to go after a market for products with millions of units; now they can go after something much higher volume.”

The task of AI on these edge devices is “inference,” the part of machine learning in which a neural net applies what it learned during the training phase to deliver answers to new problems. While Nvidia’s GPU chips dominate the training phase of machine learning in the data center, battery-powered devices out in the wild, at the edge, need low-power chips that perform inference very efficiently.

The market for inference chips in edge computing is swelling with competitors. Two others, Cornami and Flex Logix, have been covered by ZDNet recently.

Efinix’s solution is a technology called “Quantum,” which combines FPGAs (field-programmable gate arrays), chips whose circuitry can be reprogrammed, with ASICs (application-specific integrated circuits), chips whose wiring is fixed at manufacturing time.


Cheung’s proposition is that vendors such as the connected-camera maker can start out with Efinix’s FPGAs to try different neural nets, changing the circuitry as the networks evolve. Once a vendor is comfortable with its design, it can move to the company’s Quantum combo chip, which uses both FPGA and ASIC circuits, and get greater performance for its finished design.

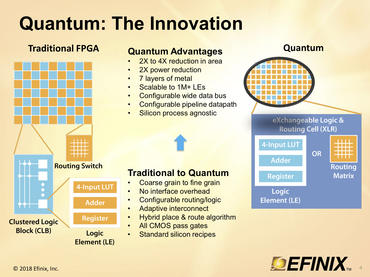

Efinix claims superior performance within tight power budgets for its FPGA chips, relative to those of Intel and Xilinx, in neural network applications. This graphic explains the main aspects that the company says set its technology apart.

Efinix Inc.

FPGAs have become an increasingly popular approach for machine learning: Microsoft uses them for the “Brainwave” neural net project that powers many of its cloud-based services such as Bing and Cortana. The FPGA market has been dominated for years by Intel and Xilinx: Microsoft builds Brainwave on an Intel part.

But both Altera, which Intel acquired in 2015, and Xilinx have ignored the emerging edge market. They are building huge chips that demand tens of watts, whereas Efinix is aiming at devices that may have only one watt of power available for neural network acceleration. “Altera and Xilinx classically focused on high-end markets,” says Cheung. “They were never able to go mainstream.”

FPGAs have classically been a costly approach, but Cheung says development costs with Efinix’s parts are “much lower” than for traditional FPGAs, and only about 25% higher than the cost of making an ASIC, which has traditionally been the cheaper option.


The key to the Quantum technology, as Cheung explained at the conference, lies in the thousands of compute “elements” that make up the chip. A traditional FPGA has clusters of compute that perform the “multiply-accumulate” math a neural net requires, surrounded by wires that “route” signals between the clusters. But that design, laid out like a checkerboard, ends up producing traffic jams as data travels from one part of the chip to another.
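To make “multiply-accumulate” concrete, here is a minimal Python sketch of one fully connected neural-net layer computed with explicit multiply-accumulate (MAC) steps. It is a generic illustration, not Efinix’s design; the function names, the weight layout and the ReLU activation are assumptions chosen for the example. Each `acc += x * w` line is one MAC, the operation that FPGA compute clusters are built to carry out in hardware, many at a time.

```python
from typing import List


def dense_layer(inputs: List[float], weights: List[List[float]], biases: List[float]) -> List[float]:
    """One fully connected layer computed with explicit multiply-accumulate steps.

    Hypothetical layout: weights[j][i] connects input i to output j.
    """
    outputs = []
    for row, bias in zip(weights, biases):
        if len(row) != len(inputs):
            raise ValueError("each weight row must match the number of inputs")
        acc = bias  # the accumulator starts at the bias value
        for x, w in zip(inputs, row):
            acc += x * w  # one multiply-accumulate (MAC) operation
        outputs.append(max(acc, 0.0))  # ReLU activation clips negatives to zero
    return outputs


def count_macs(n_inputs: int, n_outputs: int) -> int:
    """A dense layer needs one MAC per input/output pair."""
    return n_inputs * n_outputs


print(dense_layer([1.0, 2.0], [[0.5, -1.0], [2.0, 0.25]], [0.0, 1.0]))
```

Even this toy two-by-two layer needs four MACs; real vision networks need millions per frame, which is why chips that do this math in parallel, at low power, matter at the edge.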

In the case of Efinix’s part, each cluster can perform either the compute or the routing function. That means areas of the chip can be redeployed from crunching numbers to moving data as needed, like opening new avenues in a city’s street plan, which relieves the data traffic jams.
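The payoff of interchangeable clusters can be illustrated with a deliberately simplified Python model. This is not how Efinix’s Quantum fabric is actually configured or scheduled: the functions, the tile counts, and the assumption that a job takes as long as whichever of its compute or data movement is slower are all hypothetical. A fixed chip splits its tiles half and half; a flexible one picks the split that best fits the workload.

```python
from math import ceil
from typing import Tuple


def bottleneck_cycles(compute_work: int, data_moves: int,
                      compute_tiles: int, routing_tiles: int) -> int:
    """Toy model: a job finishes when the slower of compute or routing finishes."""
    if compute_tiles <= 0 or routing_tiles <= 0:
        raise ValueError("need at least one tile of each kind")
    compute_cycles = ceil(compute_work / compute_tiles)
    routing_cycles = ceil(data_moves / routing_tiles)
    return max(compute_cycles, routing_cycles)


def best_split(total_tiles: int, compute_work: int, data_moves: int) -> Tuple[int, int]:
    """Try every split of interchangeable tiles; return (compute_tiles, cycles) for the fastest."""
    if total_tiles < 2:
        raise ValueError("need at least two tiles")

    def cycles_for(compute_tiles: int) -> int:
        return bottleneck_cycles(compute_work, data_moves,
                                 compute_tiles, total_tiles - compute_tiles)

    best = min(range(1, total_tiles), key=cycles_for)  # first split with the fewest cycles
    return best, cycles_for(best)


# A compute-heavy job on a hypothetical 100-tile chip.
fixed = bottleneck_cycles(6000, 2000, 50, 50)
flexible = best_split(100, 6000, 2000)
print(fixed, flexible)
```

In this toy case the fixed 50/50 layout needs 120 cycles, while shifting tiles to compute (75 compute, 25 routing) cuts it to 80. A data-heavy job, such as `best_split(100, 2000, 6000)`, swings the other way, to 25 compute tiles and 75 routing tiles.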

Among chip startups, which customarily get $100 million or more in backing, the 40-person Efinix runs a tight ship. To date, the company has raised just under $26 million, including money from Samsung Electronics, across a Series A round led by angel investors, a Series B last year, and a $9.5 million bridge financing this year.

“We shocked the bankers by only raising $26 million,” says Cheung with a smile.

Cheung thinks Efinix has a shot at turning its losses into break-even next year.
