Self-driving Engineer Training Base: Get Hands-On with Real Self-driving Vehicles, Cohort II

Nancy Lee, Dec 27

Self-driving Engineer Training Base welcomed its second cohort of engineers this week. They attended lectures on Autoware, completed a traffic light recognition challenge, got hands-on with real self-driving cars, debugged code and got the cars moving, and along the way ate, shared and had fun. Let’s take a look at what happened over the five days from Dec. 16 to Dec. 20, 2019.

Training Base Overview and Cohort II Recap

Dedicated to cultivating talent for the autonomous driving industry, Self-driving Engineer Training Base is a hands-on training program launched jointly by PIX, Udacity and Tier IV. Through the onsite training and the accompanying series of courses, participants experience the fun of engineering and road testing by running their own algorithms on real cars, and deepen their grasp of the principles and applications of autonomous driving through hands-on practice. Autonomous driving is an industry with a great demand for talent. Many engineers enter the field by changing careers from related industries such as robotics, automation and AI, so they often lack experience in running algorithms on real vehicles and know little about vehicle control and hardware. The onsite training courses equip participants with the relevant skills and knowledge of autonomous driving.

Cohort I was held successfully in September 2019, and this week the second cohort’s onsite training has drawn to a satisfying close as well. A quick look at the numbers: 5 days, 3 teams, 3 self-driving cars, 4 training lectures, and 1 challenge. Participants came from Hangzhou, Shanghai, Beijing, Wuhan and elsewhere across China. All were students of Udacity’s online Self-driving Engineer Nanodegree course, with varied backgrounds in related fields: a doctor from the University of Sheffield, a graduate student from NYU, engineers from Aptiv, developers from China Mobile and the smart robotics company Sineva, and engineers from car companies such as Ruichi Electric Car, Borgward and Dongfeng Motor. Over the five days they experienced the full autonomous driving process from software to hardware in a spirit of exploration and creation: learning the background, framework and core technologies of self-driving; deploying software and systems; using Autoware for map building, data processing and path planning; and optimizing code and training models. By completing the Ultimate Challenge, a traffic light “Start & Stop” task on real roads, the participating engineers worked through a systematic approach to self-driving.

Group photo from Self-driving Engineer Training Base Cohort II

Five Day Onsite Training Recap

Digging into the details of the five days, one by one

Day #1

The first day was mainly an introduction to self-driving: its background, development, framework and core technologies. Participants also learned how to set up GPU drivers, install the GPU build of TensorFlow, and configure OpenCV, Autoware and other essential packages.

To create a cozy environment in which it was easy to get up to speed on the Sunday morning (the first day of onsite training), participants gathered in a spacious hotel suite for the introduction session that kicked off the fireside chats and the onsite training.

Mentor gave brief introduction of the five-day agenda

Lunch in winter sunshine

Participants got to know each other and formed three teams in a cheerful atmosphere. In the afternoon, everyone headed to the new factory to deploy software and get familiar with the vehicle hardware.

Modular working space at PIX for teams

Excited to be in the new factory, they couldn’t wait to get into the cars. Each team chose a self-driving car as its study subject, and would spend most of the next few days on it, even late into the night. That same afternoon, the participants came up with cool names for their teams:

Sub-Zero Team: The name originates from Top Gear’s Cool Wall. Top Gear is a fast-paced, stunt-filled motor show that tests whether cars live up to their manufacturers’ claims. The show grew popular worldwide with its talented and humorous hosts Clarkson and Hammond, who introduced the Cool Wall, on which they sorted cars into the categories “Seriously Uncool”, “Uncool”, “Cool” and “Sub Zero”. Sub-Zero cars are the super cool, badass ones. Could self-driving cars be any less cool?

Triple S Team: Smart, Superfast and Self-driving make up the triple S, which together add up to the top priority: “Safety”. In Chinese, the three Ss also stand for “溜溜溜”, which is pronounced similarly to “666”, one of the most popular internet phrases in China for saying that something is awesome, fantastic or a great job.

Tur(n)ing Team: Turing was a great English mathematician, computer scientist, logician and philosopher, a brilliant pioneer of theoretical computer science who formalized the concepts of algorithm and computation with the Turing machine. Turing is an icon of computer science, artificial intelligence and adventure, and when Turing meets self-driving, Turning happens. A Tur(n)ing for great changes. A Tur(n)ing for a visionary future powered by autonomous driving technology. A Tur(n)ing for the early pioneers and adventurers in this industry.

Ingenious names.

Team and their self-driving car

Team and their self-driving car

On the first day, some teams followed the instructions and some did not, which was fine because students were encouraged to discover and try new approaches for themselves. Experimentation and exploration lead to more inspiration and practical learning. During the afternoon’s software and system deployment session, two teams ran into configuration errors caused by mismatched TensorFlow versions. One team failed to compile Autoware, which turned out to be a problem with the GPU driver. Most issues came from skipped steps or key instructions that were not followed. Every team hit some difficulty during the first day, but all of them eventually caught up with the agenda and achieved the objectives of Day 1.

Day #2

The main task of Day 2 was collecting traffic light data on the test site and labelling it. In the morning, Prof. Alex gave a thorough introduction to the Autoware framework and guided the participants through its basic functions. A designated assistant professor at Nagoya University and research consultant at Tier IV Inc., Alex has profound knowledge of self-driving software and is proficient in applying Autoware to various vehicles. He holds a doctorate (D.Eng.) in Computer Science from the University of Tsukuba, Japan.

Prof. Alex giving a lecture in the classroom

Autoware is the world’s first “all-in-one” open-source software for self-driving vehicles, and it is widely used by developers and engineers in the autonomous driving industry. In his Day 2 lecture, Alex covered key Autoware topics ranging from basic operations and data recording to sensor calibration, 3D map generation and localization. There were many specific and useful steps: clicking the ROSBAG button in Autoware gives access to the ROSBAG recording functions; in the Setup tab, the position of the Localizer (3D laser scanner) can be specified and vehicle models (STL, URDF files) can be added; a 3D map can be generated by recording LiDAR scan data into a ROSBAG; and localization calculates the self-position (the position and direction in the map), with the initial position specified using the RViz button, GNSS data or known map coordinate values.

Autoware course table on Day 2

Discussion with mentor after lecture

Because the ultimate challenge was to recognize traffic lights and achieve the “Start & Stop” functions, the participating engineers focused mostly on labelling data and training models, using the traffic light images collected since Day 1. This was simple, repetitive work: not complicated, but it had to be done. Teams continued collecting data into the second day. One team took pictures with a cellphone, and others used the on-vehicle cameras, relying on the software deployed on the cars on Day 1. Sub-Zero team took a different approach. They neither took traffic light images nor labelled data. Instead, they downloaded traffic light models that contributors had already trained and published online, so they could begin the recognition task right away, which saved them plenty of time for other important tasks.

Teamwork on the software deployment and data processing

Team discussion and mentor guidance

Day #3

The teams moved at different paces, completing the planned tasks one after another. Building on Day 2’s labelled data, trained detectors and configuration files, they officially began working on the cars on Day 3, running field tests and debugging code based on how the cars performed. The three cars provided onsite by PIX supported by-wire control and were equipped with sensors and devices such as Velodyne LiDAR, on-board cameras, in-vehicle industrial-grade computing platforms, RTK, radars and more, which gave the PIX cars powerful and convenient computing and processing capabilities for self-driving.

Calibrating LiDAR

In the morning, Prof. Alex gave the final, in-depth part of his Autoware lectures, combining theory with hands-on guidance on object detection and tracking, path generation and path planning, and path following and vehicle control. Alex mentioned that supervised machine learning facilitates object detection, and that tools like YOLO are helpful too. For traffic light detection, the steps mattered: detect the traffic light color from camera images, calculate the coordinates of the traffic lights from the current position and the VectorMap information obtained through localization, and link the detection result to a path planning node to enable starting and stopping at the traffic light. Besides the knowledge and instructions related to the ultimate challenge, Alex also mentored participants on path planning and object tracking with the IMM-UKF tracker and Open Planner, detecting control signals, ROSBAG playback, localization, and other tools and functions.
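The three traffic light steps from the lecture can be illustrated with a minimal Python sketch. This is not Autoware code: the function names, the color thresholds and the 40 m relevance range are all hypothetical, a real detector would use a trained model rather than a mean-color rule, and the map lookup is reduced here to a 2D frame transform.

```python
import math


def light_in_vehicle_frame(light_xy, vehicle_xy, vehicle_yaw):
    """Express a map-frame traffic light position in the vehicle frame.

    Returns (forward, left) in metres. Yaw is in radians, measured
    counter-clockwise from the map x-axis. (Hypothetical helper.)
    """
    dx = light_xy[0] - vehicle_xy[0]
    dy = light_xy[1] - vehicle_xy[1]
    cos_y, sin_y = math.cos(vehicle_yaw), math.sin(vehicle_yaw)
    # Rotate the map-frame offset by -yaw to get vehicle-frame coordinates.
    forward = cos_y * dx + sin_y * dy
    left = -sin_y * dx + cos_y * dy
    return forward, left


def classify_color(mean_rgb):
    """Toy color rule on the mean RGB of the cropped light region.

    The 1.5x dominance thresholds are assumptions for illustration only.
    """
    r, g, b = mean_rgb
    if r > 1.5 * g and r > 1.5 * b:
        return "red"
    if g > 1.5 * r:
        return "green"
    return "unknown"


def should_stop(color, forward_distance, relevant_range=40.0):
    """Link the detection result to a stop/go decision for the planner."""
    # Ignore lights that are behind the car or too far ahead to matter.
    if forward_distance <= 0.0 or forward_distance > relevant_range:
        return False
    # Be conservative: anything that is not clearly green means stop.
    return color != "green"


# Example: car at the map origin facing +y, light 10 m straight ahead.
forward, left = light_in_vehicle_frame((0.0, 10.0), (0.0, 0.0), math.pi / 2)
color = classify_color((200, 40, 30))
print(color, should_stop(color, forward))
```

Treating an "unknown" color as a stop is a deliberately cautious choice in this sketch; the teams' actual logic is not described in the article.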

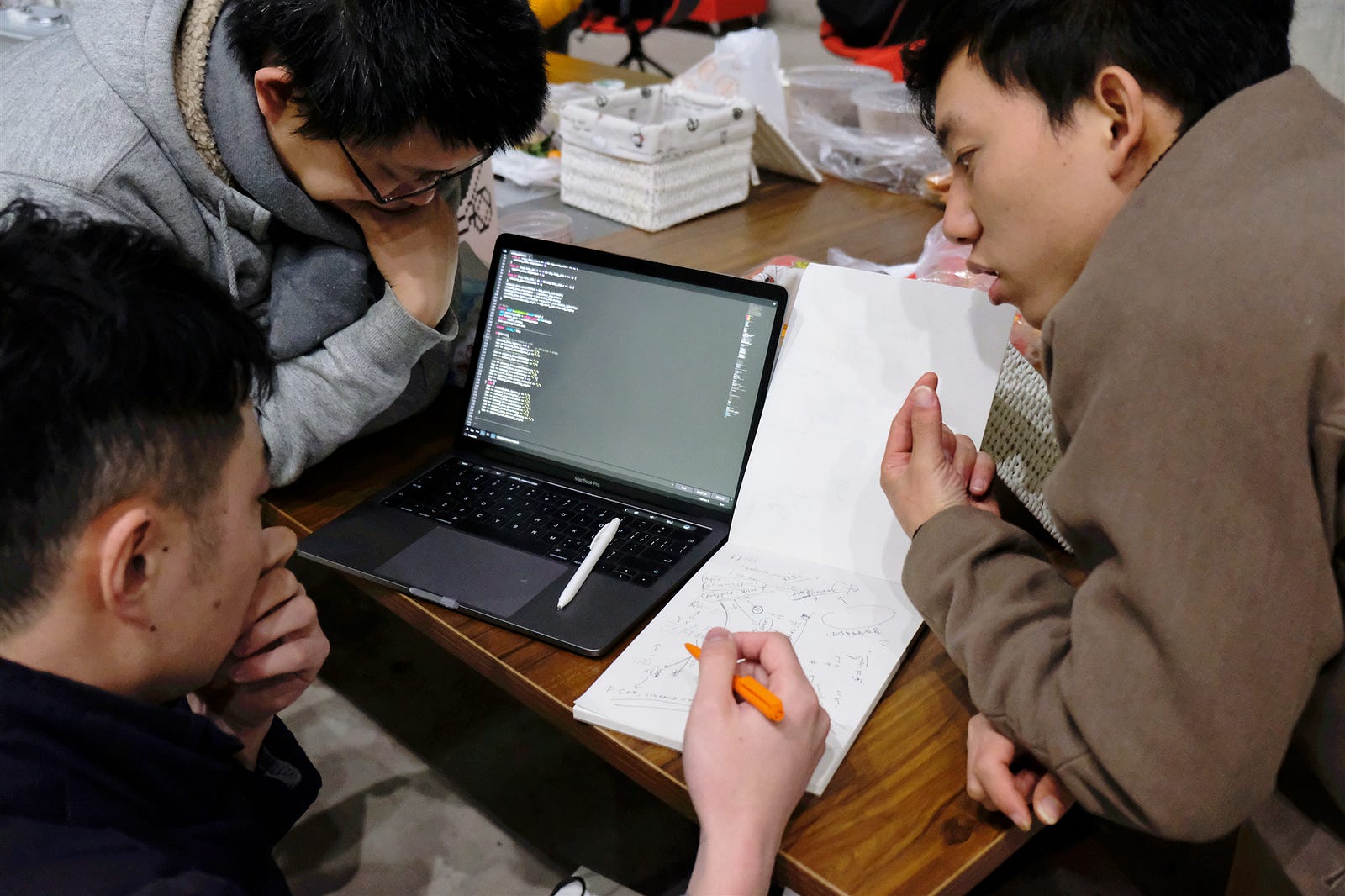

Drawing sketches to explain steps

In the afternoon, all three teams devoted themselves to car testing and model training. They needed to get the cars moving in autonomous driving mode, following the instructions from Alex’s morning lecture. Triple S team had already managed this on Day 2, and the other two teams made it on Day 3, all without too many problems. To make things more challenging so that the engineers would learn in more depth, Prof. Alex added a surprise task: the self-driving car had to remain in front of the stop line for three seconds while detecting the traffic light. The teams tried different approaches to accomplish it. It was fun but hard. By the end of Day 3, all three teams had collected a point cloud map and got their cars moving in autonomous driving mode.
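One way to think about the three-second surprise task is as a small state machine. The sketch below is only an illustration under assumed inputs (a timestamp, an "at stop line" flag, the current speed and the detected light color); the class name, the speed threshold and the command strings are hypothetical and do not come from Autoware or from any team's solution.

```python
class StopLineHold:
    """Hold the car at a stop line for a minimum time before releasing it."""

    def __init__(self, hold_seconds=3.0, stopped_speed=0.05):
        self.hold_seconds = hold_seconds    # required standstill time
        self.stopped_speed = stopped_speed  # m/s, below this counts as stopped
        self.stopped_since = None           # time when the standstill began
        self.released = False               # latched once the car may go

    def update(self, now, at_stop_line, speed, light_color):
        """Return "go" or "stop" for the current control cycle."""
        if not at_stop_line:
            # Away from the stop line: reset and drive normally.
            self.stopped_since = None
            self.released = False
            return "go"
        if self.released:
            # Already released at this stop line: keep going.
            return "go"
        if speed > self.stopped_speed:
            # Still rolling: keep braking and restart the timer.
            self.stopped_since = None
            return "stop"
        if self.stopped_since is None:
            self.stopped_since = now
        held = now - self.stopped_since
        if held >= self.hold_seconds and light_color == "green":
            self.released = True
            return "go"
        return "stop"


# Example: approach, stop, wait three seconds, then leave on green.
hold = StopLineHold()
for t, at_line, speed, color in [
    (0.0, False, 5.0, "red"),
    (1.0, True, 2.0, "red"),
    (2.0, True, 0.0, "red"),
    (5.5, True, 0.0, "green"),
    (6.0, True, 1.0, "green"),
]:
    print(t, hold.update(t, at_line, speed, color))
```

The `released` latch keeps the car from braking again as soon as it starts to roll away from the line. Note that this sketch does not re-check the light after release, which a real system would have to handle.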

Sub-Zero team testing on road

Tur(n)ing team testing on road

Day #4

Day 4 was a roller coaster of ups and downs: a secret guest arrived, there was an in-depth course on by-wire control, it started raining, and staying up late became inevitable.

In the morning, Chang, an engineer from PIX, gave an in-depth talk on vehicle control and the drive-by-wire system of PIX self-driving cars. Drive-by-wire is a system that converts the driver’s control inputs, such as steering, into electrical signals through sensors and transmits them directly to the actuators through cables. PIX drive-by-wire vehicles include by-wire braking, throttle and steering systems, as well as a drive-by-wire electronics system. The different control units interact with the vehicle to give it the electronic control capability that self-driving requires. Through Chang’s course, participants gained a deeper understanding of vehicle control and car hardware.

PIX engineer Chang presenting vehicle control

During the lecture, a secret guest showed up in the classroom, with a familiar face that the participants soon recognized. It was David Silver from Udacity, where he leads the Self-Driving Car Engineer Nanodegree Program. Before Udacity, David was a research engineer on the autonomous vehicle team at Ford. He has an MBA from Stanford and a BSE in computer science from Princeton. Participants were excited, as he was the person giving the lectures in the videos of the Udacity online courses. David gave a brief introduction to himself and the self-driving industry, and his humor and charm fascinated the engineers. David and Alex both provided a great deal of guidance over the next two days. After David’s short speech, Prof. Alex gave some onsite demonstrations and real-time exercises, answering the participants’ questions about using Autoware.

David and Alex meeting and chatting

Udacity mentor David Silver visited PIX office

In the afternoon it started raining, which raised worries among the teams that visual detection might be affected, and as it turned out, the rain did affect detection and recognition. The teams worked closely together and tried to keep to the agenda. From Day 2 onward, some participants had begun staying up late at night for road testing and model training. The main mission of Day 4 was to modify the Autoware source code and port the results of Day 2 and Day 3 into Autoware. For both Tur(n)ing team and Sub-Zero team, the code did not work after porting. One team solved the problem quickly and succeeded in detecting the traffic lights. The other team eventually discovered that they had inadvertently written the same processing code in two different places, which kept the program from working when it ran. Overall, all three teams worked hard and with dedication throughout the five days, and they helped each other when needed. Work continued through the night of Day 4, and Tur(n)ing team kept going until 6 AM the next day. The teams rarely got much sleep during the last three days of the bootcamp.

Checking hardware issue

Charging the car

Code optimization discussion late at night

Day #5

Day 5 was filled with excitement, anxiety and tiredness, followed by happy relaxation at night. The day belonged entirely to the engineers. They kept optimizing code, testing on the road and improving detection performance, getting ready for the onsite Ultimate Challenge at 3 PM, in which each team would have half an hour to finish the designated tasks. However, plans are just plans, and things change. At 3 PM on Day 5, the three teams were still out testing their cars on the road track.

Mentor debugging the program

Tur(n)ing team testing traffic light detection at the stopping line

A rectangular road ran around the outside of the factory, and two traffic lights were set up at its corners for detection and task testing. During testing, Tur(n)ing team’s car ran out of gasoline and they had to go out to refuel, which cost them some time. Meanwhile, the other two teams used the time to test their code. Triple S team had a serious problem: their car could not steer automatically under the new approach they had taken, and they worked on it throughout the testing process. They finally got it working by restarting the program after running certain code, but they still had trouble stopping the car steadily at the stop line on the sloped road. Both Triple S team and Tur(n)ing team finished the challenge with their own solutions. Tur(n)ing team’s problem was not solved until 6 PM, by which time it was already getting dark, but fortunately their trained models were accurate enough to detect the traffic lights even at night. All teams finished the tests and tasks by 8 PM. It was a bit behind schedule, but they all made it.

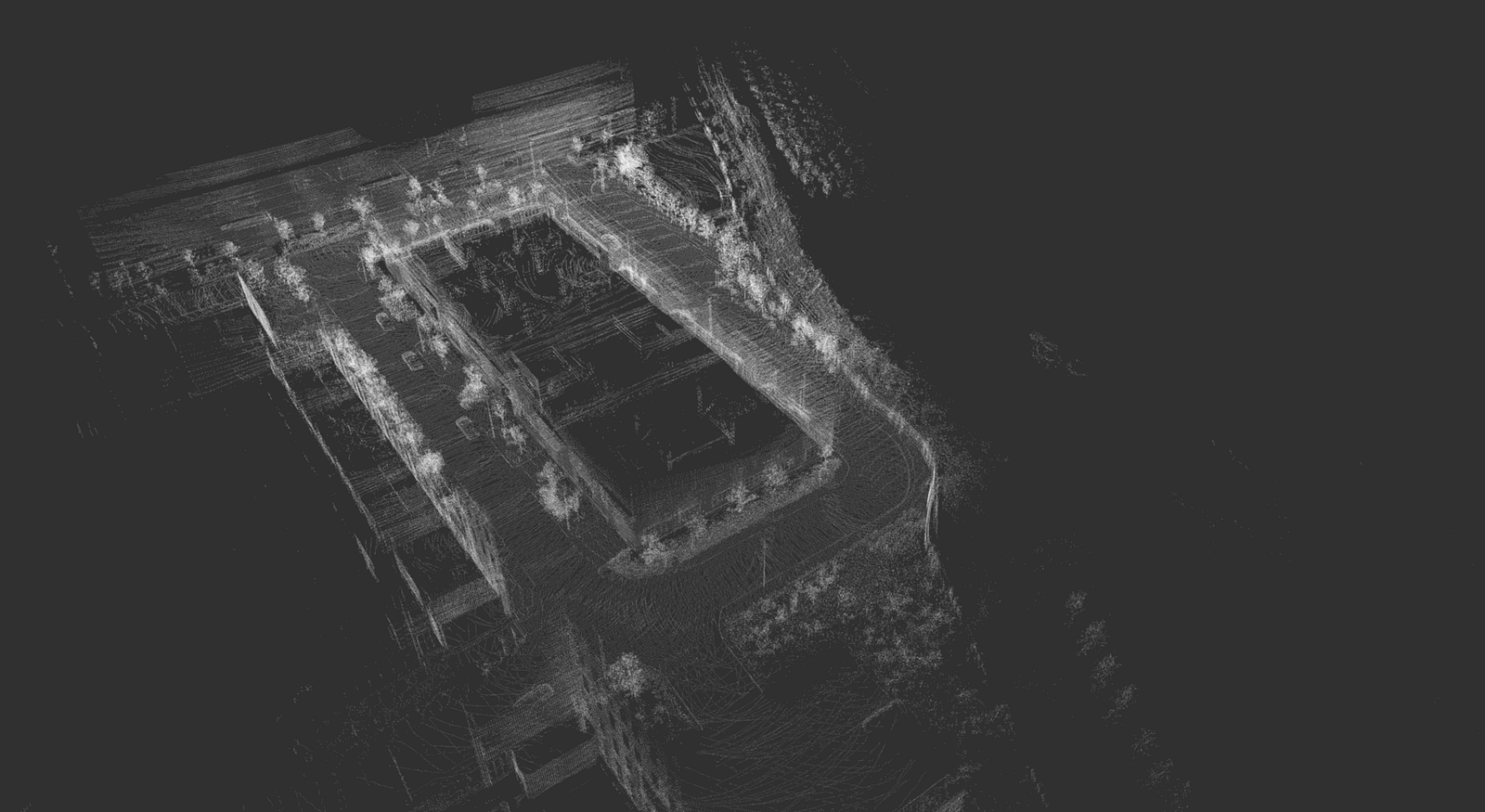

Pointcloud map of the test road

The sheer happiness and excitement when challenge succeeded at 6 PM on the final day

At 9 PM, the party kicked off! The teams finally had time to relax and rest after five days of continuous work. The big long table in the factory was covered with pizza, cakes, juice, pot meat, fruit and all kinds of delicious food, and beer too! Participants drank and talked, and it felt like a meetup dedicated to self-driving engineers. Before the catch-up, each team held a Show & Tell session, having somehow found time in the busy agenda to prepare a presentation. The three teams shared what they had gained and felt, along with their inspirations and insights from the five-day onsite training. Most of the participants were engineers working in companies, eager to embark on a career in the autonomous driving industry. One participant explained that he would soon be assigned to his company’s self-driving department, and that the Training Base program had given him the essential skills and knowledge he needed for the new role. Another engineer mentioned that his company has a car similar to PIX’s vehicle platform, and that he now knew how to make it self-driving, which was cool.

Show & Tell session on Day 5 night

Show & Tell session on Day 5 night

Participants were awarded their program certificates during the Show & Tell session. Backed jointly by PIX, Udacity and Tier IV, the Onsite Training Certificate indicates that the engineer has successfully finished the self-driving onsite training and gained an essential knowledge of self-driving vehicles, from software to hardware, through five days of hands-on practice. The small award ceremony was delightful, and was followed by pleasant discussion, chatting and laughter late into the night. In the end, the participants became friends as well.

Teams getting their certificates

Self-driving Engineer Training Base is a program for training and learning, and it is also a playground where engineers have fun, make new friends, draw inspiration from each other and share a spirit of collaboration. Here, participants practice and test self-driving technologies on real, physical cars, learning both the software and the hardware. They leave equipped with solid knowledge and experience of autonomous driving control, software and more.

The program ignites engineers’ passion for engineering and creativity, around the shared value of using autonomous driving technology to shape a better world. We look forward to the next cohort!