AutoML: Automating the design of machine learning models for autonomous driving

Waymo Team, Jan 15

By: Shuyang Cheng and Gabriel Bender*

At Waymo, machine learning plays a key role in nearly every part of our self-driving system. It helps our cars see their surroundings, make sense of the world, predict how others will behave, and decide their next best move.

Take perception: our system employs a combination of neural nets that enables our vehicles to interpret sensor data, identify objects, and track them over time, so they can build a deep understanding of the world around them. Creating these neural nets is often time-consuming: optimizing neural net architectures to achieve both the quality and the speed needed to run on our self-driving cars is a complex process of fine-tuning that can take our engineers months for a new task.

Now, through a collaboration with Google AI researchers from the Brain team, we’re putting cutting-edge research into practice to automatically generate neural nets. What’s more, these state-of-the-art neural nets are of higher quality and faster than the ones manually fine-tuned by engineers.

To bring our self-driving technology to different cities and environments, we will need to optimize our models for different scenarios quickly. AutoML enables us to do just that, providing a large set of ML solutions efficiently and continuously.

Transfer Learning: Using existing AutoML architectures

Our collaboration started out with a simple question: could AutoML generate a high-quality, low-latency neural net for the car?

Quality measures the accuracy of the answers produced by the neural net. Latency measures how fast the net provides its answers, also called the inference time. Because driving requires our vehicles to act on answers in real time, and given the safety-critical nature of our system, our neural nets need to operate with low latency. Most of our nets that run directly on our vehicles provide results in less than 10 ms, which is faster than many nets deployed in data centers that run on thousands of servers.
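To make the latency requirement concrete, here is a minimal Python sketch of how one might time a model's inference and check it against a budget. The `infer` callable, the warm-up and run counts, and the 10 ms default budget are illustrative assumptions (the budget simply mirrors the figure above); this is not Waymo's benchmarking harness.

```python
import statistics
import time
from typing import Callable, TypeVar

T = TypeVar("T")

# Assumed budget, mirroring the "less than 10 ms" figure in the text.
LATENCY_BUDGET_MS = 10.0


def measure_latency_ms(infer: Callable[[T], object], example: T,
                       warmup: int = 5, runs: int = 50) -> float:
    """Return the median wall-clock time of infer(example), in milliseconds."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    # Warm-up calls let caches and lazy initialization settle before timing.
    for _ in range(warmup):
        infer(example)
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(example)
        samples.append((time.perf_counter() - start) * 1000.0)
    # The median is less sensitive to one-off scheduling hiccups than the mean.
    return statistics.median(samples)


def meets_budget(latency_ms: float, budget_ms: float = LATENCY_BUDGET_MS) -> bool:
    """True if the measured latency fits within the budget."""
    return latency_ms <= budget_ms


if __name__ == "__main__":
    # A stand-in "model": summing a small list takes microseconds.
    toy_model = lambda xs: sum(xs)
    latency = measure_latency_ms(toy_model, list(range(1000)))
    print(f"median latency: {latency:.4f} ms, within budget: {meets_budget(latency)}")
```

For a safety-critical real-time system, a high percentile of the samples (for example p99) is arguably a better gate than the median; the median is used here only to keep the sketch short.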

In their original AutoML paper [1], our Google AI colleagues were able to automatically explore more than 12,000 architectures to solve the classic image recognition task of CIFAR-10: classifying a small image into one of ten categories, such as a car, a plane, or a dog. In a follow-up paper [2], they discovered a family of neural net building blocks, called NAS cells, that could be composed automatically into nets that outperformed hand-crafted ones on CIFAR-10 and similar tasks. Through this collaboration, our researchers decided to use these cells to automatically build new models for tasks specific to self-driving, transferring what was learned on CIFAR-10 to our field. Our first experiment was with a semantic segmentation task: labeling each point in a LiDAR point cloud as a car, a pedestrian, a tree, etc.

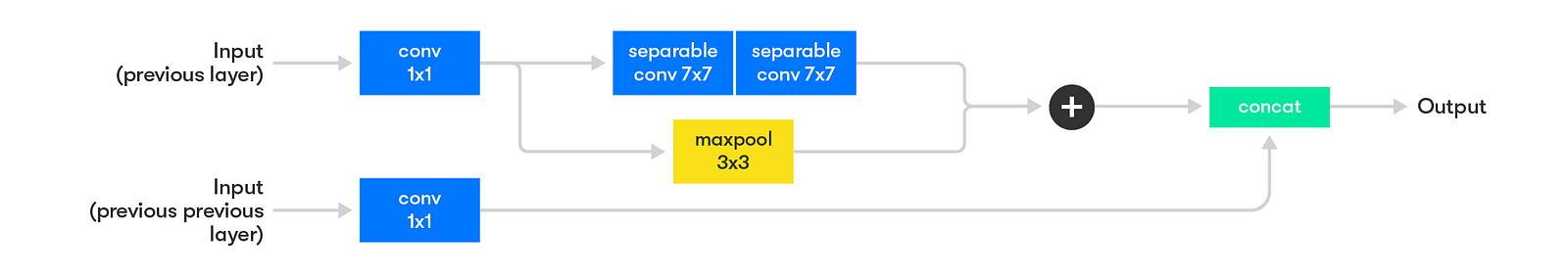

One example of a NAS cell. This cell processes inputs from the two previous layers in a neural net.
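The idea of a cell that consumes the outputs of the two previous layers can be sketched as a small data structure. This loosely follows the recipe described in [2] (each block picks two existing hidden states, applies an operation to each, and adds the results; block outputs that nothing else consumes are concatenated into the cell's output), but everything else is an assumption for illustration: vectors are plain Python lists rather than tensors, and `identity`, `relu`, and `avg_pool3` are toy stand-ins for the real convolution and pooling operations.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Sequence

Vector = List[float]


def identity(x: Vector) -> Vector:
    return list(x)


def relu(x: Vector) -> Vector:
    return [max(0.0, v) for v in x]


def avg_pool3(x: Vector) -> Vector:
    """1-D average pooling, window 3, stride 1; edges average over fewer values."""
    n = len(x)
    out = []
    for i in range(n):
        window = x[max(0, i - 1):min(n, i + 2)]
        out.append(sum(window) / len(window))
    return out


# Toy operation vocabulary (hypothetical stand-ins for convolutions and pooling).
OPS: Dict[str, Callable[[Vector], Vector]] = {
    "identity": identity,
    "relu": relu,
    "avg_pool3": avg_pool3,
}


@dataclass(frozen=True)
class Block:
    """One block: two inputs, one op per input, combined by addition.

    Input indices: 0 = output of layer i-2, 1 = output of layer i-1,
    2, 3, ... = outputs of earlier blocks in this cell.
    """
    input_a: int
    op_a: str
    input_b: int
    op_b: str


def run_cell(blocks: Sequence[Block], h_prev_prev: Vector, h_prev: Vector) -> Vector:
    hidden: List[Vector] = [h_prev_prev, h_prev]
    used = set()
    for blk in blocks:
        for idx in (blk.input_a, blk.input_b):
            if not 0 <= idx < len(hidden):
                raise ValueError(f"block refers to unavailable hidden state {idx}")
        a = OPS[blk.op_a](hidden[blk.input_a])
        b = OPS[blk.op_b](hidden[blk.input_b])
        used.update((blk.input_a, blk.input_b))
        hidden.append([x + y for x, y in zip(a, b)])
    # Concatenate block outputs that no later block consumed.
    out: Vector = []
    for idx in range(2, len(hidden)):
        if idx not in used:
            out.extend(hidden[idx])
    return out
```

For example, with `cell = [Block(0, "identity", 1, "relu"), Block(2, "avg_pool3", 1, "identity")]`, calling `run_cell(cell, [1.0, -2.0, 3.0], [0.5, 0.5, -1.0])` returns `[0.5, 1.5, -0.25]`: the first block's output is consumed by the second, so only the second block's output reaches the cell output. Stacking many such cells is what "composing" NAS cells into a full net amounts to.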

To do this, our researchers set up an automatic search algorithm to explore hundreds of different NAS cell combinations within a convolutional neural network (CNN) architecture, training and evaluating models for our LiDAR segmentation task. When our engineers fine-tune these nets by hand, they can explore only a limited number of architectures; with this method, we automatically explored hundreds. We found models that improved on the previously hand-crafted ones in two ways:

- Some had a significantly lower latency with a similar quality.
- Others had an even higher quality with a similar latency.
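The search-and-compare loop described above can be sketched in a few lines. The post does not say which search algorithm drove this stage, so this sketch assumes plain random sampling; the search space (`cell_id`, `num_cells`, `filters`), the tolerances that define "similar" quality and latency, and the `evaluate` callable (which in practice would train and benchmark a model) are all hypothetical.

```python
import random
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class Arch:
    cell_id: int    # which pre-discovered NAS cell to stack (hypothetical index)
    num_cells: int  # how many copies of the cell to stack
    filters: int    # channel width of each cell


# Hypothetical search space over NAS cell combinations.
SEARCH_SPACE = {"cell_id": [0, 1, 2, 3], "num_cells": [2, 3, 4, 5, 6], "filters": [16, 32, 64]}

Result = Tuple[Arch, float, float]  # (architecture, quality, latency_ms)


def random_search(evaluate: Callable[[Arch], Tuple[float, float]],
                  num_trials: int, seed: int = 0) -> List[Result]:
    """Sample architectures uniformly and record (quality, latency) for each."""
    rng = random.Random(seed)
    results: List[Result] = []
    for _ in range(num_trials):
        arch = Arch(rng.choice(SEARCH_SPACE["cell_id"]),
                    rng.choice(SEARCH_SPACE["num_cells"]),
                    rng.choice(SEARCH_SPACE["filters"]))
        quality, latency_ms = evaluate(arch)
        results.append((arch, quality, latency_ms))
    return results


def improvements(results: List[Result], base_quality: float, base_latency_ms: float,
                 quality_tol: float = 0.005,
                 latency_margin: float = 0.1) -> Dict[str, List[Result]]:
    """Split results into the two kinds of improvement over a hand-crafted baseline."""
    faster = [r for r in results
              if r[1] >= base_quality - quality_tol               # similar quality...
              and r[2] < base_latency_ms * (1 - latency_margin)]  # ...clearly lower latency
    better = [r for r in results
              if r[1] > base_quality + quality_tol                # clearly higher quality...
              and r[2] <= base_latency_ms * (1 + latency_margin)] # ...similar latency
    return {"faster": faster, "better": better}
```

Seeding the sampler makes a search run reproducible, which helps when comparing search settings against each other.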

Given this initial success, we applied the same search algorithm to two additional tasks related to the detection and localization of traffic lanes. The transfer learning technique also worked for these tasks, and we were able to deploy three newly trained and improved neural nets on the car.

End-to-End Search: Searching for new architectures from scratch

We were encouraged by these first results, so we decided to go one step further by looking more widely for completely new architectures that could provide even better results. By not limiting ourselves to combining the already discovered NAS cells, we could look more directly for architectures that took into account our strict latency requirements.

Conducting an end-to-end search ordinarily requires training and evaluating thousands of architectures, which carries large computational costs. Exploring a single architecture requires several days of training on a data center computer with multiple GPU cards, so searching for a single task would take thousands of days of computation. Instead, we designed a proxy task: a scaled-down LiDAR segmentation task that could be solved in just a matter of hours.

One challenge the team had to overcome was finding a proxy task similar enough to our original segmentation task. We experimented with several proxy task designs before we found one where the quality of architectures on the proxy task correlated well with their quality on the original task. We then launched a search similar to the one from the original AutoML paper, but on the proxy task: a proxy end-to-end search. This was the first time this concept had been applied to LiDAR data.

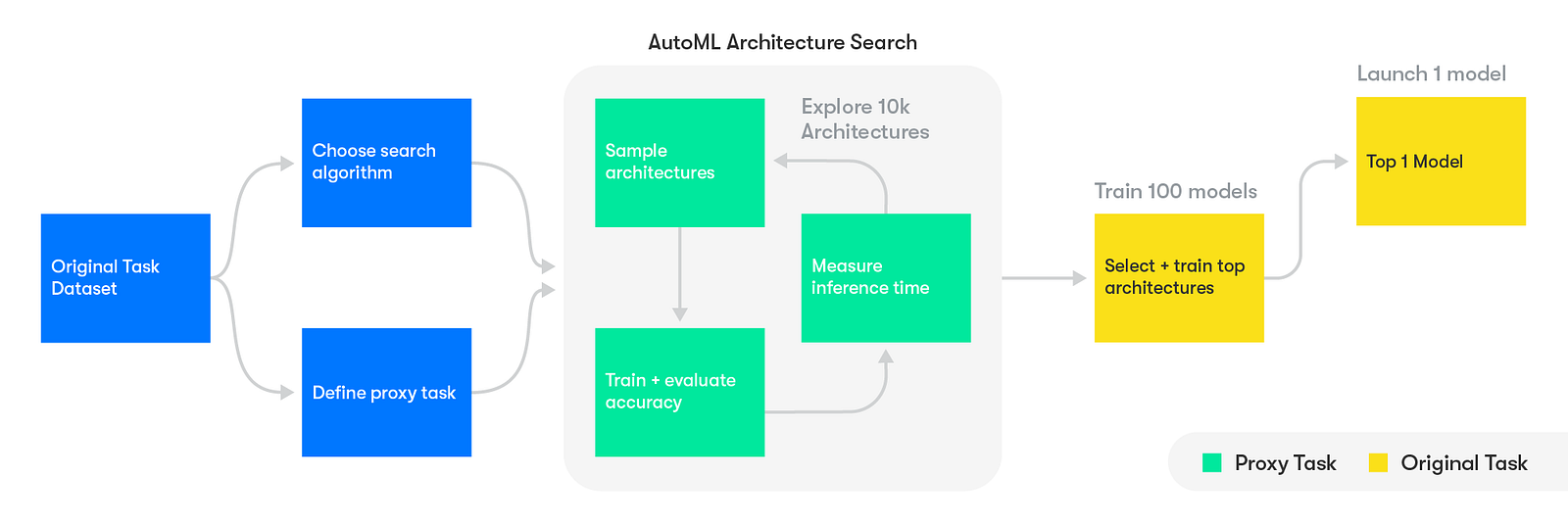

Proxy end-to-end search: Explore thousands of architectures on a scaled-down proxy task, apply the 100 best ones to the original task, validate and deploy the best of the best architectures on the car.
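The stages in this caption map onto a short two-stage selection routine. This is a minimal sketch assuming two hypothetical scoring callables: `proxy_score`, standing for the cheap evaluation on the scaled-down proxy task, and `full_score`, standing for the expensive evaluation on the original task. The default `top_k=100` mirrors the caption; the final validation and deployment step is out of scope.

```python
import heapq
from typing import Callable, Iterable, List, Tuple, TypeVar

A = TypeVar("A")  # any architecture description


def proxy_end_to_end_search(candidates: Iterable[A],
                            proxy_score: Callable[[A], float],
                            full_score: Callable[[A], float],
                            top_k: int = 100) -> List[Tuple[float, A]]:
    """Rank candidates on a cheap proxy, then re-score only the best ones on the full task.

    Returns (full_task_score, candidate) pairs, best first.
    """
    # Score every candidate on the proxy task and keep the top_k.
    shortlist = heapq.nlargest(top_k, candidates, key=proxy_score)
    # Spend the expensive full-task evaluation only on the shortlist.
    rescored = [(full_score(c), c) for c in shortlist]
    rescored.sort(key=lambda pair: pair[0], reverse=True)
    return rescored
```

The saving comes from the second step: with, say, 10,000 candidates, only 1% of them pay for a full-task evaluation.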

We used several search algorithms, optimizing for both quality and latency, since latency is critical on the vehicle. Looking at different types of CNN architectures and using different search strategies, such as random search and reinforcement learning, we were able to explore more than 10,000 different architectures for the proxy task. By using the proxy task, what would have taken over a year of computational time on a Google TPU cluster took only two weeks. We found even better nets than those we had obtained by transferring the NAS cells:

- Neural nets with 20–30% lower latency and results of the same quality.
- Neural nets of higher quality, with an 8–10% lower error rate, at the same latency as the previous architectures.
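The reinforcement learning strategy in [1] trains a controller with a policy-gradient method (REINFORCE), so that architectures earning higher rewards become more likely to be sampled. The sketch below keeps only that core idea and simplifies the rest: instead of the recurrent controller used in [1], each architecture decision gets an independent softmax distribution, and a moving-average baseline reduces gradient variance. The latency-aware reward (quality minus a penalty for exceeding a 10 ms budget) is a hypothetical choice; the post says only that the searches optimized for both quality and latency.

```python
import math
import random
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def latency_aware_reward(quality: float, latency_ms: float,
                         budget_ms: float = 10.0, penalty: float = 0.05) -> float:
    """Hypothetical reward: quality, minus a linear penalty per ms over budget."""
    return quality - penalty * max(0.0, latency_ms - budget_ms)


class Controller:
    """One independent categorical policy per architecture decision."""

    def __init__(self, choices_per_decision: Sequence[int], lr: float = 0.3,
                 baseline_decay: float = 0.9, seed: int = 0) -> None:
        self.logits = [[0.0] * n for n in choices_per_decision]
        self.lr = lr
        self.decay = baseline_decay
        self.baseline = None  # moving average of past rewards
        self.rng = random.Random(seed)

    def sample(self) -> List[int]:
        return [self.rng.choices(range(len(logits)), weights=softmax(logits))[0]
                for logits in self.logits]

    def update(self, actions: Sequence[int], reward: float) -> None:
        if self.baseline is None:
            self.baseline = reward
        advantage = reward - self.baseline
        for logits, action in zip(self.logits, actions):
            probs = softmax(logits)
            for k in range(len(logits)):
                # d log pi(action) / d logit_k = 1[k == action] - p_k
                grad = (1.0 if k == action else 0.0) - probs[k]
                logits[k] += self.lr * advantage * grad
        self.baseline = self.decay * self.baseline + (1 - self.decay) * reward

    def best(self) -> List[int]:
        """Most likely choice for each decision under the current policy."""
        return [max(range(len(logits)), key=lambda k: logits[k]) for logits in self.logits]
```

In a real search, each `sample()` would be built into a model, trained on the proxy task, and benchmarked, and `latency_aware_reward` of the measured quality and latency would be passed to `update()`.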

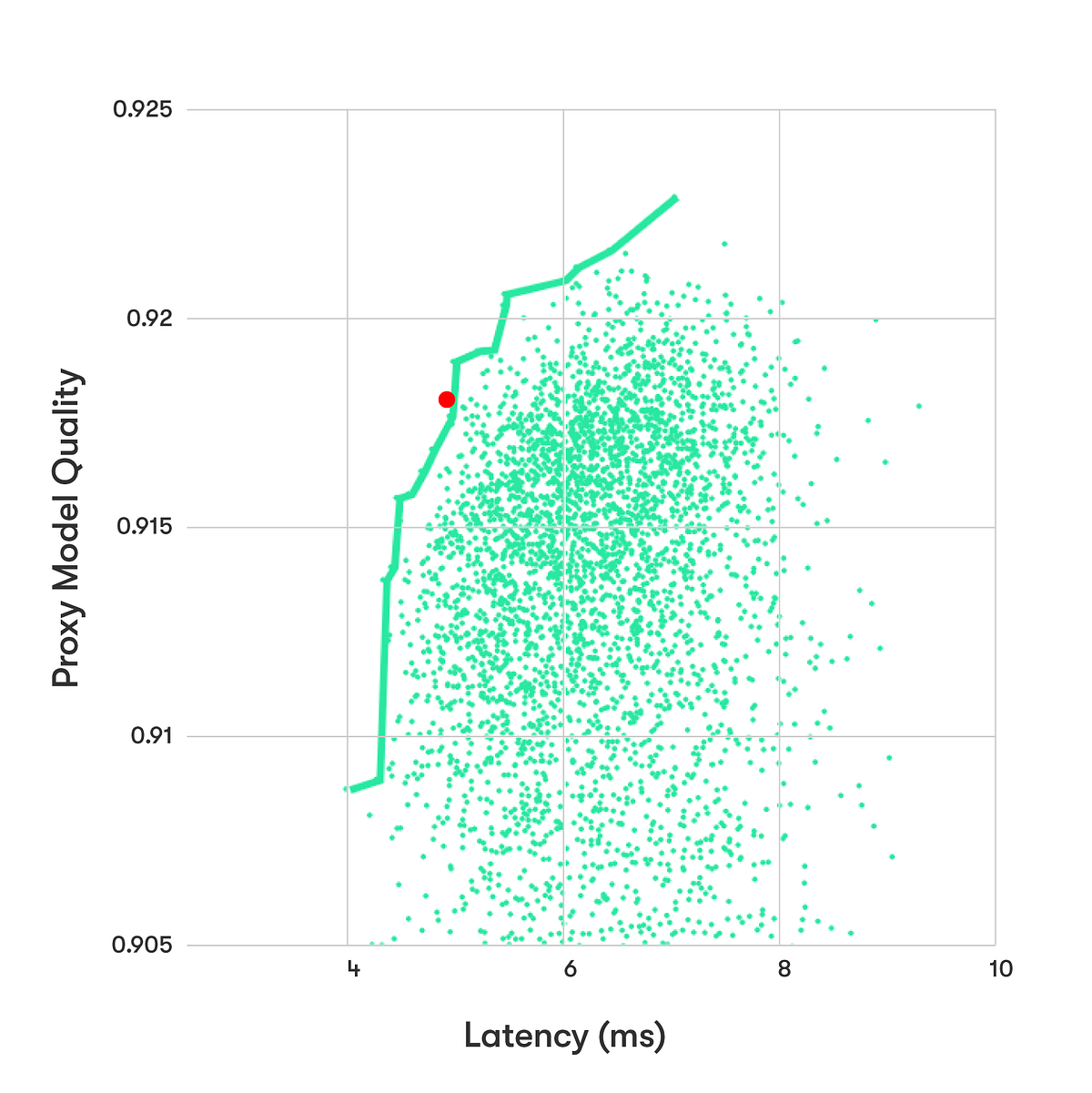

1) The first graph shows about 4,000 architectures discovered with a random search on a simple set of architectures. Each point is an architecture that was trained and evaluated. The solid line marks the best architectures at different inference time constraints. The red dot shows the latency and performance of the net built with transfer learning. In this random search, the nets were not as good as the one from transfer learning. 2) In the second graph, the yellow and blue points show the results of two other search algorithms. The yellow one was a random search on a refined set of architectures. The blue one used reinforcement learning as in [1] and explored more than 6,000 architectures. It yielded the best results. These two additional searches found nets that were significantly better than the net from transfer learning.
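The solid line in the first graph, the best architecture at each inference-time constraint, is the quality/latency Pareto frontier. The caption does not say exactly how the line was drawn, so the following is a minimal sketch of the standard computation, assuming each trained architecture is summarized as a hypothetical `(latency_ms, quality)` pair.

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]  # (latency_ms, quality)


def pareto_frontier(points: Sequence[Point]) -> List[Point]:
    """Points not beaten by any faster-or-equal architecture, ordered by latency."""
    frontier: List[Point] = []
    best_quality = float("-inf")
    # Sort by latency; at equal latency, consider the higher quality first.
    for latency, quality in sorted(points, key=lambda p: (p[0], -p[1])):
        if quality > best_quality:
            frontier.append((latency, quality))
            best_quality = quality
    return frontier


def best_under_budget(points: Sequence[Point], budget_ms: float) -> Optional[Point]:
    """Highest-quality architecture whose latency fits the budget, if any."""
    feasible = [p for p in points if p[0] <= budget_ms]
    return max(feasible, key=lambda p: p[1]) if feasible else None
```

For example, the points `[(5.0, 0.70), (6.0, 0.68), (7.0, 0.75), (7.0, 0.72), (9.0, 0.80), (12.0, 0.79)]` give the frontier `[(5.0, 0.70), (7.0, 0.75), (9.0, 0.80)]`, and with an 8 ms budget the best choice is `(7.0, 0.75)`. Reading the frontier at a given latency is exactly "the best architecture at that inference-time constraint".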

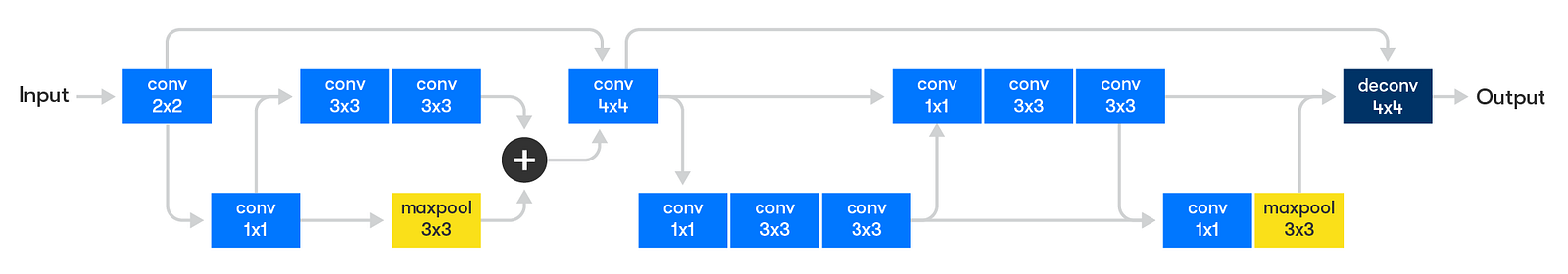

Some of the architectures found in the search showed creative combinations of convolutions, pooling, and deconvolution operations, such as the one in the figure below. These architectures ended up working very well for our original LiDAR segmentation task and will be deployed on Waymo’s self-driving vehicles.

One of the neural net architectures discovered by the proxy end-to-end search.

One of the neural net architectures discovered by the proxy end-to-end search.
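Architectures like this one typically pair downsampling operations (strided convolution, pooling) with upsampling deconvolutions so that the output returns to the input's spatial size, which a dense segmentation output needs, assuming the LiDAR data has been arranged on a regular grid (the post does not say how). The discovered architecture's layer parameters are not given, so the layer list below is hypothetical; the sketch only shows the standard output-size arithmetic for each operation type along one spatial axis.

```python
from typing import List, Sequence, Tuple

# (kind, kernel, stride, padding); kind is "conv", "pool", or "deconv".
Layer = Tuple[str, int, int, int]


def conv_out(n: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output size of a convolution or pooling layer along one axis."""
    return (n + 2 * padding - kernel) // stride + 1


def deconv_out(n: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output size of a transposed convolution ("deconvolution") along one axis."""
    return (n - 1) * stride - 2 * padding + kernel


def trace_sizes(n: int, layers: Sequence[Layer]) -> List[int]:
    """Spatial size after each layer, starting with the input size."""
    sizes = [n]
    for kind, kernel, stride, padding in layers:
        if kind in ("conv", "pool"):
            n = conv_out(n, kernel, stride, padding)
        elif kind == "deconv":
            n = deconv_out(n, kernel, stride, padding)
        else:
            raise ValueError(f"unknown layer kind: {kind}")
        if n <= 0:
            raise ValueError("layer stack shrinks the input to nothing")
        sizes.append(n)
    return sizes


# Hypothetical encoder-decoder along one axis of a 64-wide input.
LAYERS: List[Layer] = [
    ("conv", 3, 1, 1),    # 64 -> 64
    ("pool", 2, 2, 0),    # 64 -> 32
    ("conv", 3, 2, 1),    # 32 -> 16
    ("deconv", 2, 2, 0),  # 16 -> 32
    ("deconv", 2, 2, 0),  # 32 -> 64
]
```

`trace_sizes(64, LAYERS)` returns `[64, 64, 32, 16, 32, 64]`, confirming that the two deconvolutions restore the resolution removed by the pooling and strided convolution.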

What’s next

Our AutoML experiments are just the beginning. For our LiDAR segmentation tasks, both transfer learning and proxy end-to-end search provided nets that were better than hand-crafted ones. We now have the opportunity to apply these mechanisms to new types of tasks too, which could improve many other neural nets.

This development opens up new and exciting avenues for our future ML work and will improve the performance and capabilities of our self-driving technology. We look forward to continuing our work with Google AI, so stay tuned for more!

References

[1] Barret Zoph and Quoc V. Le. Neural architecture search with reinforcement learning. ICLR, 2017.

[2] Barret Zoph, Vijay Vasudevan, Jonathon Shlens, and Quoc V. Le. Learning transferable architectures for scalable image recognition. CVPR, 2018.

*Acknowledgements

This collaboration between Waymo and Google was initiated and sponsored by Matthieu Devin of Waymo and Quoc Le of Google. The work was conducted by Shuyang Cheng of Waymo and Gabriel Bender and Pieter-Jan Kindermans of Google. Extra thanks to Vishy Tirumalashetty for their support.

Members of the Waymo and Google teams (from left): Gabriel Bender, Shuyang Cheng, Matthieu Devin, and Quoc Le