Co-authored with Marius Milea

Introduction

At Bestmile, we use Terraform to manage our AWS infrastructure as code. As the infrastructure grew, our Terraform code became more and more tangled.

The maintainability of our Terraform code was becoming an issue and so was its efficiency.

Terraform is great, but it needs a few additions to make it shine. This is where Terragrunt comes into play.

Terragrunt is a wrapper for Terraform that extends its functionality and addresses some of its limitations. It is configured with HCL (HashiCorp Configuration Language) files, and it runs your Terraform code according to what those files define. This HCL layer is what brings the extra benefits described below and makes Terragrunt such a wonderful tool.

To cut it short, this is what we would have missed out on by only using Terraform:

- no dependencies between separately applied modules: Terraform cannot express that one module with its own state must be applied before another one. Terragrunt solves this with the dependencies block, which lists the modules that have to be applied first.

- no retries on known errors: some Terraform errors go away just by rerunning the apply command. Terragrunt can retry automatically when it hits such an error.

- no way to deploy the same version of a Terraform module across all our environments: let’s say we want to make a change to our VPC Terraform module. Ideally, that change should be released as an artifact, tagged with a proper version and rolled out to all environments. Terragrunt solves this by letting each environment source the module at a specific Git branch or tag.

- no way to keep the Terraform backend configuration DRY across all our environments (S3 state bucket, region, DynamoDB table etc): for every sub-component of our infrastructure (EKS, S3, IAM, MSK etc) we had to redefine the Terraform backend configuration over and over again. More sub-components meant more pain for our SRE team. Terragrunt solves this by defining the backend once in the root terragrunt.hcl and using the path_relative_to_include() function to derive a distinct state location from each sub-component’s directory.

- no global plan or apply: once the Terraform code grows large enough, it has to be organised into folders, and Terraform has no way of running a plan or apply command across all of them. Terragrunt provides plan-all and apply-all for this (newer Terragrunt releases replace them with run-all plan and run-all apply).

Migration steps

Structural code changes from Terraform to Terragrunt

Even though migrating to Terragrunt doesn’t require much refactoring of the Terraform code that is already running, it’s important to see what the Terraform repository looks like before starting:

$ tree
.
├── dev
│   ├── efs.tf
│   ├── eks.tf
...omitted for brevity...
│   ├── vpc.tf
├── mgmt
│   ├── eks.tf
│   ├── mgmt.tf
│   ├── outputs.tf
├── modules
│   ├── efs
│   │   ├── main.tf
│   │   ├── output.tf
│   │   └── variables.tf
│   ├── eks
│   │   ├── main.tf
│   │   ├── output.tf
│   │   └── variables.tf
...omitted for brevity...
│   ├── vpc
│   │   ├── main.tf
│   │   ├── output.tf
│   │   └── variables.tf
├── prod
│   ├── efs.tf
│   ├── eks.tf
...omitted for brevity...
│   ├── vpc.tf
├── staging
│   ├── efs.tf
│   ├── eks.tf
...omitted for brevity...
│   ├── vpc.tf

The layout above is what we had been using to run our AWS infrastructure for a good while.

Now, after the migration to Terragrunt, our repository looks like this:

$ tree
.
├── README.md
├── atlantis.yaml
├── live
│   ├── account.hcl
│   └── us-east-1
│       ├── dev
│       │   ├── efs
│       │   │   └── terragrunt.hcl
│       │   ├── eks
│       │   │   └── terragrunt.hcl
│       │   ├── env.hcl
...omitted for brevity...
│       │   ├── vpc
│       │   │   └── terragrunt.hcl
│       ├── mgmt
│       │   ├── env.hcl
│       │   ├── vpc
│       │   │   └── terragrunt.hcl
│       ├── prod
│       │   ├── efs
│       │   │   └── terragrunt.hcl
│       │   ├── eks
│       │   │   └── terragrunt.hcl
│       │   ├── env.hcl
...omitted for brevity...
│       │   ├── vpc
│       │   │   └── terragrunt.hcl
│       ├── region.hcl
│       └── staging
│           ├── efs
│           │   └── terragrunt.hcl
│           ├── eks
│           │   └── terragrunt.hcl
│           ├── env.hcl
...omitted for brevity...
│           ├── vpc
│           │   └── terragrunt.hcl
└── terragrunt.hcl

Terragrunt example

We will demonstrate how to implement the VPC functionality using Terragrunt (we have chosen the VPC for this example, but any other AWS component would work just as well). The implementation consists of two parts:

- the Terraform module

- the Terragrunt code

The Terraform module is the official module found here, but it can also be a custom-made module.

The Terragrunt code is made of two parts as well:

- the input variables which can be environment-wide variables (env.hcl), region-wide variables (region.hcl) and/or account-wide variables (account.hcl)

./terragrunt.hcl
./live/account.hcl
./live/us-east-1/region.hcl
./live/us-east-1/dev/env.hcl
./live/us-east-1/prod/env.hcl

where ./live/us-east-1/dev/env.hcl would look like this:

# Set common variables for the environment. This is automatically pulled in by the root terragrunt.hcl configuration to
# feed forward to the child modules.
locals {
  dev_region_vars = read_terragrunt_config(find_in_parent_folders("region.hcl"))

  # VPC VARIABLES
  vpc_private_subnets_range = local.dev_region_vars.locals.dev_vpc_private_subnets_range
  vpc_public_subnets_range  = local.dev_region_vars.locals.dev_vpc_public_subnets_range
  vpc_azs                   = local.dev_region_vars.locals.dev_vpc_azs
  vpc_cidr                  = local.dev_region_vars.locals.dev_vpc_cidr

  ...omitted for brevity...
}

and ./live/us-east-1/region.hcl would look like this:

# Set common variables for the region. This is automatically pulled in by the root terragrunt.hcl configuration to
# configure the remote state bucket and pass forward to the child modules as inputs.
locals {
  aws_region = "us-east-1"

  # DEV VPC
  dev_vpc_private_subnets_range = ["10.10.1.0/24", "10.10.2.0/24", "10.10.3.0/24"]
  dev_vpc_public_subnets_range  = ["10.10.101.0/24", "10.10.102.0/24", "10.10.103.0/24"]
  dev_vpc_azs                   = ["us-east-1a", "us-east-1b", "us-east-1c"]
  dev_vpc_cidr                  = "10.10.0.0/16"

  ...omitted for brevity...
}

- and the actual Terragrunt code which lives in ./live/us-east-1/dev/vpc/terragrunt.hcl:

locals {
  # Load environment-wide variables
  environment_vars = read_terragrunt_config(find_in_parent_folders("env.hcl"))

  # Extract needed variables for reuse
  env                   = local.environment_vars.locals.environment
  private_subnets_range = local.environment_vars.locals.vpc_private_subnets_range
  public_subnets_range  = local.environment_vars.locals.vpc_public_subnets_range
  azs                   = local.environment_vars.locals.vpc_azs
  cidr                  = local.environment_vars.locals.vpc_cidr
}

# Terragrunt will copy the Terraform configurations specified by the source parameter, along with any files in the
# working directory, into a temporary folder, and execute your Terraform commands in that folder.
# If the Terraform module is in the root directory of its repository, make sure to put the `//` before the branch or version.
terraform {
  source = "git::git@your_git_repo.com:your_team/terragrunt-module-vpc.git//?ref=v1.0"
}

# Include all settings from the root terragrunt.hcl file
include {
  path = find_in_parent_folders()
}

# These are the variables we have to pass in to use the module specified in the terraform block above.
# The locals are referenced directly; wrapping them in "${...}" is not needed.
inputs = {
  env                   = local.env
  private_subnets_range = local.private_subnets_range
  public_subnets_range  = local.public_subnets_range
  azs                   = local.azs
  cidr                  = local.cidr
}

Importing resources from Terraform into Terragrunt

Importing the resources from the current Terraform state into the new Terragrunt state is a two-step process:

- List and show the current Terraform state:

$ terraform state list | grep vpc
...omitted for brevity...
module.dev_vpc.module.vpc.aws_vpc.this[0]
...omitted for brevity...

$ terraform state show module.dev_vpc.module.vpc.aws_vpc.this[0]
# module.dev_vpc.module.vpc.aws_vpc.this[0]:
resource "aws_vpc" "this" {
    arn = "arn:aws:ec2:us-east-1:YOUR_ACCOUNT_ID:vpc/vpc-0123456789"
    ...omitted for brevity...
    id = "vpc-0123456789"
    ...omitted for brevity...
}

- Import the state into Terragrunt:

$ terragrunt import module.vpc.aws_vpc.this[0] vpc-0123456789

Note that in the example above we’re using the VPC ID to import the VPC resource into Terragrunt. Other AWS components may use other identifiers when importing them. For example, when importing a security group rule, the identifier would be more complex as shown here.
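The two steps above differ in one detail that is easy to miss: in the old Terraform state the VPC sits under a per-environment wrapper module (module.dev_vpc), while the Terragrunt state addresses it directly as module.vpc. The Python sketch below illustrates that address rewrite. The function names are hypothetical, and the wrapper-prefix convention is an assumption drawn from this one example, not a general rule.

```python
import shlex


def to_terragrunt_address(terraform_address: str, wrapper: str) -> str:
    """Drop the leading wrapper module (e.g. 'module.dev_vpc') from an address."""
    prefix = wrapper + "."
    if not terraform_address.startswith(prefix):
        raise ValueError(f"{terraform_address!r} is not under {wrapper!r}")
    return terraform_address[len(prefix):]


def import_command(terraform_address: str, wrapper: str, resource_id: str) -> str:
    """Render a `terragrunt import` line for one resource of the old state."""
    address = to_terragrunt_address(terraform_address, wrapper)
    # Quote both arguments so that shells do not try to glob the [0] index.
    return f"terragrunt import {shlex.quote(address)} {shlex.quote(resource_id)}"


print(
    import_command(
        "module.dev_vpc.module.vpc.aws_vpc.this[0]",
        "module.dev_vpc",
        "vpc-0123456789",
    )
)
```

The quoting is a precaution for shells that treat square brackets as glob characters; the unquoted form shown above works in shells that leave unmatched patterns alone.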

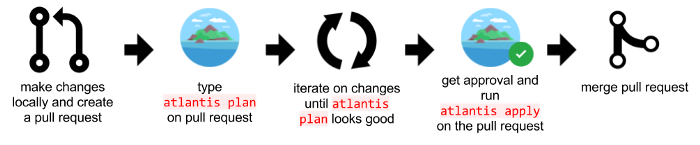

Atlantis workflow

Atlantis is a tool we use for pull request automation. With every pull request, we get the Terraform plan right in the pull request itself, which makes collaboration much easier. And that’s not all: Atlantis has many more benefits, which you can read about here.

Note: the Atlantis part is completely optional. One doesn’t need Atlantis in order to be able to run Terraform and Terragrunt. However, it is such a valuable tool that we thought including it here would be absolutely worth it.

Now, in order to get Atlantis to work with Terragrunt we followed these three steps.

Setup Terragrunt with Atlantis

Create a Docker image including the Terragrunt package

FROM runatlantis/atlantis:latest

# Terragrunt release to install, passed at build time with --build-arg TERRAGRUNT=<version>
ARG TERRAGRUNT
ADD https://github.com/gruntwork-io/terragrunt/releases/download/${TERRAGRUNT}/terragrunt_linux_amd64 /usr/local/bin/terragrunt
RUN chmod +x /usr/local/bin/terragrunt

Deploy Atlantis

You’ll need to change and adapt the values of the Atlantis deployment. At Bestmile we deploy all our services with Helm.

$ helm inspect values stable/atlantis > atlantis-values.yaml
...
edit your file with the proper values
...
$ helm install atlantis stable/atlantis -f atlantis-values.yaml

Configure atlantis.yaml in your Terragrunt Live repository and add specific Atlantis Workflow

We’ve used this awesome tool to automatically generate the Atlantis configuration:

$ terragrunt-atlantis-config generate --autoplan --parallel=false --workflow terragrunt --root ./ --output ./atlantis.yaml

$ cat atlantis.yaml
version: 3
automerge: false
parallel_apply: false
parallel_plan: false
projects:
- autoplan:
    enabled: true
    when_modified:
    - '*.hcl'
    - '*.tf*'
  dir: ./live/us-east-1/dev/vpc
  workflow: terragrunt
workflows:
  terragrunt:
    plan:
      steps:
      - run: /usr/local/bin/terragrunt plan -no-color -out $PLANFILE
    apply:
      steps:
      - run: /usr/local/bin/terragrunt apply -no-color $PLANFILE

As you can see in the atlantis.yaml file, we use a dedicated “workflow” so that Atlantis runs the Terragrunt commands. We’ve adapted our workflow to our needs, but the Atlantis documentation offers some other examples.

Collaboration workflow with Atlantis

Once upon a time…

We were running the Terraform commands (terraform plan and terraform apply) locally. Working like this had a major drawback: besides a “simple” PR, our colleagues could not review what was going on. The output had to be shared over email or Slack so the team could validate the changes and move forward.

This was very tedious and error-prone.

The future is here…

With the discovery of the brand new world of Atlantis, our workflow has improved dramatically. No more hundred-line emails or never-ending Slack snippets!

Happiness was right around the corner, or rather, right around the PR.

With Atlantis, every plan or apply is commented in a PR! This is how our collaboration workflow now looks:

Hiccups along the way

We faced some challenges when importing the state of some of the resources into the Terragrunt state, but thankfully the Terragrunt community is very active and we were able to find a workaround. The main issues we encountered are described below.

Security groups

At first we were using a hand-made module for the aws_security_group, which led to some problems during the import. Terragrunt/Terraform automatically creates an aws_security_group_rule if the rule is not already defined, as this documentation shows. To work around this, once we had imported the security group resource, we had to manually delete every aws_security_group_rule that had been automatically generated.

$ terragrunt state rm module.sg.aws_security_group_rule.workers-1[0]
$ terragrunt state rm module.sg.aws_security_group_rule.workers-1[1]
$ terragrunt state rm module.sg.aws_security_group_rule.workers-1[2]
...

This led us to change the way we provision our security groups: we started using the official Terraform module for security groups, which offers many more options than our own module.

Re-creation of all imported resources

Depending on the module you’re using, Terragrunt sometimes wants to recreate all the resources even if everything has been awesomely imported. This led us to a deep dive into both remote states (Terragrunt’s and Terraform’s). Before we start, we need to pull both of them:

Terragrunt:

$ cd /src/bestmile/terragrunt/live/us-east-1/dev/some_module
$ terragrunt state pull > state.json

Terraform:

$ cd /src/bestmile/terraform/some_module
$ terraform state pull > state.json

We have discovered that some imported resources do not have the name_prefix set in the Terragrunt state.

That is a simple one!

We just needed to set the value of name_prefix to match what is in the Terraform state.
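Spotting such differences by eye in two large JSON files is tedious. As a rough illustration, the Python sketch below compares one attribute between two pulled state files that have already been loaded with json.load. It assumes the version 4 state layout (a top-level "resources" list whose entries carry "type", "name" and "instances" with "attributes"), and it matches resources by type, name and index only, ignoring the module path, because the module path differs between the two states. The function names are hypothetical.

```python
def index_attribute(state: dict, attribute: str) -> dict:
    """Map (type, name, index_key) to the value of one attribute."""
    found = {}
    for resource in state.get("resources", []):
        for instance in resource.get("instances", []):
            key = (resource["type"], resource["name"], instance.get("index_key"))
            found[key] = (instance.get("attributes") or {}).get(attribute)
    return found


def attribute_mismatches(terraform_state: dict, terragrunt_state: dict,
                         attribute: str = "name_prefix") -> dict:
    """Return resources present in both states whose attribute values differ."""
    old = index_attribute(terraform_state, attribute)
    new = index_attribute(terragrunt_state, attribute)
    return {
        key: (old[key], new[key])
        for key in old.keys() & new.keys()
        if old[key] != new[key]
    }


# Tiny hand-written states for illustration only.
terraform_state = {"resources": [{
    "module": "module.dev_sg", "mode": "managed",
    "type": "aws_security_group", "name": "workers",
    "instances": [{"index_key": 0, "attributes": {"name_prefix": "workers-"}}],
}]}
terragrunt_state = {"resources": [{
    "module": "module.sg", "mode": "managed",
    "type": "aws_security_group", "name": "workers",
    "instances": [{"index_key": 0, "attributes": {"name_prefix": None}}],
}]}

for key, (old_value, new_value) in attribute_mismatches(terraform_state, terragrunt_state).items():
    print(key, "terraform:", old_value, "terragrunt:", new_value)
```

Matching on type, name and index can collide when two modules define a resource with the same type and name, so the result is a hint for where to look, not a verdict.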

Once we had updated the Terragrunt state with these values, we had to push the state back into our Terragrunt backend (in our case, an S3 bucket). The changes are taken into account during the next terragrunt plan.

$ terragrunt state push state.json

Unfortunately, pushing the Terragrunt state led to another small hiccup.

Cannot Push the Terragrunt state

At the top of the Terragrunt state there is a “serial” number. If you cannot push the modified Terragrunt state, make sure to increment it by 1. (source)
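Editing the number by hand works fine. For repeated pushes, a small helper can do the same thing; the Python sketch below is a minimal illustration that assumes the pulled state is a JSON document with an integer "serial" field at the top level. The function names are hypothetical.

```python
import json


def bump_serial(state: dict) -> dict:
    """Return a copy of the state with its serial incremented by one."""
    bumped = dict(state)
    bumped["serial"] = int(state["serial"]) + 1
    return bumped


def bump_serial_in_file(path: str) -> None:
    """Rewrite a pulled state file in place with the incremented serial."""
    with open(path) as handle:
        state = json.load(handle)
    with open(path, "w") as handle:
        json.dump(bump_serial(state), handle, indent=2)
        handle.write("\n")


print(bump_serial({"version": 4, "serial": 41, "lineage": "example"})["serial"])
```

Calling bump_serial_in_file("state.json") right before terragrunt state push state.json mirrors the manual edit described above.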

Tricky imports that are not based on the “id”:

To make our life easier, we developed a Python script that queries the Terraform documentation and looks up the import command for each resource type.

The script outputs something like this, which we could then run to perform our imports:

$ python tg_import.py -e dev -r vpc
terragrunt import module.vpc.aws_eip.nat[0] eip-nat-id-1234567890
terragrunt import module.vpc.aws_internet_gateway.this[0] igw-id-1234567890
terragrunt import module.vpc.aws_nat_gateway.this[0] nat-gw-1234567890
terragrunt import module.vpc.aws_route.private_nat_gateway[0] rt-pv-1234567890
terragrunt import module.vpc.aws_route.public_internet_gateway[0] rt-pub-1234567890
terragrunt import module.vpc.aws_route_table.private[0] rt-tb-pv-1234567890
terragrunt import module.vpc.aws_route_table.public[0] rt-tb-pub-1234567890
terragrunt import module.vpc.aws_route_table_association.private[0] tassoc-pv-1234567890
terragrunt import module.vpc.aws_route_table_association.public[0] tassoc-pub-1234567890
terragrunt import module.vpc.aws_subnet.private[0] subnet-1234567890
terragrunt import module.vpc.aws_subnet.public[0] subnet-9876543210
terragrunt import module.vpc.aws_vpc.this[0] vpc-1234567890
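The script itself is not reproduced here. As a much simpler starting point, and not the approach described above, the Python sketch below walks a pulled Terraform state and lists each managed resource address together with its "id" attribute. It assumes the version 4 state layout, the function names are hypothetical, and it only produces candidates: for the tricky resource types the import identifier is not the "id", so those still need the documentation lookup.

```python
def resource_address(resource: dict, instance: dict) -> str:
    """Build an address such as module.vpc.aws_vpc.this[0] from a state entry."""
    parts = [resource["module"]] if resource.get("module") else []
    parts.append(f'{resource["type"]}.{resource["name"]}')
    address = ".".join(parts)
    key = instance.get("index_key")
    if isinstance(key, int):
        address += f"[{key}]"        # count-style index
    elif isinstance(key, str):
        address += f'["{key}"]'      # for_each-style key
    return address


def id_candidates(state: dict) -> list:
    """Return sorted (address, id) pairs for managed resources that have an id."""
    pairs = []
    for resource in state.get("resources", []):
        if resource.get("mode") != "managed":
            continue  # data sources are never imported
        for instance in resource.get("instances", []):
            resource_id = (instance.get("attributes") or {}).get("id")
            if resource_id:
                pairs.append((resource_address(resource, instance), resource_id))
    return sorted(pairs)


# Hand-written sample standing in for json.load() of a pulled state.json.
sample_state = {"version": 4, "resources": [
    {"module": "module.dev_vpc.module.vpc", "mode": "managed",
     "type": "aws_vpc", "name": "this",
     "instances": [{"index_key": 0, "attributes": {"id": "vpc-0123456789"}}]},
    {"mode": "data", "type": "aws_region", "name": "current",
     "instances": [{"attributes": {"id": "us-east-1"}}]},
]}

for address, resource_id in id_candidates(sample_state):
    print(address, resource_id)
```

The addresses come out as they appear in the old state, so any environment-specific wrapper module still has to be removed before they are used with terragrunt import.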

Conclusion

Migrating to Terragrunt was quite a journey. It was painful at times, and time-consuming. But did we get any benefits after all the trouble?

YES!

The tedious part of the migration was specific to moving the existing Terraform state into the Terragrunt state.

Among the major benefits the tool has to offer, we improved the modules we use to deploy our infrastructure. This resulted in fewer lines of infrastructure code, as Terragrunt helped us to DRY our code base.

What we love the most in the git repository hosting our live infrastructure is the folder structure. The layered approach makes it cleaner than what we had at the beginning. Any developer with little to no knowledge of Terragrunt can understand the layers of Bestmile’s infrastructure.

Versioning has brought us much happiness. When we want to introduce changes into our infrastructure, we follow the standard promotion process: we start by bumping the version in Dev, then move on to the other environments until we reach Prod.

Migrating from Terraform to Terragrunt was originally published in Bestmile on Medium, where people are continuing the conversation by highlighting and responding to this story.