Path-breaking work that translates an amputee’s thoughts into finger motions, and even commands in video games, holds open the possibility of humans controlling just about anything digital with their minds.

Using GPUs, a group of researchers trained an AI neural decoder able to run on a compact, power-efficient NVIDIA Jetson Nano system on module (SOM) to translate 46-year-old Shawn Findley’s thoughts into individual finger motions.

And if that breakthrough weren’t enough, the team then plugged Findley into a PC running Far Cry 5 and Raiden IV, where he had his game avatar move, jump — even fly a virtual helicopter — using his mind.

It’s a demonstration that promises to give amputees more natural and responsive control over their prosthetics. It could also one day give users almost superhuman capabilities.

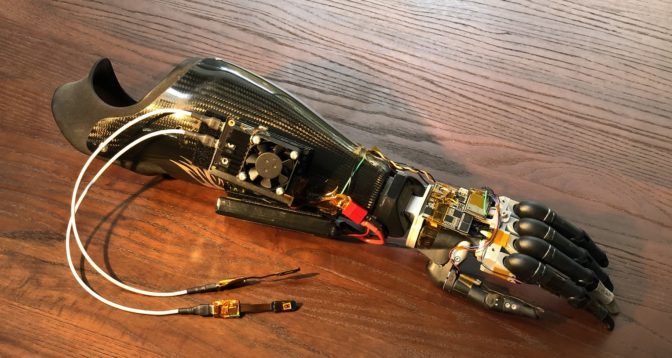

The effort is detailed in a draft paper, or pre-print, titled “A Portable, Self-Contained Neuroprosthetic Hand with Deep Learning-Based Finger Control.” The paper describes an extraordinary cross-disciplinary collaboration behind a system that, in effect, allows humans to control just about anything digital with their thoughts.

“The idea is intuitive to video gamers,” said Anh Tuan Nguyen, the paper’s lead author and now a postdoctoral researcher at the University of Minnesota advised by Associate Professor Zhi Yang.

“Instead of mapping our system to a virtual hand, we just mapped it to keystrokes — and five minutes later, we’re playing a video game,” said Nguyen, an avid gamer, who holds a bachelor’s degree in electrical engineering and Ph.D. in biomedical engineering.
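The keystroke mapping Nguyen describes can be pictured roughly as follows. This is only a minimal sketch under stated assumptions: the finger names, key bindings, flexion scale and 0.5 threshold are all hypothetical and do not come from the paper.

```python
# Hypothetical sketch: turn decoded finger flexion values into key presses.
# Finger names, key bindings, and the 0.5 threshold are illustrative
# assumptions, not details from the paper.

FINGER_TO_KEY = {
    "thumb": "space",   # e.g. jump
    "index": "w",       # e.g. move forward
    "middle": "a",
    "ring": "d",
    "little": "s",
}


def keys_for(flexion, threshold=0.5):
    """Return the sorted keys whose finger is flexed past the threshold.

    `flexion` maps a finger name to a decoded flexion value in [0, 1].
    Fingers missing from the mapping are treated as fully relaxed.
    """
    return sorted(
        key
        for finger, key in FINGER_TO_KEY.items()
        if flexion.get(finger, 0.0) > threshold
    )


decoded = {"thumb": 0.9, "index": 0.7, "middle": 0.1, "ring": 0.0, "little": 0.2}
print(keys_for(decoded))  # ['space', 'w']
```

Swapping the target from a virtual hand to a game then only means changing the lookup table, which fits the "five minutes later" turnaround Nguyen mentions.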

In short, Findley, a pastor in East Texas who lost his hand following an accident in a machine shop 17 years ago, was able to use an AI decoder trained on an NVIDIA TITAN X GPU and deployed on the NVIDIA Jetson to translate his thoughts in real time into actions inside a virtual environment running on, of course, yet another NVIDIA GPU, Nguyen explained.

Bionic Plan

Findley was one of a handful of patients who participated in the clinical trial supported by the U.S. Defense Advanced Research Projects Agency’s HAPTIX program.

The human physiology study is led by Edward Keefer, a neuroscientist and electrophysiologist who leads Texas-based Nerves Incorporated, and Dr. Jonathan Cheng at the University of Texas Southwestern Medical Center.

In collaboration with Yang’s and Associate Professor Qi Zhao’s labs at the University of Minnesota, the team collected large-scale human nerve data and is one of the first to implement deep learning neural decoders in a portable platform for clinical neuroprosthetic applications.

That effort aims to improve the lives of millions of amputees around the world. More than a million people lose a limb to amputation every year. That’s one every 30 seconds.

Prosthetic limbs have advanced fast over the past few decades, becoming stronger, lighter and more comfortable. But neural decoders, which decode movement intent from nerve data, promise a dramatic leap forward.

With just a few hours of training, the system allowed Findley to swiftly, accurately and intuitively move the fingers on a portable prosthetic hand.

“It’s just like if I want to reach out and pick up something, I just reach out and pick up something,” reported Findley.

The key, it turns out, is the same kind of GPU-accelerated deep learning that’s now widely used for everything from online shopping to speech and voice recognition.

Teamwork

For amputees, even though their hand is long gone, parts of the system that controlled the missing hand remain.

Every time the amputee imagines grabbing, say, a cup of coffee with a lost hand, those thoughts are still accessible in the peripheral nerves once connected to the amputated body part.

To capture those thoughts, Dr. Cheng at UTSW surgically inserted arrays of microscopic electrodes into the residual median and ulnar nerves of the amputee’s forearm.

These electrodes, with carbon nanotube contacts, are designed by Keefer to detect the electrical signals from the peripheral nerve.

Dr. Yang’s lab designed a high-precision neural chip to acquire the tiny signals recorded by the electrodes from the residual nerves of the amputees.

Dr. Zhao’s lab then developed machine learning algorithms that decode neural signals into hand controls.

GPU-Accelerated Neural Network

Here’s where deep learning comes in.

Data collected from the patient’s nerve signals, and translated into digital form, are then used to train a neural network that decodes the signals into specific commands for the prosthesis.

It’s a process that takes as little as two hours using a system equipped with a TITAN X or NVIDIA GeForce GTX 1080 Ti GPU. One day users may even be able to train such systems at home, using cloud-based GPUs.

These GPUs accelerate an AI neural decoder that is based on a recurrent neural network and built with the PyTorch deep learning framework.
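To make the idea of a recurrent decoder concrete, here is a minimal PyTorch sketch, not the team’s actual model. The article says only that the decoder is a recurrent neural network, so the GRU layer, the channel count, the window length, the hidden size, the loss and the random stand-in data are all assumptions chosen for illustration.

```python
import torch
from torch import nn

# All sizes below are illustrative assumptions, not values from the paper.
N_CHANNELS = 16   # hypothetical number of nerve-signal feature channels
N_FINGERS = 5     # one output per finger
HIDDEN = 32       # hypothetical hidden-state size


class FingerDecoder(nn.Module):
    """Toy recurrent decoder: a window of nerve features -> finger flexion."""

    def __init__(self):
        super().__init__()
        # A GRU is used here as a stand-in for "a recurrent neural network".
        self.rnn = nn.GRU(N_CHANNELS, HIDDEN, batch_first=True)
        self.head = nn.Linear(HIDDEN, N_FINGERS)

    def forward(self, x):
        # x: (batch, time, channels); the final hidden state summarizes the window
        _, h_n = self.rnn(x)
        return torch.sigmoid(self.head(h_n[-1]))  # one value per finger in [0, 1]


model = FingerDecoder()
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)
loss_fn = nn.MSELoss()

# Random stand-in data: 8 windows of 50 time steps each.
features = torch.randn(8, 50, N_CHANNELS)
targets = torch.rand(8, N_FINGERS)

# One training step (real training would loop over recorded nerve data).
optimizer.zero_grad()
loss = loss_fn(model(features), targets)
loss.backward()
optimizer.step()

# Inference on a single new window, as a deployed decoder would do.
model.eval()
with torch.no_grad():
    flexion = model(torch.randn(1, 50, N_CHANNELS))
print(flexion.shape)  # torch.Size([1, 5])
```

In a setup like the one described, the training loop would run on a desktop GPU, and only the final inference step would need to run on the portable device.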

Use of such neural networks has exploded over the past decade, giving computer scientists the ability to train systems for a vast array of tasks, from image and speech recognition to autonomous vehicles, too complex to be tackled with traditional hand-coding.

The challenge is finding hardware powerful enough to swiftly run this neural decoder, a process known as inference, and power-efficient enough to be fully portable.

So the team turned to the Jetson Nano, whose CUDA cores provide full support for popular deep learning libraries such as TensorFlow, PyTorch and Caffe.

“This offers the most appropriate tradeoff among power and performance for our neural decoder implementation,” Nguyen explained.

Deploying this trained neural network on the powerful, credit card-sized Jetson Nano resulted in a portable, self-contained neuroprosthetic hand that gives users real-time control of individual finger movements.

Using it, Findley demonstrated both high-accuracy and low-latency control of individual finger movements in various laboratory and real-world environments.

The next step is a wireless and implantable system, so users can slip on a portable prosthetic device when needed, without any wires protruding from their body.

Nguyen sees robust, portable AI systems — able to understand and react to the human body — augmenting a host of medical devices coming in the near future.

The technology developed by the team to create AI-enabled neural interfaces is being licensed by Fasikl Incorporated, a startup sprung from Yang’s lab.

The goal is to pioneer neuromodulation systems for use by amputees and patients with neurological diseases, as well as able-bodied individuals who want to control robots or devices by thinking about it.

“When we get the system approved for nonmedical applications, I intend to be the first person to have it implanted,” Keefer said. “The devices you could control simply by thinking: drones, your keyboard, remote manipulators — it’s the next step in evolution.”