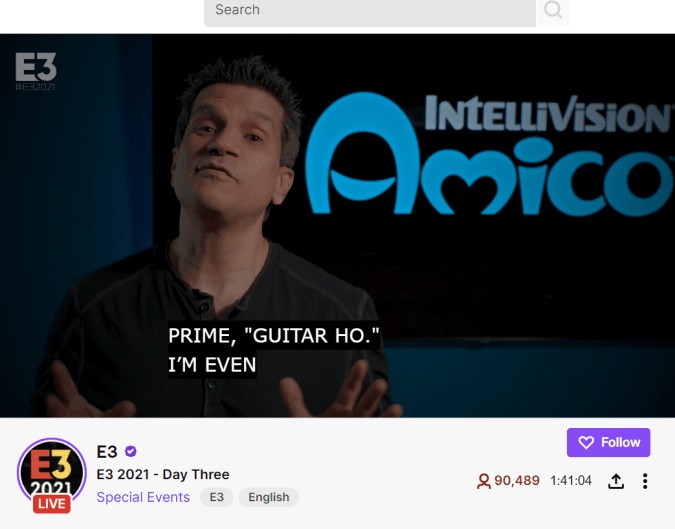

E3 is in full swing this week, drawing tens of thousands of live viewers to its livestreamed gaming announcements. There are plenty of fun trailers and exciting teasers to check out, but if you're relying on the feed's closed captions to make sense of what's being unveiled today, you might be very confused.

Try comprehending this, for example: “I’ve seen greatest things like NY Hawk Pro Star, MROID Prime, ‘Guitar Ho.’” or “In fact, I own theuinness rld record for the person who has worked on… inheir lifetime. St video games motr is very proud.”

You might be able to work out the gist of what's being said by reading the complete (albeit error-ridden) sentences in this article. Now imagine those same words flying by in real time as the captions try to keep pace with a live speaker. It's a lot harder to figure out.

These weren't the only garbled captions I encountered on E3's livestream, but listing them all would make this article endless; it was that bad. Feel free to scroll through this gallery of screenshots for more examples from the third day of the show (captioning appeared largely normal over the weekend). The strange captions were showing up on both Twitch and YouTube today, which makes it more likely that the issue originated on the end of E3's organizer, the Entertainment Software Association (ESA).

Gallery: E3 livestream closed caption fails | 23 Photos

To figure out what's going on, I spoke with Dennis Scarna, senior manager of streaming tech at Verizon Media Studios (Engadget's parent company). He believes there are a few possible explanations. "Garbled caption data can be attributed to a problem in the data stream/signal flow, poor audio quality or steno errors," he said.

The ESA told Engadget that it has hired trained staff to manually transcribe its feed live. In general, human captioners receive a feed of the livestream they need to transcribe and are given an encoder box to type the captions into. Their words are then fed back into the organization's streaming infrastructure as embedded data and distributed to various platforms, such as Twitch and YouTube.

Anyone who has tried taking notes during a fast-talking professor's lecture will know how difficult it is to transcribe any event live. Even the best stenographers struggle to keep up with a conversation, especially when there's cross-talk involved. But the way E3's closed captions were failing looked less like a human struggling to keep up with the stream and more like a technical fault. As Scarna theorized, the "data could still be corrupt even if it's manual."

Since live transcription is incredibly challenging, some mistakes are unavoidable. But large chunks of E3's streams appeared to be pre-recorded, which means they could have been captioned ahead of time. We asked the ESA how much of its livestream content was pre-recorded, and the association said the proportion varies from broadcast to broadcast and that the majority of its content is either live or delivered to it as live for inclusion in the broadcast.


We also asked why pre-recorded segments weren't captioned in advance, and the ESA said it had decided to supply captioning for the entire program, covering not only its own produced videos but its partners' videos as well. Because it did not receive scripts ahead of time, however, its live captioners were transcribing what was being said with no advance notice of what to expect.

The ESA also said it has sign language interpreters available upon request (although it’s unclear how a viewer can request a sign language interpreter mid-stream), and has detailed its accessibility efforts on its website.

Engadget editors watched the E3 Day 3 livestream nonstop today, and until around 5pm ET, the closed captioning bordered on incomprehensible (as in the screenshots in our gallery). Engadget reached out to the ESA around 1pm ET to ask about the issue, and we received answers around the time the captions improved, which suggests the ESA was made aware of the problem and may have been working on a fix. As of this writing, the captions are markedly better. We're still awaiting clarification from the ESA on what went wrong and what was done to fix it.

It's unlikely that AI-generated closed captions would have helped. While the technology is improving, automated captions are still imperfect, and many broadcasters prefer to rely on human stenographers. And if the issue was corrupt data, it would have hampered AI just as much. Accurate transcriptions are a crucial part of making content more inclusive, especially for the deaf and hard-of-hearing community. People with disabilities play games, too, and mistakes like this one only further show how often people with different needs are left out of the conversation. The industry has made strides in improving the inclusivity of its tech products, but it's 2021, and we need to be doing better than this.
