Written by Chris Duckett, APAC Editor

Chris started his journalistic adventure in 2006 as the Editor of Builder AU after originally joining CBS as a programmer. After a Canadian sojourn, he returned in 2011 as the Editor of TechRepublic Australia, and is now the Australian Editor of ZDNet.

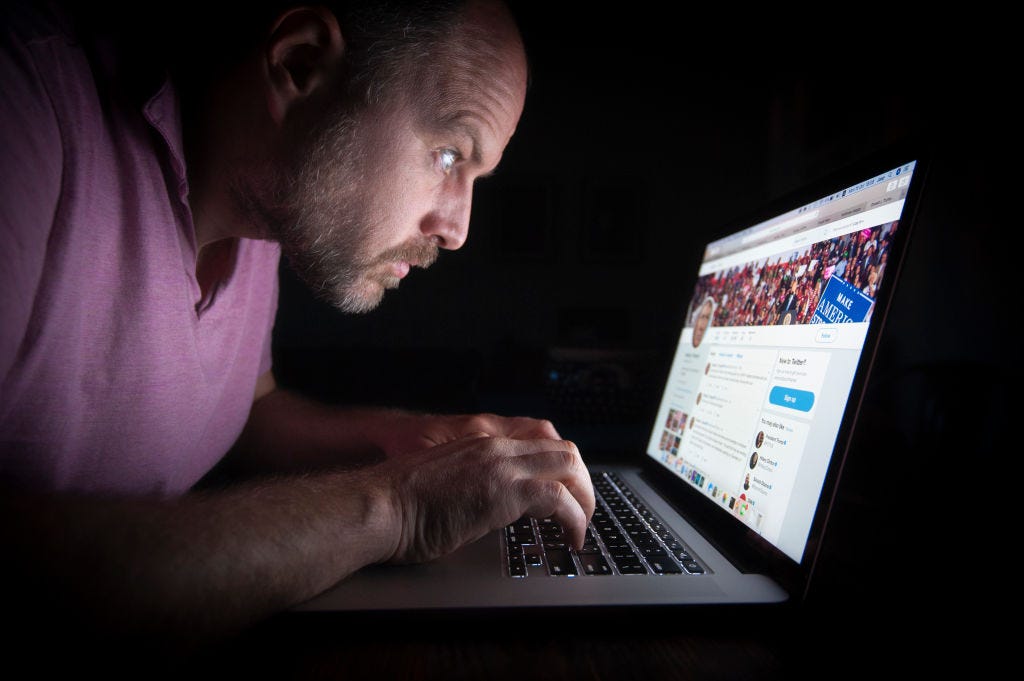

Image: Getty Images

Much like how car manufacturers had to be forced to implement safety features such as seat belts, Australian eSafety Commissioner Julie Inman Grant believes social platforms and tech giants need to be guided by international standards.

“What we’re saying is this era of technological exceptionalism has got to end,” Inman Grant said on a panel at the World Economic Forum on Monday.

“We’ve got food safety standards, we’ve got consumer protection laws, we need the companies assessing their risks and then building the potential protections in as a forethought, rather than an afterthought … embedding those digital seatbelts and erecting those digital guardrails.”

As the world hurtles towards a future that could include augmented reality, metaverses, and other different realities, Inman Grant said such experiences could be supercharged, and so could the harms users suffer in those environments.

“If we don’t learn the lessons of the web 2.0 world, and start designing for the governance and safety by design, and security and privacy for the metaverse world — I mean, what could possibly go wrong with full sensory haptic suits, hyper-realistic experiences, and teledildonics all coming together in the metaverse?” the commissioner said.

“If there’s no accountability and no transparency, we’re kind of ignoring that human malfeasance will always exist, and so, how are we going to remediate harm?”

Taking a wider view, Inman Grant said as the world gets more polarised and binary, a new balancing of rights may occur.

“I think we’re going to have to think about a recalibration of a whole range of human rights that are playing out online — from freedom of speech, to the freedom to be free from online violence, or the right of data protection, to the right to child dignity.”

Inman Grant earlier told the forum that freedom of speech does not equate to a total free-for-all, and that her agency had seen success in getting harmful content taken down.

“Just this week, I issued about AU$4.5 million to a number of sites mostly based in the United States that are hosting the Buffalo manifesto and the gore material.”

The eSafety office gained the ability last year to issue takedown notices backed by civil penalties of up to AU$550,000 for companies and AU$111,000 for individuals.

Executive director and co-founder of Access Now Brett Solomon said there was a chance a “state-centric online policing framework” such as the eSafety office was not creating a safer internet or world, and could set a dangerous precedent for less liberal nations.

“What [esafety] is engaged in — this is a very live experiment on society in real time. And how do we actually know the results?” he said.

“How do we know that our communities are safer as a result of this massive, legislative and regulatory model that’s sending a message to the rest of the world, there’s a big risk here that maybe it’s not actually working.”

Inman Grant retorted that the agency has helped thousands of people who would otherwise have been unable to get content removed, because they could not bridge the power gap between themselves and the tech giants and social platforms.

Finnish Minister of Transport and Communications Timo Harakka said it was better that any adjustment of rights be done openly and democratically, rather than allowing tech giants to impose decisions themselves. Harakka cited the example of the social platforms eventually getting around to removing former US President Donald Trump from their services.

“Twitter and Facebook never saw problem, suddenly they shut down Trump’s Twitter account. So there was a problem but we never got to the real point: What exactly was the policy there?” he said.

Harakka said it was “very, very dangerous” that the algorithms used on social platforms have no transparency.

“For instance, as soon as the war in Ukraine and the Russian invasion or attacks started, the second most recommended YouTube video was ‘Why West is culpable of this attack to Ukraine’,” he said.

“So what was this algorithm about? So it’s promoting this binary world view, promoting aggression, and these algorithms are in many ways something that need [investigation] while taking care of free speech.”