The most detailed single embroidery ever to feature in a Rolls-Royce motor car has been designed and created at the Home of Rolls-Royce in Goodwood, West Sussex. A Peregrine Falcon, the fastest bird in the world, famed for its power and speed, has been the subject of close study by the marque’s talented Bespoke Design team.… Continue reading @BMW: FALCON WRAITH FEATURES MOST DETAILED ROLLS-ROYCE EMBROIDERY EVER

Category: Official Press Release

@Hyundai: Transforming How We Interact with Our Cars : Natural Language Speech Recognition

The era of searching for navigation destinations by voice while driving has arrived. How was Hyundai Motor Group’s first natural language voice recognition service, introduced in the 8th-generation Sonata, developed? Talk to your car just like you would talk to a friend! The 8th-generation SONATA is equipped with natural language processing, an HMG first.… Continue reading @Hyundai: Transforming How We Interact with Our Cars : Natural Language Speech Recognition

@Hyundai: Updating Your Car Over the Air

Over-the-air update, which updates software and data on the vehicle system without having to visit a service center or connect the vehicle to a PC, has arrived for HMG vehicles.… Continue reading @Hyundai: Updating Your Car Over the Air

Abrupt Stop Detection

Human drivers confront and handle an incredible variety of situations and scenarios (terrain, roadway types, traffic conditions, weather conditions), all of which autonomous vehicle technology must navigate both safely and efficiently. These are edge cases, and they occur with surprising frequency. To achieve advanced levels of autonomy or breakthrough ADAS features, these edge cases must be addressed. In this series, we explore common, real-world scenarios that are difficult for today’s conventional perception solutions to handle reliably. We then describe how AEye’s software-definable iDAR™ (Intelligent Detection and Ranging) successfully perceives and responds to these challenges, improving overall safety.


Challenge: A Child Runs into the Street Chasing a Ball

A vehicle equipped with an advanced driver assistance system (ADAS) is cruising down a leafy residential street at 25 mph on a sunny day, with a second vehicle following behind. Its driver is distracted by the radio. Suddenly, a small object enters the road from the side. At that moment, the vehicle’s perception system must make several assessments before the vehicle path controls can react. What is the object, and is it a threat? Is it a ball or something else? More importantly, is a child in pursuit? Each of these scenarios requires a unique response. It’s imperative to brake or swerve for the child. However, braking hard for a lone ball is unnecessary and, with a second vehicle following behind, even dangerous.

How Current Solutions Fall Short

According to a recent study by AAA, today’s ADAS have great difficulty recognizing these threats or reacting appropriately. Depending on road conditions, their passive sensors may fail to detect the ball and may not register a child until it’s too late. Conversely, systems that are biased toward braking will constantly slam on the brakes for every soft target in the street, creating a nuisance or even causing accidents.

Camera. Camera performance depends on a combination of image quality, field of view, and perception training. While all three are important, perception training is especially relevant here. Cameras are limited when it comes to interpreting unique environments because, to a camera, everything is just a light value. Understanding any combination of pixels requires AI, and AI can’t identify what it hasn’t seen. For the perception system to correctly identify a child chasing a ball, it must be trained on every possible permutation of this scenario, including balls of varying colors, materials, and sizes, as well as children of different sizes in various clothing. Moreover, the system would need to be trained on children appearing in every possible way: approaching the vehicle from behind a parked car, with just an arm protruding, and so on. Street conditions would need to be accounted for, too, such as streets with and without shade, and sun glare at different angles. Perception training for every possible scenario may be achievable, but it is an incredibly costly and time-consuming process.
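
To make the scale of that training burden concrete, here is a minimal sketch that counts scenario permutations as a Cartesian product. The factor names and their levels are hypothetical illustrations, far coarser than any real training set; the point is only that the count multiplies with every factor added.

```python
from itertools import product
from math import prod

# Hypothetical scenario factors; each list holds a few illustrative levels only.
SCENARIO_FACTORS = {
    "ball_color": ["red", "blue", "white", "yellow"],
    "ball_material": ["rubber", "leather", "foam"],
    "ball_size": ["small", "medium", "large"],
    "child_size": ["toddler", "young child", "older child"],
    "clothing": ["light", "dark", "patterned"],
    "entry": ["open road", "behind parked car", "arm protruding"],
    "lighting": ["full sun", "shade", "low-angle glare"],
}


def count_permutations(factors: dict[str, list[str]]) -> int:
    """Size of the Cartesian product of all factor levels."""
    return prod(len(levels) for levels in factors.values())


def enumerate_scenarios(factors: dict[str, list[str]]):
    """Lazily yield each scenario as a {factor: level} dict."""
    keys = list(factors)
    for combo in product(*(factors[k] for k in keys)):
        yield dict(zip(keys, combo))


if __name__ == "__main__":
    print(count_permutations(SCENARIO_FACTORS))  # 4 * 3**6 = 2916
```

Adding just one more three-level factor, such as road surface, would triple the count to 8,748.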

Radar. Radar’s basic flaw is that its angular resolution is limited to a few degrees. When radar picks up an object, it provides the perception system with only a few detection points, enough to indicate a general blob in the area. Moreover, an object’s size, shape, and material influence its detectability. Radar struggles with soft objects, so the signature of a rubber or leather ball would be close to nothing. While radar would eventually detect the child, there would simply not be enough data or time for the system to classify it and react.
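
The effect of a few degrees of angular resolution can be checked with simple geometry: at range R, one resolution cell of angular width θ spans roughly 2R·tan(θ/2) laterally. The sketch below assumes a 2° radar and a 0.1° comparison sensor at 30 m, with the child about 0.5 m to the side of the ball; these figures are illustrative, not specifications of any product, and the check deliberately ignores that real radars can also separate targets by range or Doppler.

```python
import math


def cross_range_cell(range_m: float, angular_res_deg: float) -> float:
    """Lateral width (m) covered by one angular resolution cell at range_m."""
    half_angle = math.radians(angular_res_deg) / 2.0
    return 2.0 * range_m * math.tan(half_angle)


def can_separate(lateral_gap_m: float, range_m: float, angular_res_deg: float) -> bool:
    """True if two targets at the same range fall in different angular cells.

    Simplification: only angle is considered; range and Doppler separation are ignored.
    """
    return lateral_gap_m > cross_range_cell(range_m, angular_res_deg)


if __name__ == "__main__":
    # Assumed scene: 30 m ahead, child about 0.5 m to the side of the ball.
    for res in (2.0, 0.1):
        cell = cross_range_cell(30.0, res)
        print(f"{res} deg -> cell {cell:.3f} m, separable: {can_separate(0.5, 30.0, res)}")
```

At 30 m a 2° cell is about 1.05 m wide, so a ball and a child half a meter apart would land in the same cell under these assumptions.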

Camera + Radar. A system that combines radar with a camera would have difficulty assessing this situation quickly enough to respond correctly. Too many factors could degrade its performance. The perception system would need to be trained on this precise scenario to classify exactly what it was “seeing.” And the radar would need to detect the child early enough, at a wide angle, and possibly from behind parked vehicles (which produce strong surrounding radar reflections), predict the child’s path, and act. In addition, radar may not have sufficient resolution to distinguish between the child and the ball.

LiDAR. Conventional LiDAR’s greatest value in this scenario is that it provides automatic depth measurement for the ball and the child. It can determine, to within a few centimeters, how far each is from the vehicle. However, today’s LiDAR systems are unable to ensure vehicle safety because they don’t gather important information, such as shape, velocity, and trajectory, fast enough. This is because conventional LiDAR systems are passive sensors that scan everything uniformly in a fixed pattern and assign every detection equal priority. As a result, they cannot prioritize and track moving objects, like a child and a ball, over the background environment, like parked cars, the sky, and trees.
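
The depth measurement comes from time of flight: a pulse travels to the target and back, so range is d = c·t/2. The sketch below is plain physics rather than any vendor’s code; it also inverts that relation to show how tightly the echo must be timed for centimeter-level precision.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_to_range(round_trip_s: float) -> float:
    """Range (m) from a round-trip time of flight, halved because the pulse goes out and back."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def timing_precision_for(range_precision_m: float) -> float:
    """Round-trip timing resolution (s) needed to resolve range_precision_m."""
    return 2.0 * range_precision_m / SPEED_OF_LIGHT_M_S


if __name__ == "__main__":
    print(f"{tof_to_range(200e-9):.2f} m")               # a 200 ns echo: 29.98 m
    print(f"{timing_precision_for(0.03) * 1e9:.2f} ns")  # 3 cm needs about 0.20 ns
```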

Successfully Resolving the Challenge with iDAR

AEye’s iDAR solves this challenge because it can prioritize how it gathers information and thereby understand an object’s context. As soon as an object moves into the road, a single LiDAR detection will set the perception system into action. First, iDAR will cue the camera to learn about the object’s shape and color. In addition, iDAR will define a dense Dynamic Region of Interest (ROI) on the ball. The LiDAR will then interrogate the object, scheduling a rapid series of shots to generate a dense pixel grid of the ROI. This dataset is rich enough to start applying perception algorithms for classification, which will inform and cue further interrogations.
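
The idea of a dense ROI interrogated ahead of the background scan can be sketched as a shot grid pushed to the front of a queue. This is a hypothetical illustration, not AEye’s API: `Shot`, `roi_grid`, and `schedule` are invented names, and the ROI size (±1° by ±0.5°) and 0.1° step are assumed values.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Shot:
    azimuth_deg: float
    elevation_deg: float


def roi_grid(center_az: float, center_el: float,
             half_w: float, half_h: float, step: float) -> list[Shot]:
    """Dense rectangular grid of shots centered on a single detection."""
    n_az = int(round(2 * half_w / step)) + 1
    n_el = int(round(2 * half_h / step)) + 1
    return [
        Shot(round(center_az - half_w + j * step, 6),
             round(center_el - half_h + i * step, 6))
        for i in range(n_el)
        for j in range(n_az)
    ]


def schedule(background: deque, roi_shots: list[Shot]) -> deque:
    """Queue ROI shots first so they fire before the background scan resumes."""
    queue = deque(roi_shots)
    queue.extend(background)
    return queue


if __name__ == "__main__":
    # Assumed detection of the ball at azimuth 12 deg, elevation -2 deg.
    dense = roi_grid(12.0, -2.0, 1.0, 0.5, 0.1)
    coarse = roi_grid(12.0, -2.0, 1.0, 0.5, 0.5)
    print(len(dense), len(coarse))  # 231 vs 15 shots over the same region
```

The contrast between 231 and 15 shots over the same patch is the point: a fixed uniform pattern spends the same budget on the sky as on the ball.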

Once the ball is classified, the system’s algorithms instruct its intelligent sensors to anticipate something in pursuit. At that point, the LiDAR will schedule another rapid series of shots on the path behind the ball, generating another pixel grid to search for a child. iDAR has a unique ability to intelligently survey the environment, focus on objects, identify them, and make rapid decisions based on their context.
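
One simple way to choose where to look for a pursuer is to step back along the ball’s direction of travel, toward where it came from. The sketch below assumes that heuristic, a 2 m look-back distance, and a vehicle frame with x forward and y positive to the left; none of these details come from AEye.

```python
import math

Point = tuple[float, float]


def pursuit_search_center(ball_xy: Point, ball_vel_xy: Point,
                          lookback_m: float = 2.0) -> Point:
    """Point lookback_m behind the ball, back along the direction it came from.

    Vehicle frame, meters: x forward, y positive to the left.
    """
    vx, vy = ball_vel_xy
    speed = math.hypot(vx, vy)
    if speed == 0.0:
        # No direction of travel to reverse; search around the ball itself.
        return ball_xy
    ux, uy = vx / speed, vy / speed
    return (ball_xy[0] - ux * lookback_m, ball_xy[1] - uy * lookback_m)


if __name__ == "__main__":
    # Ball 20 m ahead, 1 m left of center, rolling rightward at 3 m/s.
    print(pursuit_search_center((20.0, 1.0), (0.0, -3.0)))  # (20.0, 3.0)
```

The returned point would then serve as the center of the next ROI, two meters back toward the curb the ball rolled from.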

Software Components

Computer Vision. iDAR is designed with computer vision, creating a smarter, more focused LiDAR point cloud that mimics the way humans perceive the environment. To effectively “see” the ball and the child, iDAR combines the camera’s 2D pixels with the LiDAR’s 3D voxels to create Dynamic Vixels. This combination helps the AI refine the LiDAR point clouds around the ball and the child, effectively eliminating all the irrelevant points and leaving only their edges.
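
Pairing a 3D point with a 2D pixel is, at its simplest, a pinhole-camera projection. The sketch below assumes the LiDAR points are already transformed into the camera frame (x right, y down, z forward), no lens distortion, and known intrinsics `fx, fy, cx, cy`. The `Vixel` record is a hypothetical stand-in, not AEye’s actual Dynamic Vixel format.

```python
from typing import NamedTuple

RGB = tuple[int, int, int]


class Vixel(NamedTuple):
    """A LiDAR point paired with the camera pixel it projects onto (illustrative)."""
    x: float
    y: float
    z: float
    u: int
    v: int
    rgb: RGB


def fuse(points_cam, image, fx: float, fy: float, cx: float, cy: float) -> list[Vixel]:
    """Project camera-frame points through a pinhole model and attach pixel colors.

    points_cam: iterable of (x, y, z) in meters.
    image: list of rows, each a list of RGB tuples, indexed image[v][u].
    """
    height = len(image)
    width = len(image[0]) if height else 0
    fused = []
    for x, y, z in points_cam:
        if z <= 0:
            continue  # point is behind the camera
        u = int(round(fx * x / z + cx))
        v = int(round(fy * y / z + cy))
        if 0 <= u < width and 0 <= v < height:
            fused.append(Vixel(x, y, z, u, v, image[v][u]))
    return fused
```

Points that fall outside the image or behind the camera are dropped, which is one crude way of discarding points the camera cannot corroborate.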

Cueing. A single LiDAR detection on the ball sets the first cue into motion. Immediately, the sensor flags the region where the ball appears, cueing the LiDAR to focus a Dynamic ROI on the ball. Cueing generates a dataset rich enough to apply perception algorithms for classification. If the camera lacks data (due to lighting conditions, etc.), the LiDAR will cue itself to increase the point density around the ROI. This enables it to gather enough data to classify the object and determine whether it’s relevant.
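
The self-cueing fallback can be pictured as a rule that tightens shot spacing when the camera is not contributing. The rule below is hypothetical: the camera confidence score, the 0.6 threshold, and the 0.2° and 0.05° spacings are assumptions chosen for illustration, not iDAR parameters.

```python
def roi_step_deg(camera_confidence: float,
                 base_step: float = 0.2,
                 min_step: float = 0.05,
                 threshold: float = 0.6) -> float:
    """Angular spacing between ROI shots; smaller spacing means denser points.

    At or above the confidence threshold, the base spacing is used. Below it,
    spacing shrinks in proportion to confidence, but never below min_step.
    """
    if not 0.0 <= camera_confidence <= 1.0:
        raise ValueError("camera_confidence must be in [0, 1]")
    if camera_confidence >= threshold:
        return base_step
    return max(min_step, base_step * camera_confidence / threshold)


def points_per_sq_deg(step_deg: float) -> float:
    """Shot density for a square grid with the given spacing."""
    return 1.0 / (step_deg * step_deg)


if __name__ == "__main__":
    for conf in (0.9, 0.3, 0.0):
        step = roi_step_deg(conf)
        print(conf, round(step, 3), round(points_per_sq_deg(step)))
```

Halving the spacing quadruples the point density, so a modest change in spacing buys a large increase in data on the object.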

Feedback Loops. Once the ball is detected, an algorithm generates a feedback loop that triggers the sensors to focus another ROI immediately behind the ball and to the side of the road to capture anything in pursuit, enabling faster and more accurate classification. This starts another cue. With that data, the system can classify whatever is behind the ball and determine its true velocity, so it can decide whether to brake or swerve to avoid a collision.
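
Once successive ROI measurements of the child are available, velocity follows from a finite difference, and a constant-velocity check can decide whether the child will be in the lane when the vehicle arrives. This is a simplified sketch under stated assumptions: the 1.5 m half-lane width, 0.5 s margin, and 4 s horizon are invented, and it only decides whether to intervene, not whether braking or swerving is the better maneuver.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Observation:
    t: float  # timestamp, s
    x: float  # distance ahead of the ego vehicle, m
    y: float  # lateral offset from lane center, m (positive = left)


def velocity(prev: Observation, curr: Observation) -> tuple[float, float]:
    """Relative velocity (m/s) in the vehicle frame, by finite difference."""
    dt = curr.t - prev.t
    if dt <= 0:
        raise ValueError("observations must be in time order")
    return ((curr.x - prev.x) / dt, (curr.y - prev.y) / dt)


def should_intervene(prev: Observation, curr: Observation, half_lane_m: float = 1.5,
                     margin_s: float = 0.5, horizon_s: float = 4.0) -> bool:
    """Will the object be inside the ego lane when the vehicle reaches it?"""
    vx, vy = velocity(prev, curr)
    if curr.x <= 0 or vx >= 0:
        return False  # alongside or behind the vehicle, or not closing
    time_to_reach = curr.x / -vx
    if time_to_reach > horizon_s:
        return False  # too far away to act on yet
    if abs(curr.y) <= half_lane_m:
        return True  # already in the lane ahead
    if curr.y * vy >= 0:
        return False  # not moving laterally toward the lane
    time_to_enter = (abs(curr.y) - half_lane_m) / abs(vy)
    return time_to_enter <= time_to_reach + margin_s


if __name__ == "__main__":
    # Closing at about 11.2 m/s (roughly 25 mph); child running toward the road at 2 m/s.
    print(should_intervene(Observation(0.0, 20.0, 4.0), Observation(0.1, 18.88, 3.8)))
```

In this example the child would enter the lane in about 1.15 s while the vehicle needs about 1.69 s to arrive, so the check fires; a child standing still on the sidewalk would not trigger it.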

The Value of AEye’s iDAR

LiDAR sensors embedded with AI for intelligent perception are vastly different from those that passively collect data. After detecting and classifying the ball, iDAR will immediately foveate in the direction where the child will most likely enter the frame. This ability to understand the context of a scene enables iDAR to detect the child quickly, calculate the child’s speed of approach, and brake or swerve to avoid a collision. To speed reaction times, each sensor’s data is processed at the edge of the network. Only the most salient data is then sent to the domain controller for advanced analysis and path planning, ensuring optimal safety.
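
Forwarding only the most salient data can be sketched as a threshold plus a top-k cut applied at the sensor. How saliency is scored is not described above, so the score here is simply assumed to be given; `Detection`, `select_for_controller`, and the default `k` and `min_saliency` values are hypothetical.

```python
import heapq
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    label: str
    saliency: float  # assumed relevance score in [0, 1], computed on the sensor


def select_for_controller(detections, k: int = 2, min_saliency: float = 0.5) -> list[Detection]:
    """Keep at most k detections that clear the saliency floor, most salient first."""
    candidates = [d for d in detections if d.saliency >= min_saliency]
    return heapq.nlargest(k, candidates, key=lambda d: d.saliency)


if __name__ == "__main__":
    scene = [Detection("tree", 0.1), Detection("ball", 0.6),
             Detection("child", 0.95), Detection("parked car", 0.3)]
    print([d.label for d in select_for_controller(scene)])  # ['child', 'ball']
```

Filtering before transmission keeps the link to the domain controller free for the objects that matter, which is where the reaction-time benefit would come from.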


@VW Group: SUV Coupé for the e-tron Family: The Audi e-tron Sportback

Elegant, efficient, expressive: the exterior design. The Audi… Continue reading @VW Group: SUV Coupé for the e-tron Family: The Audi e-tron Sportback

Bus passes just became part of your employee benefits

“Commuting as benefits” is becoming increasingly popular as employers discover the advantages of having workers show up to work on time, rested and less stressed. For some, commuting to work is a quick trip by car or subway. But for most it’s long, tedious and involves multiple modes of transport. The worst part is, we… Continue reading Bus passes just became part of your employee benefits

Media Explores Where 3D Automotive Lidar is Headed with Velodyne CTO Anand Gopalan

November 19, 2019. Real-time 3D lidar is poised to be the third leg of the trifecta of sensor technologies enabling both advanced driver-assistance (ADAS) and autonomous vehicles, writes Ed Brown in a Photonics & Imaging Technology story. Brown spoke with Velodyne Lidar CTO Anand Gopalan about the current state of 3D automotive lidar and where… Continue reading Media Explores Where 3D Automotive Lidar is Headed with Velodyne CTO Anand Gopalan

Lyft: Cleveland Cavaliers and Lyft — Supporting Nonprofits Together

We’re excited to announce that we’re expanding our partnership with the Cleveland Cavaliers and Rocket Mortgage FieldHouse. Now, we’re not only the official rideshare partner of the Cavaliers, we’re working together to support the important work of nonprofit organizations in Northeast Ohio and across the nation. “We’re proud to work with the Cleveland Cavaliers to… Continue reading Lyft: Cleveland Cavaliers and Lyft — Supporting Nonprofits Together

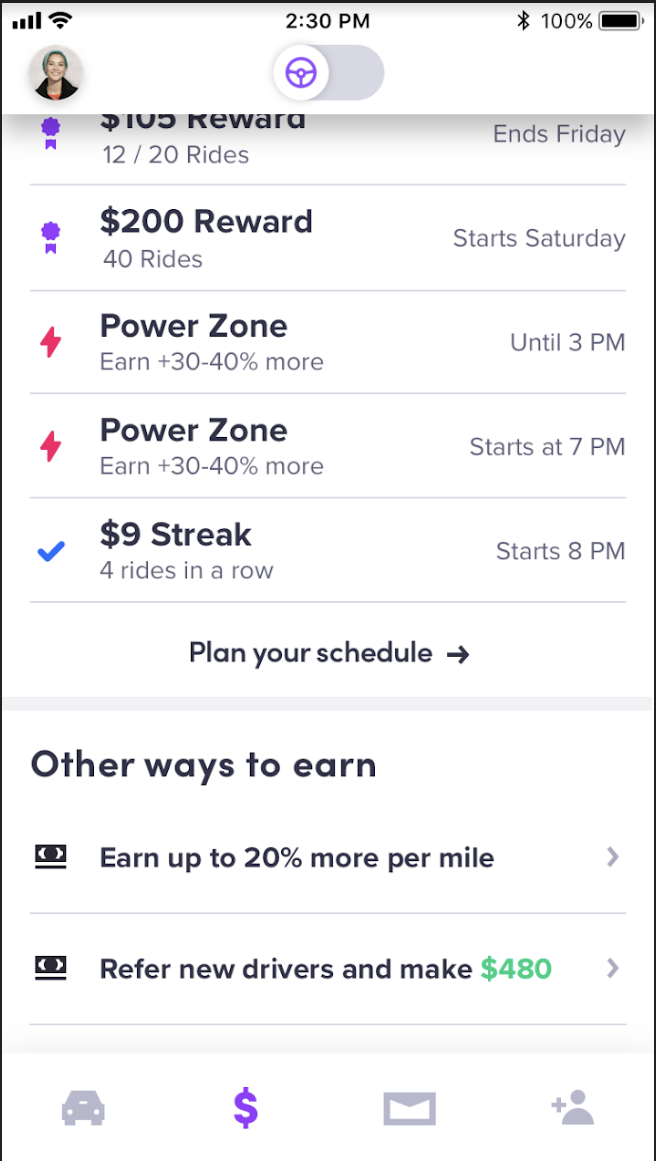

Lyft: Earn More Per Mile for Smart Driving

Safe driving is its own reward. But starting in December, Express Drive renters can earn more for safe driving. Just meet your personalized smart driving and ratings targets (as explained below), and you could earn a rate increase of up to 20% per mile. How It Works: Today you earn a standard Per Mile rate,… Continue reading Lyft: Earn More Per Mile for Smart Driving

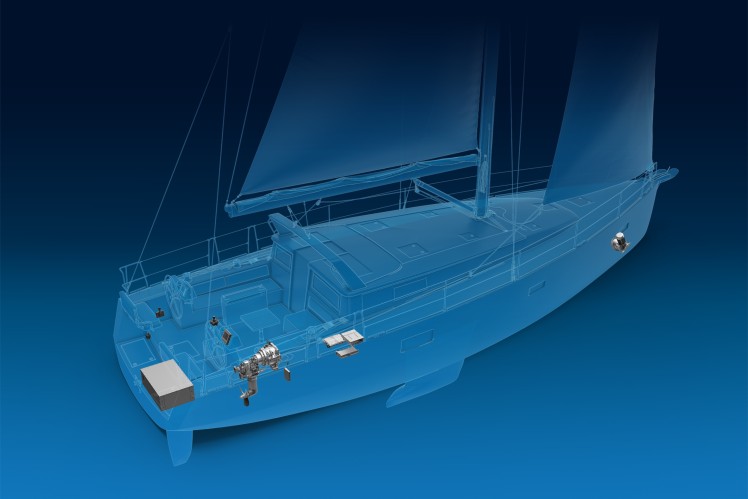

Press: E-volution for Sea Vessels: ZF Develops Fully Electric Propulsion System for Sailing Yachts

Zero noise and zero emissions: fully electric propulsion system makes sailing more environmentally friendly. New technology boosts ZF’s leadership position in sustainable marine propulsion systems. E-drive has proven its worth on the innovation ship since September 2019. Friedrichshafen. ZF is continuing to expand its portfolio of environmentally friendly marine propulsion solutions. The new fully electric propulsion system… Continue reading Press: E-volution for Sea Vessels: ZF Develops Fully Electric Propulsion System for Sailing Yachts