Elon Musk announced today that Tesla will let owners of its electric vehicles equipped with Full Self-Driving (FSD) (Supervised) “text and drive” within “a month or two,” without explaining how the company will get around the laws that clearly prohibit it.

As recently as a few months ago, Musk was again claiming that Tesla would finally deliver its long-promised “unsupervised self-driving” to consumer vehicles by the end of the year – a promise he has made every year for the last six years without ever delivering.

That deadline is now less than two months away.

At Tesla’s shareholders meeting today, Musk updated his timeline – now saying Tesla is a “few months away” from unsupervised FSD – potentially pushing it into 2026.

He also said that Tesla is “almost” ready to allow “texting and driving” on FSD and that he expects the company to enable it within “a month or two.”

However, the CEO didn’t elaborate on how Tesla plans to enable that.

Texting and driving is illegal in most jurisdictions, including almost every US state, and carries significant fines and legal penalties. To “allow that,” Tesla would need to take responsibility for consumer vehicles whenever FSD is driving, which would amount to the previously promised “unsupervised self-driving,” that is, SAE Level 3 to 5 autonomous driving.

There are several legal and regulatory steps Tesla must take to make it happen, and so far, there’s no evidence that the automaker has embarked on that journey.

To date, Tesla has limited itself to pilot ride-hailing services run with its own internal fleets and in-car safety supervisors, where regulations allow.

Musk said that Tesla will “look at the data” before allowing texting and driving.

Tesla has notoriously never released any relevant data regarding the safety of its autonomous driving features.

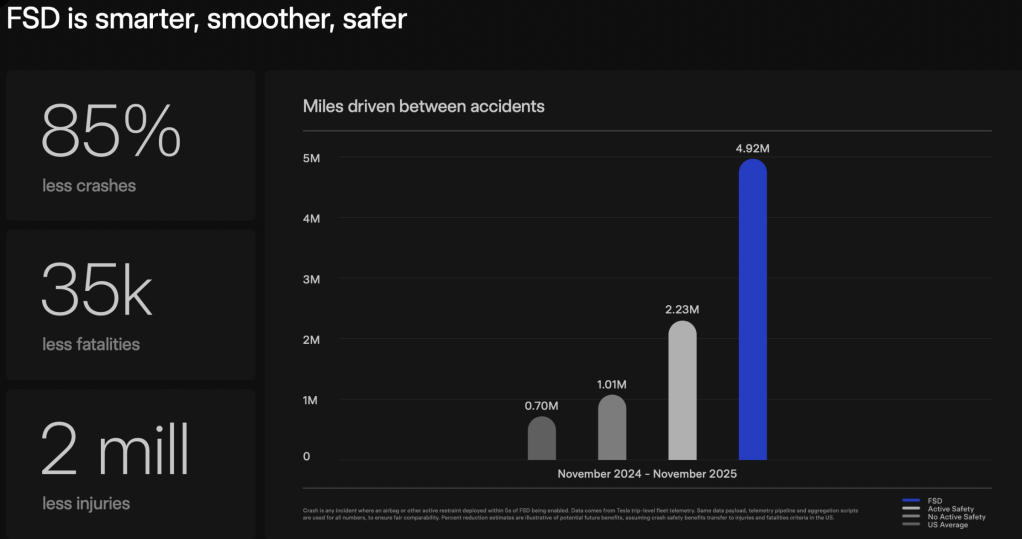

The automaker does publish a quarterly “Autopilot safety report,” which lists the miles driven between crashes for Tesla vehicles with Autopilot features engaged and compares that figure with the miles between crashes for Teslas with the same technology but the features not engaged, as well as with the US average.

There are three major problems with these reports:

- Methodology is self‑reported. Tesla counts only crashes in which an airbag or other restraint deploys; minor bumps are excluded, and neither raw crash counts nor total vehicle miles traveled (VMT) are disclosed.

- Road type bias. Autopilot is mainly used on limited‑access highways, which are already the safest roads per mile driven, while the federal baseline blends all road classes, including city streets, where crashes per mile are more frequent.

- Driver mix & fleet age. Tesla's fleet is relatively new, and its drivers skew higher‑income and tech‑enthusiast; newer vehicles and these demographics typically crash less.

For the first time today, Tesla appears to have separated Autopilot and FSD mileage, which gives us a little more data, but the figures still suffer from many of the same problems listed above.

The main issue is that this data doesn’t show that FSD alone crashes once every 4.92 million miles, but that a human driver supervising FSD crashes once every 4.92 million miles, based on Tesla’s own definition of a crash.

By comparison, official data from Tesla’s Robotaxi program in Austin, which supposedly runs a more advanced version of FSD, shows a crash roughly every 62,500 miles. And that is with a safety supervisor on board who can intervene to prevent more crashes.

Electrek’s Take

Another false promise and false hope to keep Tesla owners and shareholders going for a few more months.

You can’t just “allow texting and driving”; the law prohibits it. Musk must mean that Tesla will officially make FSD an SAE Level 3 or 4 autonomous driving system and take responsibility for it when it is active.

So far in the US, only Mercedes-Benz offers that capability in consumer vehicles, with its SAE Level 3 Drive Pilot system approved on certain stretches of highway in California and Nevada.

I think many things need to happen before then.

First, logically, Tesla should remove the in-car supervisors from its robotaxi service in Austin well before that. Then, it should demonstrate that those vehicles don’t crash every few tens of thousands of miles, as they currently do even with supervisors preventing further crashes.

The only scenario I can see here is that Tesla simply updates its driver monitoring system to stop flagging drivers who use their phones while FSD is engaged.

If that’s the case, it’s about as dumb as it gets and opens Tesla up to more liability.

FTC: We use income-earning auto affiliate links.