DIYRobocars Move-it Hackathon: How to Hack 2 Cars to Make Them Self-driving in 5 Days?

The self-driving hackathon went great. This blog post shares, in detail, some real-world solutions and approaches for car hacking.

Why The Hackathon

Self-driving is a high-barrier industry for general developers and startups, as we realized when we began our self-driving startup, PIX Moving. We hoped to find ways to lower the barrier and make self-driving technology more affordable and available to ordinary developers and hobbyists. Then we found more people who share this vision. One is Bogdan, a co-founder of this event, a hacker and engineer from Europe who wants to build an affordable self-driving car through hacking. Another is Chris Anderson, founder of DIYRobocars, who is building a community in which self-driving hobbyists drive innovation with an open source approach. So the hackathon began. It also became the first DIYRobocars event in mainland China, as we appreciate the approach and spirit of the DIYRobocars community.

Who Participated

We shared our ideas with others and in the communities, and received a lot of positive feedback and responses from talented engineers worldwide who are experienced with self-driving hardware as well as software. They included students from the University of Technology Sydney and the University of Science and Technology at Zewail City, engineers from Shenzhen, China, a developer from India, a software PhD from Singapore and a former employee of a self-driving startup, among others. In the end, 20+ engineers from 12 countries traveled a long way and kickstarted a journey of car hacking in Guiyang, China.

Opening Ceremony, Prof. Yoshiki from Tier IV, which maintains Autoware

Opening Ceremony, Miao Jinghao, Senior Architect of Apollo Auto

Hackathon Mission

1) CAN bus hacking to achieve by-wire control

2) Installation and test run of open source self-driving software on a full-sized car, achieving obstacle avoidance, trajectory tracking and path following with RTK or LiDAR (HD point-cloud map not necessary)

3) Traffic command gesture recognition and control

4) Self-driving setup and test of a 1/16 scale car powered by Raspberry Pi

Some thoughts on the hackathon from Scott, participant and core member of this event:

As I understood it, the fundamental goal of the hackathon is to lower the barrier to beginning development of autonomous cars. The two tasks originally suggested by the organizers are to hack the CAN bus of two cars (provided by them) and to prototype a system for recognizing gestures by traffic cops. Because the number of participants was large relative to these tasks, many (or all) participants and I knew at the beginning that we would need to select additional goals and break into smaller groups.

There are many open problems that must be solved before autonomous cars in urban settings can be realized. As such, there is a rich selection of problems from which we could form additional goals for the hackathon. However, given time constraints, we focused on improving existing tools by working on code and documentation that eventually can be contributed to the upstream repositories. Because we wanted to integrate the results of CAN bus hacking with the classifier for gesture recognition, and because we planned to use Autoware as a quick solution for infrastructure and basic capabilities, we focused on potential contributions to the tools that we used: Autoware (https://github.com/CPFL/Autoware) and comma.ai panda (https://github.com/commaai/panda).

Hardware and Equipment List

The Hacking Process

Most of this part was contributed by participants Adam Shan and Zhou Bolin. Thanks!

Before the event, we discussed on Slack some urgent issues that individual developers face in self-driving car development, and summarized three goals as follows:

1) CAN-BUS hack

2) Implement an on-site test self-driving car with basic tracking and obstacle avoidance capabilities

3) Traffic police gesture detection and recognition

We decided to form groups based on the three goals above. Participants freely chose which group to join according to their skills. Eventually there were three groups: the CAN-BUS hacking group, the Autoware group and the traffic gesture recognition group.

The CAN-BUS hacking group was to hack into the CAN-BUS of the Coffee-Car and the Honda Civic, take control of the throttle, braking and steering of each vehicle, and finally use the twist messages from the Autoware group to control the vehicle.

The Autoware group was to complete the integration and testing of the entire system, including the drivers for the various sensors (in this hackathon we used the VLP-32C LiDAR and cameras), collecting data and building the 3D map of the test site, drawing waypoints, calibrating the camera and LiDAR, testing the system and so on.

The gesture recognition group was to collect a traffic police gesture dataset, train a deep learning model and write the gesture recognition ROS nodes.
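
To make the hand-off between the Autoware group and the CAN-BUS group more concrete: a ROS twist message carries a forward speed and a yaw rate, and the vehicle side has to turn that into a steering angle. The following is only a minimal sketch under the kinematic bicycle model, where tan(steering angle) = wheelbase × yaw rate / speed. The `Twist2D` class is a plain-Python stand-in for the two twist fields that matter here, and the wheelbase and steering limit are hypothetical values, not measurements of the Coffee-Car or the Civic.

```python
import math
from dataclasses import dataclass


@dataclass
class Twist2D:
    """Stand-in for the two fields of a ROS twist used for car control."""
    linear_x: float   # forward speed in m/s
    angular_z: float  # yaw rate in rad/s


def twist_to_steering(twist, wheelbase_m, max_steer_rad):
    """Convert a twist into a front-wheel steering angle (radians).

    Uses the kinematic bicycle model: tan(delta) = L * omega / v.
    """
    if abs(twist.linear_x) < 1e-3:
        # The steering angle is undefined at standstill; keep the wheels straight.
        return 0.0
    delta = math.atan(wheelbase_m * twist.angular_z / twist.linear_x)
    # Clamp to the mechanical steering limit (assumed symmetric).
    return max(-max_steer_rad, min(max_steer_rad, delta))


# Hypothetical numbers: 2.7 m wheelbase, 0.5 rad steering limit.
angle = twist_to_steering(Twist2D(linear_x=5.0, angular_z=0.2), 2.7, 0.5)
print(f"steering angle: {angle:.3f} rad")
```

The speed part of the twist would be handled separately by a throttle and brake controller, which is not shown here.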

Below are the detailed methods and approaches contributed by the joint efforts of the three groups in this hackathon.

1.CAN-Bus Hacking

Modern vehicles all come equipped with a CAN-BUS (Controller Area Network). Instead of running a separate wire from every device in the car back to the battery, the car uses a cleverer system. Each node (e.g. the switch pod that controls the windows or electric door locks) broadcasts a message across the CAN. When the TIPM (Totally Integrated Power Module) detects a valid message, it reacts accordingly, for example by locking the doors or switching on the lights.
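
As a rough illustration of this broadcast model, the sketch below packs and unpacks a classic CAN frame using the 16-byte layout of the Linux SocketCAN `struct can_frame` (32-bit ID, length byte, padding, 8 data bytes). The door-lock ID and the `body_controller` function are hypothetical and only show how a receiving node filters frames by ID. Real IDs differ from car to car.

```python
import struct

# Linux SocketCAN "struct can_frame" layout: 32-bit ID, 1-byte length,
# 3 padding bytes, 8 data bytes.
CAN_FRAME_FMT = "=IB3x8s"

# Hypothetical arbitration ID for a door-lock command, for illustration only.
DOOR_LOCK_ID = 0x2A0


def pack_frame(can_id, data):
    """Serialize one classic CAN frame (at most 8 data bytes)."""
    if len(data) > 8:
        raise ValueError("classic CAN frames carry at most 8 data bytes")
    return struct.pack(CAN_FRAME_FMT, can_id, len(data), data.ljust(8, b"\x00"))


def unpack_frame(raw):
    """Return (can_id, data) with the zero padding stripped."""
    can_id, dlc, data = struct.unpack(CAN_FRAME_FMT, raw)
    return can_id, data[:dlc]


def body_controller(raw):
    """A receiving node sees every frame and reacts only to IDs it knows."""
    can_id, data = unpack_frame(raw)
    if can_id == DOOR_LOCK_ID and data[:1] == b"\x01":
        return "lock doors"
    return None  # frame is meant for some other node: ignore it


frame = pack_frame(DOOR_LOCK_ID, b"\x01")
print(body_controller(frame))
```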

In the Move-it hackathon, the hacking targets were a raw chassis (we called it Coffee-Car) and a Honda Civic, both provided by the organizers. The first requirement is to retrofit a car so that it can be controlled by software. However, automotive companies do not open their CAN-BUS interfaces to individual developers and small teams, and open source CAN control projects (such as OSCC) support only a few vehicles. This is a common problem at present.

The overall approach was the same for both the Coffee-Car and the Honda Civic: read the vehicle’s electronic messages (including steering, throttle, braking, etc.) to understand the vehicle’s message data, and then gain control of the vehicle by analyzing the corresponding message data.

For the 2016 Honda Civic, we took the following steps:

1) Connect to the CAN bus: we needed to connect to one of the car’s two CAN buses. We made a connector to tap in at the car’s camera unit.

2) Understand the CAN data: we looked at the comma.ai openpilot project, which contains useful code for many cars. We found it difficult to build its dependencies, so we extracted the parts that were useful for us and for our cars. Those were:

- The DBC file for the Honda Civic, which we needed to modify slightly to allow the CAN tools library to load it.
- hondacan.py, which contains code to create messages, calculate checksums, etc.
- carstate.py, which contains code to read the state of the car. This was the first file that guided us in the right direction to find the DBC file and the Honda CAN messages.

3) Publish it in ROS: we initially made a ROS node to publish the CAN messages as can_msgs/Frame messages, which can be found here: panda_bridge_ros.py.

4) Control the car: we wrote scripts to test steering and braking commands and tried them with the front of the car lifted, but the messages had no effect. We had been warned that the steering could only apply minimal torque, but we could not make the steering wheel rotate at all. We had also been told that in theory the car cannot be commanded below 12 mph (about 20 km/h), which seems to be true. The two remaining tasks were to understand the steering of the car and to hack the torque sensor on the steering wheel in order to send steering commands.
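
Step 2 above depends on a DBC file, which describes where each signal sits inside a CAN payload and how to scale it into a physical value. The sketch below shows the general idea in plain Python. It is a simplified illustration, not the Honda DBC and not the decoding code from openpilot: the `Signal` fields, the bit numbering (counted from the first payload bit, most significant bit first) and both example signals are assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Signal:
    """Simplified DBC-style signal description (hypothetical layout)."""
    name: str
    start_bit: int    # offset of the signal's MSB, counted from the first payload bit
    length: int       # signal width in bits
    scale: float      # physical = raw * scale + offset
    offset: float
    signed: bool = False


def decode(payload, sig):
    """Extract one signal from a CAN payload and convert it to a physical value."""
    total_bits = len(payload) * 8
    shift = total_bits - sig.start_bit - sig.length
    if shift < 0:
        raise ValueError(f"signal {sig.name} does not fit in the payload")
    raw = (int.from_bytes(payload, "big") >> shift) & ((1 << sig.length) - 1)
    if sig.signed and raw >= 1 << (sig.length - 1):
        raw -= 1 << sig.length  # two's complement sign extension
    return raw * sig.scale + sig.offset


# Two made-up signals sharing one 8-byte frame.
STEER_ANGLE = Signal("STEER_ANGLE", start_bit=0, length=16, scale=0.1, offset=0.0, signed=True)
SPEED = Signal("SPEED", start_bit=16, length=8, scale=1.0, offset=0.0)

payload = bytes([0xFF, 0x9C, 0x32, 0, 0, 0, 0, 0])
print(decode(payload, STEER_ANGLE), decode(payload, SPEED))
```

In practice a DBC-aware library does this work, which is why the DBC file had to be loadable by the CAN tools library in the first place.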

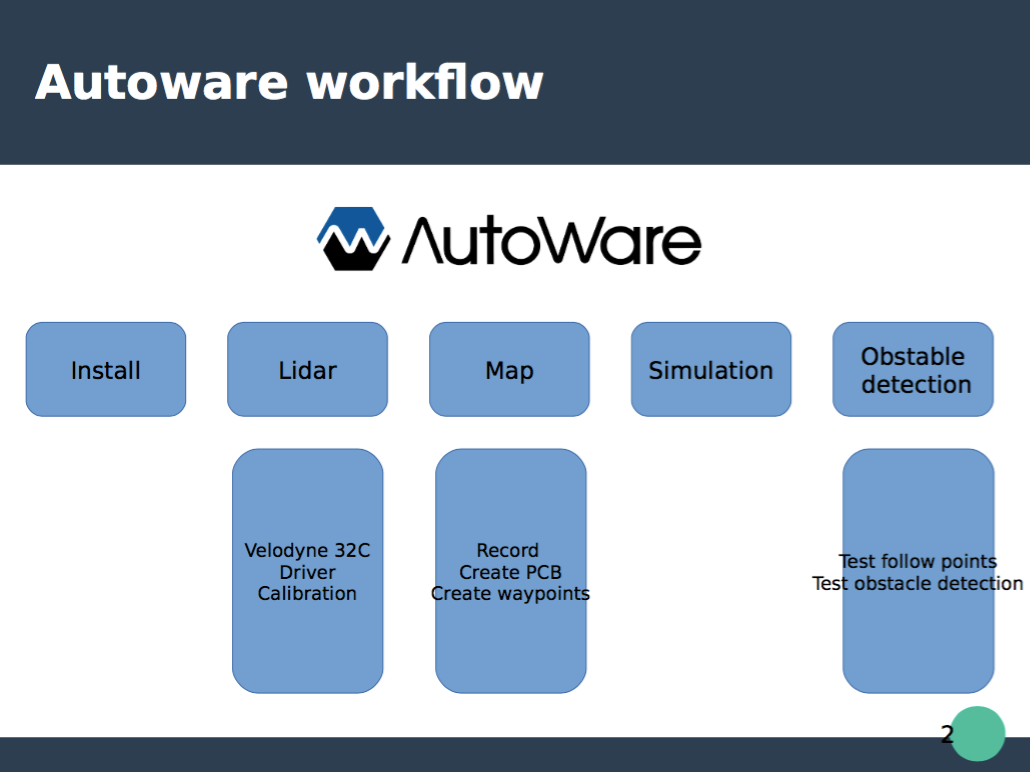

2.Software-Autoware

Two mature open source frameworks are capable of implementing a self-driving car in such a short time:

1) Autoware is open source software for urban autonomous driving, maintained by Tier IV.

2) Apollo Auto is an open source autonomous driving platform with a high-performance, flexible architecture that supports fully autonomous driving capabilities.

We chose Autoware as the top-level software stack for the Move-it hackathon. It provides localization, object detection, driving control and 3D map generation. It is often difficult to analyze the interactions of multiple sensors at once, and Autoware provides tools for efficient analysis and debugging of the system. Logged messages can also be replayed repeatedly, allowing us to debug without the actual sensors.

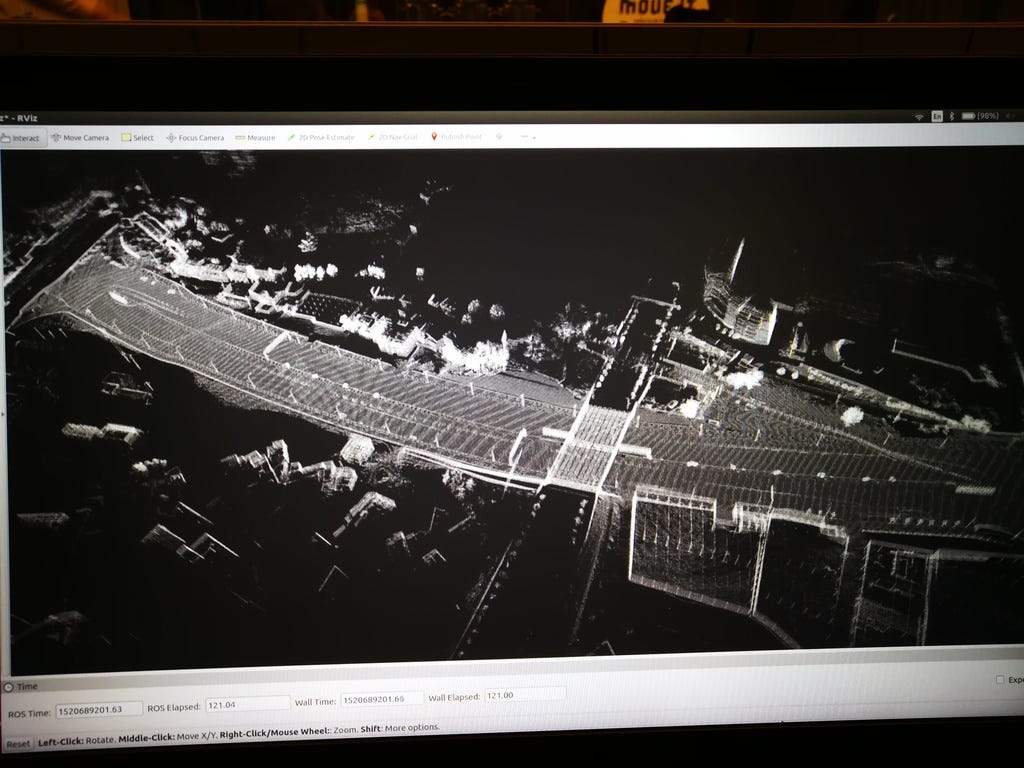

The general approach for this part was to chain together the whole framework of the vehicle, from perception through decision-making to control. The main hardware needed for the entire process is LiDAR. The hardware deployed for Autoware on the Coffee-Car was the 32-channel Velodyne LiDAR, which is the only sensor that Autoware strictly requires. We set up the 32-channel LiDAR to collect point cloud data, built a map with Autoware, and then generated the waypoints for path planning.
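
Once waypoints exist, a path-following controller has to turn the vehicle pose and the waypoint list into a steering command. Autoware ships a pure pursuit node for this. The sketch below is a textbook version of pure pursuit written for illustration, not Autoware’s implementation, and it assumes the waypoints are ordered along the route and lie ahead of the vehicle. The lookahead distance and wheelbase are hypothetical values.

```python
import math


def pure_pursuit_steering(pose, waypoints, lookahead_m, wheelbase_m):
    """Return a steering angle (radians) that heads toward the lookahead waypoint.

    pose is (x, y, yaw) in the map frame; waypoints is a list of (x, y).
    """
    x, y, yaw = pose

    # Pick the first waypoint that is at least one lookahead distance away,
    # falling back to the final waypoint near the end of the route.
    target = waypoints[-1]
    for wx, wy in waypoints:
        if math.hypot(wx - x, wy - y) >= lookahead_m:
            target = (wx, wy)
            break

    # Express the target in the vehicle frame (x forward, y to the left).
    dx, dy = target[0] - x, target[1] - y
    local_x = math.cos(yaw) * dx + math.sin(yaw) * dy
    local_y = -math.sin(yaw) * dx + math.cos(yaw) * dy

    dist_sq = local_x ** 2 + local_y ** 2
    if dist_sq == 0.0:
        return 0.0  # already on the target: keep the wheels straight

    # Curvature of the arc through the vehicle and the target point.
    curvature = 2.0 * local_y / dist_sq
    return math.atan(wheelbase_m * curvature)


route = [(1.0, 0.0), (3.0, 0.5), (6.0, 2.0)]
print(pure_pursuit_steering((0.0, 0.0, 0.0), route, lookahead_m=3.0, wheelbase_m=2.7))
```

A positive result steers left and a negative one steers right, matching the usual ROS convention of counter-clockwise positive yaw.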

3.Gesture Recognition

Based on previous studies, we found that there was no open source traffic police gesture recognition solution yet. The few existing papers did not release their datasets or code to the public. There are roughly two vision-based approaches to detecting traffic police gestures:

1) Perform pose estimation on the traffic police officer to obtain skeleton information, then feed the sequence of skeletons into a model that classifies the gesture sequence.

2) Skip pose estimation and feed the sequence of motion images directly into a model to recognize the gesture.

No matter which approach is adopted, there are two important prerequisites: first, we have to distinguish the traffic police from ordinary pedestrians; second, we need to collect gesture datasets. Therefore, the first task was to collect and prepare the relevant datasets.

For traffic police identification, we wrote a Python script to crawl 10,000 images matching traffic police keywords from the web and used YOLO2 to detect the humans in the images, so that every person in each picture was cropped out and saved separately. Through manual annotation by ourselves and volunteers from a local university, we obtained a dataset for traffic police identification, including more than 10,000 images of Chinese traffic police and more than 10,000 images of pedestrians. With these data, we trained a convolutional neural network to distinguish traffic police from pedestrians.

Since there is no relatively standard public traffic gesture dataset, we had to collect one ourselves. We used six cameras to film more than 180 groups of traffic police gestures performed by more than 20 volunteers from different directions, and also used two LiDARs to record point cloud data. We used a green screen as the background when collecting data so that users can replace the background for data augmentation. We manually tagged the timestamp files and used YOLO2 to extract all the traffic gestures from the video at 30 FPS and save them as images. In the end, we obtained a sequential image dataset of 1,700,000 traffic gesture images.
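
The green-screen background is what makes background replacement cheap: any pixel that is clearly dominated by green can be swapped for a pixel from another scene. The following is a minimal pure-Python sketch of that idea on images stored as nested lists of (R, G, B) tuples. The thresholds are hypothetical and would need tuning for real footage, and a real pipeline would more likely use an image library and work in a color space better suited to keying.

```python
def is_green_screen(pixel, dominance=1.3, min_green=80):
    """Heuristic: green is bright enough and clearly stronger than red and blue."""
    r, g, b = pixel
    return g >= min_green and g > dominance * r and g > dominance * b


def replace_background(frame, background):
    """Return a new image where green-screen pixels are taken from background.

    Both images are lists of rows of (R, G, B) tuples with identical size.
    """
    if len(frame) != len(background) or any(
        len(f_row) != len(b_row) for f_row, b_row in zip(frame, background)
    ):
        raise ValueError("frame and background must have the same dimensions")
    return [
        [bg if is_green_screen(fg) else fg for fg, bg in zip(f_row, b_row)]
        for f_row, b_row in zip(frame, background)
    ]


# Tiny 1x3 example: green pixel, uniform-like reddish pixel, dark pixel.
frame = [[(10, 240, 20), (200, 50, 50), (20, 30, 20)]]
street = [[(90, 90, 90), (91, 91, 91), (92, 92, 92)]]
print(replace_background(frame, street))
```

Running the same gesture frame against many different backgrounds yields many training images from one recording.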

After getting the dataset, we started training the deep learning model. We finally chose to train on images rather than skeletons, because pose estimation is computationally intensive. A deep learning model can take the original image directly as input and let the neural network build an abstract representation by itself. Because of the limited time at the event, we did not manage to train the sequence model, but we used Inception-v4 directly for feature extraction and gesture recognition on single-frame images.

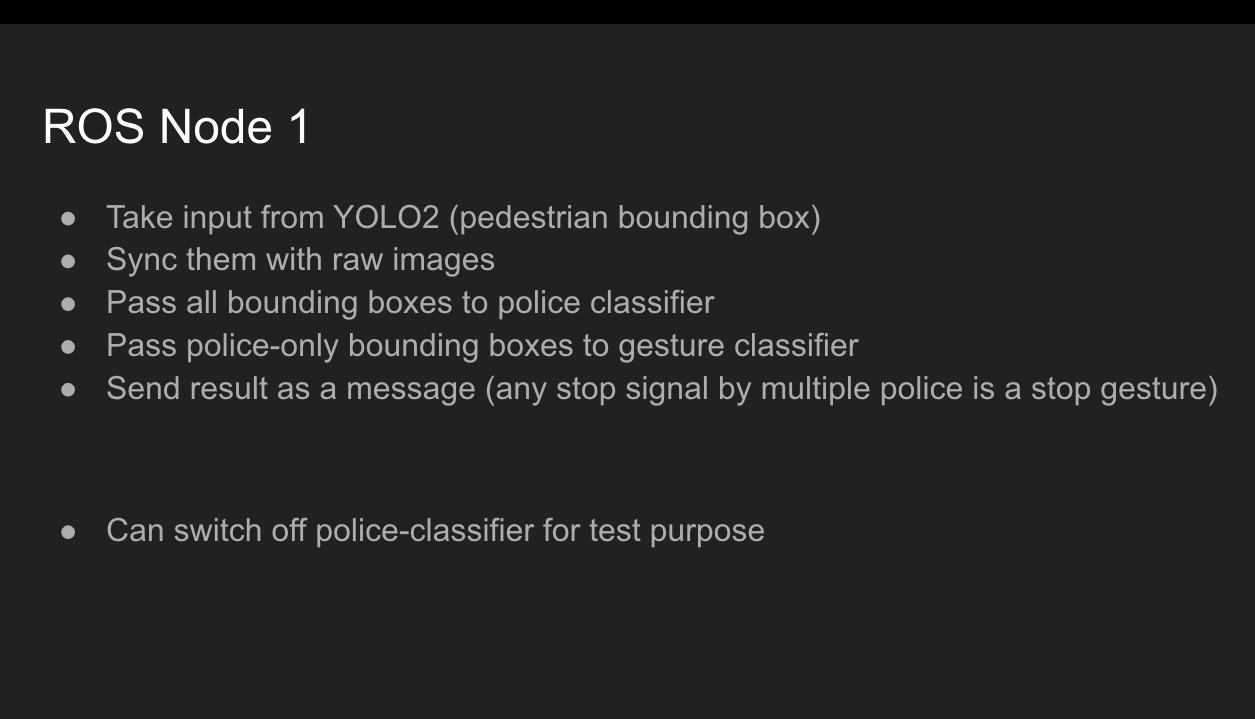

The last step was to write a ROS node to deploy the model in Autoware. The node subscribes to the output topic of the pedestrian detection module in Autoware (still YOLO2) and publishes the recognition results to the /police_gesture topic.

The Outcomes Overview

1.CAN-Bus Hacking Group

- Coffee-Car chassis: the vehicle can be controlled to follow a specific trajectory, carry out the scheduled commands and handle obstacles along the way (fully self-driving). Basic self-driving for the Coffee-Car was achieved.
- Honda Civic: the outcomes are similar to those of the Coffee-Car (not fully self-driving), but control of the Civic is more stable, with a better ride experience.
- Control code based on ROS, GitHub repo: https://github.com/moveithackathon-canhack/civic-hack
- CAN bus hack blog: https://github.com/moveithackathon-canhack/civic-hack/blob/master/BLOG.md

2.Software-Autoware Group

- The 3D high-precision map of the test site was built successfully (Autoware localizes against the point cloud map).
- After the raw point cloud data was collected, waypoint path planning was done for trajectory tracking of the vehicle.
- Joint calibration of the camera and LiDAR.
- Application of Autoware to the self-driving of the Coffee-Car.
- Wrote the driver for the MindVision camera: https://github.com/moveithackathon-canhack/mv-camera-ros

3.Gesture Recognition Group

Completed the collection and preprocessing of two standard datasets for traffic command gesture recognition. Trained deep learning models for gesture recognition and gesture detection. The models may not be optimal because of the time limit: the accuracy rates for gesture recognition and detection are 91% and 93% respectively, and we’ll keep updating them.

Two datasets:

1) Traffic Police/Pedestrian Dataset: a total of 10,000 traffic police/pedestrian images were collected and labeled.

2) Chinese Traffic Gesture Dataset (CTGD): 180 sets of traffic command gesture images collected from six camera stands (six angles), close to 170,000 labeled images in total, covering four traffic command gestures (Stop, Go, Turn Right, Stop Aside). It is currently the only public dataset of this kind in China.

Code, models, and datasets are all publicly available on GitHub: https://github.com/moveithackathon-canhack/traffic-gesture-recognition

The Next Move-it

Everyone was dedicated and passionate during the five days. We worked together, ate together, drank beer and coffee, chatted casually, stayed up late, and got to know each other’s cultures and awesome skills.

Now the March hackathon has drawn to a satisfactory end, but the hacking goes on. We’ll continue our work and collaborate remotely online to keep driving innovation in self-driving. The spirit of hacking and open source will bind us tightly together. We can always spark a great idea and jump on a plane or train to gather and create real-world solutions.

What will the next event be? A city hacking project in China? A self-driving race challenge in the US? A self-driving test run in an abandoned city in Ukraine? Or just a meetup with beer and wine for self-driving engineers and hobbyists worldwide? Well, we’ll wait and see.

Follow us on

Twitter: https://twitter.com/thepixmoving

Medium: PIX Moving

Move-it Slack: https://moveithackathon.slack.com

DIYRobocars Slack: https://donkeycar.slack.com