Whiz, the latest vacuum from SoftBank Robotics, isn’t nearly as charming as Pepper or its tiny counterpart NAO, but it’s certainly more practical. Today in Las Vegas at the ISSA trade show for the Worldwide Cleaning Industry Association, SoftBank Robotics America (SBRA) and ICE Robotics introduced Whiz to the North American… Continue reading SoftBank Robotics launches a $499 monthly robotic vacuum service

Tag: Autonomous

Record 136 NVIDIA GPU-Accelerated Supercomputers Feature in TOP500 Ranking

The new wave of supercomputers is largely GPU accelerated, the latest TOP500 list of the world’s fastest systems shows. Of the 102 new supercomputers to join the closely watched ranking, 42 use NVIDIA GPU accelerators — including AiMOS, the most powerful addition to the list, which was released this week. Coming in at No. 24,… Continue reading Record 136 NVIDIA GPU-Accelerated Supercomputers Feature in TOP500 Ranking

KARMA GROUP UNVEILS GROWING BUSINESS STRATEGY AT AUTOMOBILITY LA, DEBUTS NEW PRODUCTS, AND TECH-FOCUSED ECOSYSTEM TO DRIVE ITS CONTINUED GROWTH

Nov 19, 2019 Karma Group Assigns Five Dedicated Business Units to Grow Business and Brand Value Beyond Retail Sales Alone Performance Oriented Revero GTS On Sale Q1 2020; SC2 Concept Previews Future Karma Design Direction Karma Demonstrates EREV Conversion Opportunities in Larger Vehicle Platforms LOS ANGELES (Nov. 19, 2019) – Karma… Continue reading KARMA GROUP UNVEILS GROWING BUSINESS STRATEGY AT AUTOMOBILITY LA, DEBUTS NEW PRODUCTS, AND TECH-FOCUSED ECOSYSTEM TO DRIVE ITS CONTINUED GROWTH

KARMA AUTOMOTIVE UNVEILS TECH-FOCUSED SC2 CONCEPT AT AUTOMOBILITY LA AND LOS ANGELES AUTO SHOW 2019

Nov 19, 2019 Karma’s SC2 Concept Car Previews Future Design Direction and Company’s Emergence as Technology Incubator SC2 to Deliver 1,100 HP and 0-60 mph in less than 1.9 Seconds Karma’s Drive and Play® Technology Integrates Racing Simulation and Gaming Experience into SC2 IRVINE, Calif. (November 19, 2019) – Karma… Continue reading KARMA AUTOMOTIVE UNVEILS TECH-FOCUSED SC2 CONCEPT AT AUTOMOBILITY LA AND LOS ANGELES AUTO SHOW 2019

Lyft: Self-Driving Research in Review: ICCV 2019

By the Level 5 Research Team: Peter Ondruska, Luca Del Pero, Qi Dong, Anastasia Dubrovina, and Guido Zuidhof. ICCV, along with CVPR, is one of the main international computer vision conferences. It takes place every two years, with this year’s edition held in Seoul, South Korea. Out of the 1076 papers presented, we summarized the most relevant… Continue reading Lyft: Self-Driving Research in Review: ICCV 2019

Hella dials into e-mobility, hives off relay business to China’s Hongfa

Hella to sell relay business to China’s Hongfa group. In sync with the buzzing electric mobility and autonomous driving trends, global automotive supplier Hella is hiving off its relay business. Hella will now sell the business to the Chinese relay manufacturer Hongfa for 10 million euro, and the transaction is set to close by year-end.… Continue reading Hella dials into e-mobility, hives off relay business to China’s Hongfa

False Positive

Human drivers confront and handle an incredible variety of situations and scenarios (terrain, roadway types, traffic conditions, weather conditions) that autonomous vehicle technology must navigate both safely and efficiently. These are edge cases, and they occur with surprising frequency. In order to achieve advanced levels of autonomy or breakthrough ADAS features, these edge cases must be addressed. In this series, we explore common, real-world scenarios that are difficult for today’s conventional perception solutions to handle reliably. We’ll then describe how AEye’s software-definable iDAR™ (Intelligent Detection and Ranging) successfully perceives and responds to these challenges, improving overall safety.


Challenge: A Balloon Floating Across the Road

A vehicle equipped with an advanced driver assistance system (ADAS) is traveling down a residential block on a sunny afternoon when the air is relatively still. A balloon from a child’s birthday party comes floating across the road. It drifts down and ends up suspended almost motionless in the lane ahead. If the driver of an ADAS vehicle isn’t paying attention, this is a dangerous situation. The vehicle’s perception system must make a series of quick assessments to avoid causing an accident. Not only must it detect the object in front of it, it must also classify it to determine whether it’s a threat. The vehicle’s domain controller can then decide that the balloon is not a threat and drive through it.

How Current Solutions Fall Short

Today’s advanced driver assistance systems (ADAS) will experience great difficulty detecting the balloon or classifying it fast enough to react in the safest way possible. Typically, ADAS vehicle sensors are trained to avoid activating the brakes for every anomaly on the road because it is assumed that a human driver is paying attention. As a result, in many cases, they will allow the car to drive into the anomaly. In contrast, level 4 or 5 self-driving vehicles are biased toward avoiding collisions. In this scenario, they’ll either undertake evasive maneuvers or slam on the brakes, creating an unnecessary incident or causing an accident.

Camera. It is extremely difficult for a camera to distinguish between soft and hard objects; everything is just pixels. In this case, perception training is practically impossible because in the real world, soft objects can appear in an almost infinite variety of shapes, forms, and colors, possibly even taking on human-like shapes in poor lighting conditions. Camera detection performance depends entirely on proper training across all possible permutations of a soft target’s appearance, combined with favorable conditions. Sun glare, shade, or nighttime operation will negatively impact performance.

Radar. An object’s material is of vital significance to radar. A soft object containing no metal or having no reflectivity is unable to reflect radio waves, so radar will miss the balloon altogether. Additionally, radar is typically trained to disregard stationary objects because otherwise it would be detecting thousands of objects as the vehicle advances through the environment. So, even if the balloon is made from reflective metallic plastic, because it’s floating in the air, there might not be enough movement for the radar to detect it. Therefore, radar will provide little, if any, value in correctly classifying the balloon and assessing it as a potential threat.
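
The stationary-object filtering described above can be illustrated with a minimal sketch. This is not any vendor's radar pipeline: the `RadarReturn` type, the `moving_targets` function, and the 0.5 m/s threshold are hypothetical, and the geometry is simplified to targets straight ahead of the vehicle.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RadarReturn:
    range_m: float
    # Radial velocity relative to the sensor; negative means the target is closing.
    radial_velocity_mps: float


def moving_targets(
    returns: List[RadarReturn],
    ego_speed_mps: float,
    min_ground_speed_mps: float = 0.5,  # assumed threshold, for illustration only
) -> List[RadarReturn]:
    """Keep only returns whose estimated ground speed exceeds the threshold.

    Assumes every target lies straight ahead, so ground speed along the line
    of sight is simply the relative radial velocity plus the ego speed.
    """
    kept = []
    for r in returns:
        ground_speed = r.radial_velocity_mps + ego_speed_mps
        if abs(ground_speed) >= min_ground_speed_mps:
            kept.append(r)
    return kept
```

With the vehicle moving at 10 m/s, a nearly motionless balloon closes at roughly 10 m/s, so its estimated ground speed is near zero and a filter of this kind would discard it along with the rest of the stationary clutter.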

Camera + Radar. Together, camera and radar would be unable to assess the scenario and react correctly every time. The camera would try to detect the balloon. However, there would be many scenarios where the camera will identify it incorrectly or not at all depending on lighting and perception training. The camera will frequently be confused—it might identify the balloon as a pedestrian or something else for which the vehicle needs to brake. And radar will be unable to eliminate the camera confusion because it typically won’t detect the balloon at all.

LiDAR. Unlike radar and camera, LiDAR is much more resilient to lighting conditions and to an object’s material. LiDAR would be able to precisely determine the balloon’s 3D position in space to centimeter-level accuracy. However, conventional low-density scanning LiDAR falls short when it comes to providing sufficient data fast enough for classification and path planning. Typically, LiDAR detection algorithms require many laser points on an object over several frames to register as a valid object. A low-density LiDAR that passively scans the surroundings horizontally can struggle to achieve the required number of detections when it comes to soft, shape-shifting objects like balloons.
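
The "many points over several frames" requirement can be sketched as a simple confirmation rule. This is an illustrative assumption, not a documented algorithm from any LiDAR vendor: the function name `confirm_object` and the default thresholds are hypothetical.

```python
from typing import Optional, Sequence


def confirm_object(
    points_per_frame: Sequence[int],
    min_points: int = 5,   # assumed per-frame point threshold
    min_frames: int = 3,   # assumed number of consecutive qualifying frames
) -> Optional[int]:
    """Return the index of the frame at which the object is confirmed.

    An object is confirmed once `min_frames` consecutive frames each contain
    at least `min_points` laser returns on it. Returns None if that never
    happens within the given sequence.
    """
    consecutive = 0
    for index, count in enumerate(points_per_frame):
        consecutive = consecutive + 1 if count >= min_points else 0
        if consecutive >= min_frames:
            return index
    return None
```

Under a rule like this, a sparse or shape-shifting target that yields only one or two returns per frame is never confirmed, which is the shortfall the paragraph above describes.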

Successfully Resolving the Challenge with iDAR

In this scenario, iDAR excels because it can gather sufficient data at the sensor level for classifying the balloon and determining its distance, shape, and velocity before any data is sent to the domain controller. This is possible because as soon as there’s a single LiDAR detection of the balloon, iDAR will immediately flag it with a Dynamic Region of Interest (ROI). At that point, the LiDAR will generate a dense pattern of laser pulses in the area, interrogating the balloon for additional information. All this takes place while iDAR also continues to track the background environment to ensure it never misses new objects.
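
One way to picture a dense ROI coexisting with continued background scanning is as a split of a fixed per-frame shot budget. The sketch below is a hypothetical model, not AEye's scheduler: `allocate_shots`, its parameters, and the idea of a fixed budget are assumptions made for illustration.

```python
from typing import Tuple


def allocate_shots(
    total_shots: float,
    roi_fraction_of_fov: float,
    roi_density_gain: float,
) -> Tuple[float, float]:
    """Split a per-frame shot budget between an ROI and the background.

    The ROI covers `roi_fraction_of_fov` of the field of view and is sampled
    `roi_density_gain` times more densely than the background.
    Returns (roi_shots, background_shots).
    """
    if not 0.0 < roi_fraction_of_fov < 1.0:
        raise ValueError("roi_fraction_of_fov must be between 0 and 1")
    if roi_density_gain < 1.0:
        raise ValueError("roi_density_gain must be at least 1")

    background_fraction = 1.0 - roi_fraction_of_fov
    # Background density d satisfies: d * background + gain * d * roi = total.
    density = total_shots / (background_fraction + roi_density_gain * roi_fraction_of_fov)
    roi_shots = roi_density_gain * density * roi_fraction_of_fov
    background_shots = density * background_fraction
    return roi_shots, background_shots
```

In this model, concentrating ten times the density on 2% of the field of view costs the background only a modest share of the budget, which is consistent with the claim that the background can still be tracked while the ROI is interrogated.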

Software Components and Data Types

Computer Vision. iDAR is designed with computer vision that creates a smarter, more focused LiDAR point cloud. In order to effectively “see” the balloon, iDAR combines the camera’s 2D pixels with the LiDAR’s 3D voxels to create Dynamic Vixels. This combination helps iDAR refine the LiDAR point cloud on the balloon, effectively eliminating all the irrelevant points.
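
The general idea of pairing a 3D LiDAR point with a 2D camera pixel can be sketched with a standard pinhole projection. This is a generic fusion example, not AEye's Dynamic Vixel format: `FusedPoint`, `fuse_point`, and the intrinsics `fx`, `fy`, `cx`, `cy` are hypothetical names, and the sketch assumes the LiDAR point is already expressed in the camera frame with z pointing forward.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Color = Tuple[int, int, int]


@dataclass
class FusedPoint:
    xyz: Tuple[float, float, float]  # 3D position from the LiDAR
    rgb: Color                       # color sampled from the camera image


def fuse_point(
    xyz: Tuple[float, float, float],
    image: Sequence[Sequence[Color]],  # image[row][col], row-major
    fx: float, fy: float, cx: float, cy: float,
) -> Optional[FusedPoint]:
    """Project a 3D point into the image and attach the pixel color.

    Returns None if the point is behind the camera or projects outside
    the image bounds.
    """
    x, y, z = xyz
    if z <= 0:
        return None
    col = int(round(fx * x / z + cx))
    row = int(round(fy * y / z + cy))
    if 0 <= row < len(image) and 0 <= col < len(image[0]):
        return FusedPoint(xyz=xyz, rgb=image[row][col])
    return None
```

Once each point carries both position and color, points whose color or location does not match the object of interest can be filtered out, which is one plausible reading of "refining" the point cloud.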

Cueing. For safety purposes, it’s essential to classify soft targets at range because their identities determine the vehicle’s specific and immediate response. To generate a dataset that is rich enough to apply perception algorithms for classification, as soon as LiDAR detects an object, it will cue the camera for deeper information about its color, size, and shape. The perception system will then review the pixels, running algorithms to define the object’s possible identities. To gain additional insights, the camera then cues the LiDAR, which allocates more shots to collect additional data.

Feedback Loops. Intelligent iDAR sensors are capable of cueing each other for additional data, and they are also capable of cueing themselves. If the camera lacks data (due to light conditions, etc.), the LiDAR will generate a feedback loop that tells the sensor to “paint” the balloon with a dense pattern of laser pulses. This enables the LiDAR to gather enough data about the target’s size, speed, and direction to effectively aid the perception system in classifying the object without the benefit of camera data.

The Value of AEye’s iDAR

LiDAR sensors embedded with AI for intelligent perception are very different from those that passively collect data. When iDAR registers a single detection of a soft target in the road, its priority is classification. To avoid false positives, iDAR will schedule a series of LiDAR shots in that area to determine whether it’s a balloon or something else, like a cement bag, tumbleweed, or a pedestrian. iDAR can flexibly adjust point cloud density on and around objects of interest and then use classification algorithms at the edge of the network. This ensures only the most important data is sent to the domain controller for optimal path planning.


Cargo Protruding from Vehicle

Human drivers confront and handle an incredible variety of situations and scenarios (terrain, roadway types, traffic conditions, weather conditions) that autonomous vehicle technology must navigate both safely and efficiently. These are edge cases, and they occur with surprising frequency. In order to achieve advanced levels of autonomy or breakthrough ADAS features, these edge cases must be addressed. In this series, we explore common, real-world scenarios that are difficult for today’s conventional perception solutions to handle reliably. We’ll then describe how AEye’s software-definable iDAR™ (Intelligent Detection and Ranging) successfully perceives and responds to these challenges, improving overall safety.


Challenge: Cargo Protruding from Vehicle

A vehicle equipped with an advanced driver assistance system (ADAS) is driving down a road at 20 mph. Directly ahead, a large pick-up truck stops abruptly. Its bed is filled with lumber, much of which is jutting out the back and into the lane. If the driver of an ADAS vehicle isn’t paying attention, this is a potentially fatal scenario. As the distance between the two vehicles quickly shrinks, the ADAS vehicle’s domain controller must make a series of critical assessments to identify the object and avoid a collision. However, this is dependent on its perception system’s ability to detect the lumber. Numerous factors can negatively impact whether or not a detection takes place, including adverse lighting, weather, and road conditions.

How Current Solutions Fall Short

Today’s advanced driver assistance systems (ADAS) will experience great difficulty recognizing this threat or reacting appropriately. Depending on their sensor configuration and perception training, many will fail to register the cargo before it’s too late.

Camera. In scenarios where depth perception is important, cameras run into challenges. By their nature, camera images are two dimensional. To an untrained camera, cargo sticking out of a truck bed will look like small, elongated rectangles floating above the roadway. In order to interpret this 2D image in 3D, the perception system must be trained—something that is difficult to do given the innumerable permutations of cargo shapes. The scenario becomes even more challenging depending on time of day. In the afternoon, sunlight reflecting off the truck bed or directly into the camera can create blind spots, obscuring the cargo. At night, there may not be enough dynamic range in the camera image for the perception system to successfully analyze the scene. If the vehicle’s headlights are in low beam mode, most of the light will pass underneath the lumber.

Radar. Radar detection is quite limited in scenarios where objects are small and stationary. Typically, radar perception systems disregard stationary objects because otherwise, there would be too many objects for the radar to track. In a scenario featuring narrow, non-reflective objects that are surrounded by reflections from the metal truck bed and parked cars, the radar would have great difficulty detecting the lumber at all.

Camera + Radar. Because of the deficiencies explained above, in most cases, a system that combines radar with a camera would be unable to detect the lumber or react quickly. The perception system would need to be trained on an almost infinite variety of small stationary objects associated with all manner of vehicles in all possible light conditions. For radar, many objects are simply less capable of reflecting radio waves. As a result, radar will likely miss or disregard small, non-reflective stationary objects. In addition, radar would be incapable of compensating for the camera’s lack of depth perception.

LiDAR. Conventional LiDAR doesn’t struggle with depth perception. And its performance isn’t significantly impacted by light conditions, nor by an object’s material and reflectivity. However, conventional LiDAR systems are limited because their scan patterns are fixed, as are their Field-of-View, sampling density, and laser shot schedule. In this scenario, as the LiDAR passively scans the environment, its laser points will only hit the small ends of the lumber a few times. Typically, LiDAR perception systems require a minimum of five detections to register an object. Today’s 4-, 16-, and 32-channel systems would likely not collect enough detections early enough to determine that the object was present and a threat.
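
A rough geometric estimate shows why a fixed scan pattern lands so few points on a small target. The sketch below is a back-of-the-envelope model under stated assumptions: a uniform angular grid, a flat rectangular target facing the sensor, and hypothetical values for target size and angular resolution. The function name `expected_points_on_target` is illustrative.

```python
import math


def expected_points_on_target(
    width_m: float,
    height_m: float,
    range_m: float,
    h_res_deg: float,
    v_res_deg: float,
) -> float:
    """Estimate how many laser points per frame fall on a rectangular target.

    The target's angular width and height are divided by the scanner's
    horizontal and vertical angular resolution, respectively.
    """
    angular_width_deg = math.degrees(2 * math.atan(width_m / (2 * range_m)))
    angular_height_deg = math.degrees(2 * math.atan(height_m / (2 * range_m)))
    return (angular_width_deg / h_res_deg) * (angular_height_deg / v_res_deg)


# Hypothetical case: a 0.3 m x 0.1 m bundle of lumber ends at 20 m, scanned
# with 0.2 degree horizontal and 1.0 degree vertical resolution.
points_at_20_m = expected_points_on_target(0.3, 0.1, 20.0, 0.2, 1.0)
```

With these assumed numbers the estimate is on the order of one point per frame, well short of a five-detection threshold, and the count grows roughly fourfold each time the range halves. That is why a fixed pattern tends to accumulate enough detections only once the vehicle is already close.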

Successfully Resolving the Challenge with iDAR

Accurately measuring distance is crucial to solving this challenge. A single LiDAR detection will cause iDAR to immediately flag the cargo as a potential threat. At that point, a quick series of LiDAR shots will be scheduled directly targeting the cargo and the area around it. Dynamically changing both LiDAR’s temporal and spatial sampling density, iDAR can comprehensively interrogate the cargo to gain critical information, such as its position in space and distance ahead. Only the most useful and actionable data is sent to the domain controller for planning the safest response.

Software Components

Computer Vision. iDAR combines 2D camera pixels with 3D LiDAR voxels to create Dynamic Vixels. This data type helps the system’s AI refine the LiDAR point cloud on and around the cargo, effectively eliminating all the irrelevant points and creating information from discrete data.

Cueing. As soon as iDAR registers a single detection of the cargo, the sensor flags the region where cargo appears and cues the camera for deeper real-time analysis about its color, shape, etc. If light conditions are favorable, the camera’s AI reviews the pixels to see if there are distinct differences in that region. If there are, it will send detailed data back to the LiDAR. This will cue the LiDAR to focus a Dynamic Region of Interest (ROI) on the cargo. If the camera lacks data, the LiDAR will cue itself to increase the point density on and around the detected object creating an ROI.
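
The cueing flow in the paragraph above can be summarized as a small decision function. This is a simplified reading of the prose, not AEye's software: the function name `plan_cue`, the string labels, and the use of `None` to mean "the camera lacks data" are all hypothetical.

```python
from typing import Optional


def plan_cue(lidar_detected: bool, camera_detail: Optional[dict]) -> str:
    """Decide the next sensing action after a LiDAR frame.

    lidar_detected: whether the LiDAR registered at least one detection.
    camera_detail:  detail returned by the camera for the flagged region,
                    or None if the camera lacks usable data (for example,
                    because of poor lighting).
    """
    if not lidar_detected:
        # Nothing flagged: keep scanning the background.
        return "background_scan"
    if camera_detail is not None:
        # The camera found distinct detail and cues the LiDAR to focus an ROI.
        return "roi_cued_by_camera"
    # The camera has nothing useful: the LiDAR cues itself to densify the region.
    return "roi_self_cued"
```

Both detection branches end in a Dynamic Region of Interest; the only difference is which sensor initiates it.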

Feedback Loops. A feedback loop is triggered when an algorithm needs additional data from sensors. In this scenario, a feedback loop will be triggered between the camera and the LiDAR. The camera can cue the LiDAR, and the LiDAR can cue additional interrogation points, or a Dynamic Region of Interest, to determine the cargo’s location, size, and true velocity. Once enough data has been gathered, it will be sent to the domain controller so that it can decide whether to apply the brakes or swerve to avoid a collision.

The Value of AEye’s iDAR

LiDAR sensors embedded with AI for intelligent perception are very different from those that passively collect data. As soon as the perception system registers a single valid LiDAR detection of an object extending into the road, iDAR responds intelligently. The LiDAR instantly modifies its scan pattern, increasing laser shots to cover the cargo in a dense pattern of laser pulses. Camera data is used to refine this information. Once the cargo has been classified, and its position in space and distance ahead determined, the domain controller can understand that the cargo poses a threat. At that point, it plans the safest response.


Veoneer Joins Autonomous Vehicle Computing Consortium to Accelerate Development of Autonomous Vehicles

STOCKHOLM, Nov. 19, 2019 /PRNewswire/ — Veoneer, Inc. (NYSE: VNE and SSE: VNE SDB), the world’s largest pure-play company focused on Advanced Driver Assistance Systems (ADAS) and Automated Driving (AD), has joined the Autonomous Vehicle Computing Consortium (AVCC) to speed autonomous driving vehicle development. Veoneer joins leading OEMs, Tier 1 suppliers and semiconductor companies, such… Continue reading Veoneer Joins Autonomous Vehicle Computing Consortium to Accelerate Development of Autonomous Vehicles

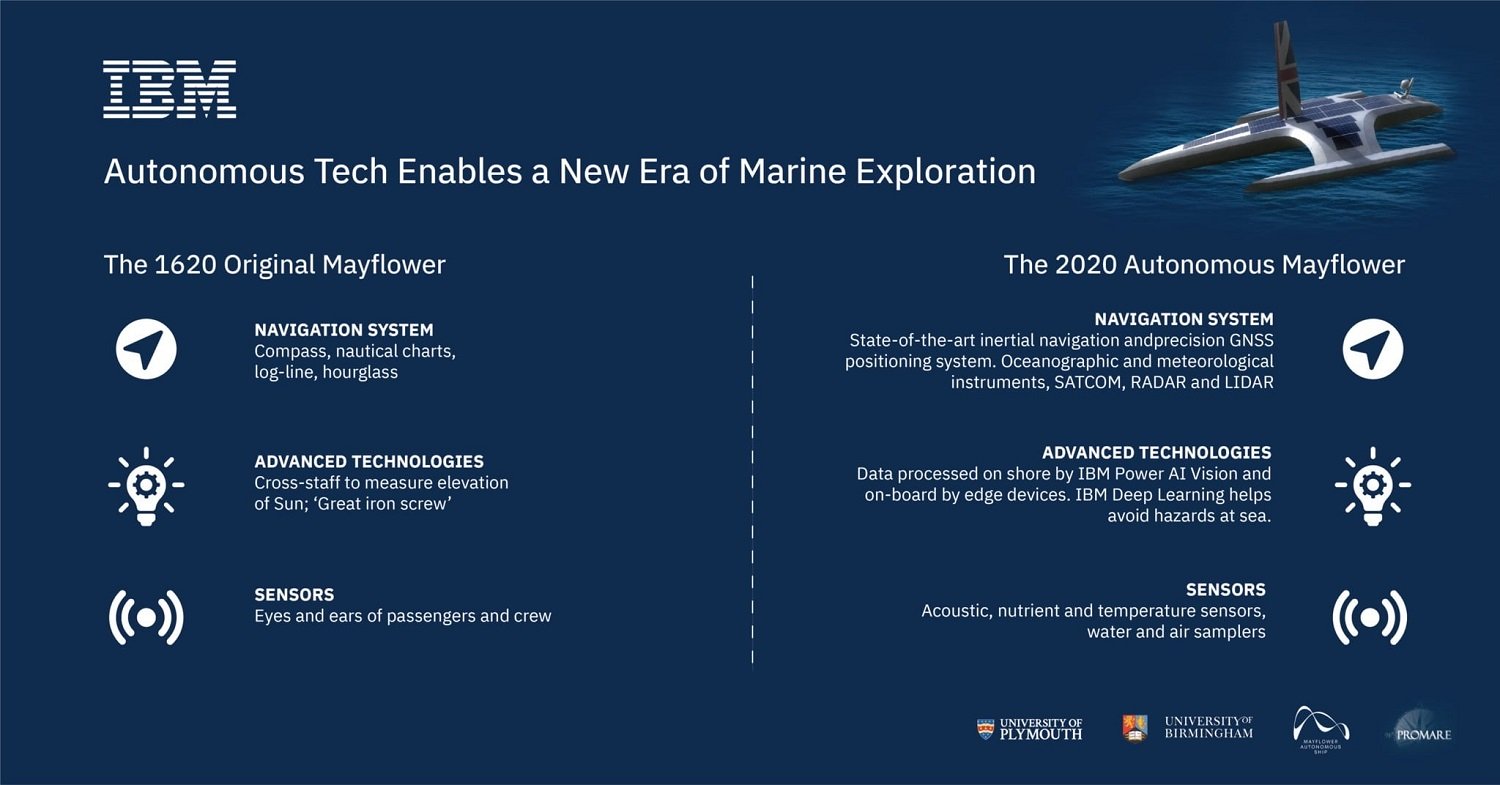

The age of autonomous exploration

When the original Mayflower left one Plymouth to find another, it had 130 people on board. The next will have none. A romantic could conclude that America was founded on exploration. One could draw a line from the Moon landing back through westward expansion and ultimately the landing of the Mayflower at Plymouth Rock. The… Continue reading The age of autonomous exploration