A pair of massive Boston-area investments from the Japanese SoftBank Group’s $100 billion Vision Fund, a moonshot power storage spinout from Alphabet’s X labs, and some local robotics moves are found in this week’s Boston technology news. —Underscore VC, a Boston-based venture capital firm focused on early-stage tech startups, is capping off its second fund… Continue reading Boston Tech Watch: Underscore VC, Piaggio, SoftBank & Sea Machines

Tag: Autonomous

Future Tesla Autopilot chips may come from Samsung self-driving push

2017 Tesla Model S testing at Consumer Reports

The race to build self-driving cars is heating up, with Google (Waymo), Tesla, Uber, Lyft, Ford, GM (Cruise), and others all vying to build the first reliable self-driving system.

Now add Korean electronics giant Samsung to that list—with a twist. Its first client could be one of the major competitors in the business, Tesla.

Tesla once had a partner in developing self-driving software, Israel's Mobileye, when it first launched its Autopilot system to great fanfare in 2014.


After a widely reported fatal accident in Florida tied to the company's original Version 1 Autopilot system, which used the Mobileye hardware, Tesla has been on its own to develop the next-generation Autopilot 2 hardware.

In October, the company introduced new software that enabled full on-ramp to off-ramp self-driving in the system it calls Navigate on Autopilot, on existing Autopilot chips. CEO Elon Musk has since tweeted that with the upcoming faster chips, the system will be able to handle commuting from home to work without human intervention.

Industry sources told the International Business Times (IBT) that Samsung has been on a hiring spree for electronics engineers with automotive experience.


Musk announced in October that the company would roll out new, faster chips in the spring that will enable the company's long-promised Full Self-Driving Mode features, which many customers have pre-paid for. He tweeted that the new chip will be 50 to 200 times faster than the current hardware.

IBT sources say that Samsung has already begun assembling the chips expected to be rolled out to the Model 3 in March.

Central to Musk's plan to have Navigate on Autopilot work in more situations and on surface streets is having more drivers use the system and feed driving data back to Tesla's artificial intelligence servers. If a new chip is also on the horizon, however, more data may not be enough.


Samsung has denied the rumors that it is forming a new self-driving car division.

The insiders noted that Samsung's practice is to line up a leading customer before launching new business lines.

With the Consumer Electronics Show on the horizon in Las Vegas early next month, it wouldn't be surprising to see the electronics giant introduce such a system there.

Green Car Reports reached out to Tesla for comment on this story, but had not heard back before publication.

AEye Introduces Advanced Mobility Product for the Autonomous Vehicle Market

The AE110 Brings Together Agile Sensors, Artificial Intelligence, and Software Definability to Capture Better Information with Less Power, Raising the Bar on Performance and Safety


Pleasanton, CA – December 20, 2018 – AEye, a world leader in artificial perception systems and the developer of iDAR™, today announced the AE110, its most advanced product for the automotive mobility market. Built on the world’s most extensive solid-state LiDAR patent portfolio (with over 1000 claims filed), the AE110 features the industry’s only software-definable LiDAR, creating an open platform for perception innovation that lets software engineers optimize data collection to best meet their needs.

A New Kind of Perception

The AE110 is based on iDAR, a new form of data collection that emulates how the visual cortex pre-processes and customizes information sent to the brain. It does this by fusing 1550 nanometer (nm), solid-state agile MOEMS LiDAR, a low-light HD camera, and embedded AI to intelligently capture data at the sensor level. iDAR integrates 2D camera pixels and 3D LiDAR voxels at the point of data acquisition to create “true color” Dynamic Vixels – a new real-time sensor data type that delivers more accurate, longer range and more intelligent information faster to the car’s “brain”, or its path-planning system.

The product has two major architectural advantages: the camera and LiDAR are fused and boresighted, and it’s the only LiDAR system that’s entirely software-defined. As a result, the AE110 delivers high-quality information faster to path-planning systems, using significantly less power. Further, its straightforward API and powerful SDK transform iDAR into a unique open system for perception innovation, allowing perception engineers to easily customize and dynamically control how scenes are interrogated. In addition, the product is under ASIL-B functional safety certification, ensuring it meets the automotive industry’s stringent safety standards.

“The AE110 sets a new bar for what perception systems are capable of,” said Aravind Ratnam, VP of product management at AEye. “By using an agile, software-definable LiDAR, the AE110 is able to collect 4-8 times the information of a fixed pattern LiDAR, using an eighth of the points. If a child walks into a scene, we can revisit that image within microseconds to help the vehicle take evasive action. Efficiency, speed and accuracy are key to perception, and the AE110 delivers all three at unprecedented levels at an extremely attractive price point.”

Key features of the AE110 include:

Software-definable LiDAR with high resolution RGB (red, green & blue) camera mechanically fused to deliver both true color and geometry as Dynamic Vixels

High resolution RGB camera with high speed interface

Onboard computer vision and embedded feedback loops for enhanced machine learning

Designed to meet ISO 26262 functional safety requirements; eye safe in all modes

Automotive SWaP optimized with documented field and environmental testing

Software Development Kit

Automotive deployment optimized with minimal crosstalk, utilizing four layers of interference mitigation and anti-spoofing technology

Enhanced adverse weather capabilities

Performance

The AE110’s unique architecture provides for the highest performance in resolution, range and refresh rate per dollar, while delivering unparalleled power savings. For example, its pseudo-random beam distribution search option makes the system eight times more efficient than fixed pattern LiDARs. The AE110 also achieves 16 times greater coverage of the entire field-of-view at 10 times the frame rate (up to 100 Hz) due to its ability to support multiple regions of interest for both LiDAR and camera.

Conventional LiDAR systems are designed to provide basic search capabilities – passively capturing limited data and treating it all equally without distinguishing critical threats or objects in the scene. In contrast, AEye’s iDAR performs multi-modal intelligent search which can then acquire, pre-classify, and track multiple objects quickly. It’s also designed to allow the system to pre-classify the objects faster than the frame-rate by allowing for a much more rapid object revisit rate.

“This is a breakthrough for perception systems,” said Allan Steinhardt, Chief Scientist at AEye and former Chief Scientist at DARPA. “iDAR can do what has been previously unachievable. Combining industry leading range, resolution, scan rate, and intelligence – we are able to capture critical data about objects such as acceleration and velocity vectors within the same frame. Our approach has superior characteristics to simple FMCW not only in reliability of acquiring closing velocity, but also as the most dangerous objects enter the scene from the side where FMCW alone is ineffective.”

Setting New Benchmarks

Recently, AEye announced that its iDAR system set a new benchmark for both range and scan rate performance for automotive-grade LiDAR. In a test monitored and validated by VSI Labs, the system detected and tracked a truck at 1,000 meters (or one kilometer). AEye also achieved a scan rate of 100 Hz, setting a new speed record for the industry. This comes on the heels of the close of AEye’s Series B funding, led by Taiwania Capital, bringing the company’s total funding to over $61 million.
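For a sense of scale, the two benchmark figures above can be turned into timings with basic physics. The sketch below is illustrative arithmetic only, not AEye code or test data: the function names are hypothetical, and it assumes a single light pulse travelling to the target and back at the vacuum speed of light.

```python
# Illustrative arithmetic only; the function names are hypothetical
# and nothing here is taken from AEye's software or test results.
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by definition of the metre


def round_trip_time_s(range_m: float) -> float:
    """Time for a light pulse to reach a target at range_m and return."""
    return 2 * range_m / SPEED_OF_LIGHT_M_PER_S


def frame_period_s(scan_rate_hz: float) -> float:
    """Duration of one full frame at the given scan rate."""
    return 1 / scan_rate_hz


if __name__ == "__main__":
    tof = round_trip_time_s(1_000)   # truck tracked at 1,000 meters
    period = frame_period_s(100)     # 100 Hz scan rate
    print(f"round trip at 1,000 m: {tof * 1e6:.2f} microseconds")
    print(f"frame period at 100 Hz: {period * 1e3:.1f} milliseconds")
```

Under these assumptions, a return from 1,000 meters takes roughly 6.67 microseconds, while a 100 Hz scan rate leaves 10 milliseconds per frame.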

The AE110 will be available to automotive and mobility OEMs and Tier 1s in spring 2019. For more information about AEye, the AE110, and the company’s innovative approach to artificial perception, please visit CES Booth #2100 at the Westgate Convention Center in Las Vegas, January 8th – 11th, 2019. For a private car demo, please contact [email protected].

Media Contact:

AEye, Inc.

Jennifer Deitsch

[email protected]

925-400-4366


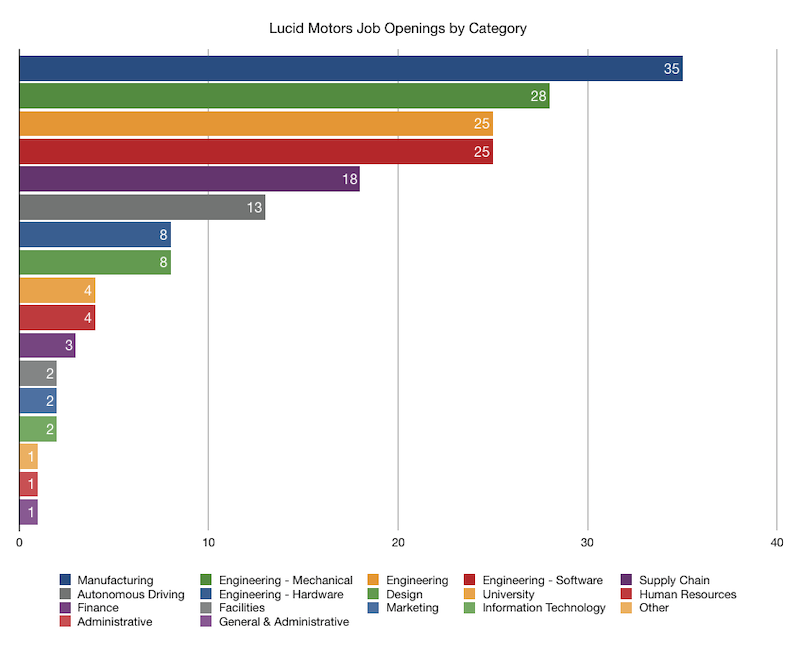

Tesla-rival Lucid Motors hiring is up 300% since Saudi investment – Thinknum Media

Last fall, California-based electric vehicle startup Lucid Motors ($LUCIDMOTORS) picked up a massive billion-dollar investment from the Public Investment Fund of Saudi Arabia. We reported on the deal last month, noting that Lucid had embarked on a massive hiring spree. At the time, Lucid had already doubled the number of openings at the company. As of… Continue reading Tesla-rival Lucid Motors hiring is up 300% since Saudi investment – Thinknum Media

‘Waymo’s not on our radar’ – Henrik Fisker on the future of premium shared mobility – Automotive World

At the tap of a button, your ride from the coffee shop to the airport has been confirmed – a ticker on the smartphone displays an approximate arrival time, and a pin highlights the exact pickup location. With little more than a whirr from its electric motors, a shuttle soon glides to the side of… Continue reading ‘Waymo’s not on our radar’ – Henrik Fisker on the future of premium shared mobility – Automotive World

Lyft is getting more serious about autonomous vehicle safety with new hire

Lyft today announced the hiring of John Maddox, founder of the American Center for Mobility and previous associate administrator of vehicle safety research at the US Department of Transportation, to lead its autonomous vehicle safety and compliance efforts. At Lyft, Maddox will be the company’s first senior director of autonomous safety and compliance. “I’ve dedicated… Continue reading Lyft is getting more serious about autonomous vehicle safety with new hire

Lyft Welcomes First Senior Director of AV Safety and Compliance

Lyft is on a mission to build the world’s best transportation ecosystem, and as part of that, our ongoing investment in self-driving is critical. That’s why we’re excited to announce that we’ve brought on a world-class executive to lead our AV safety efforts. John Maddox joins Lyft as Senior Director, Autonomous Safety and Compliance, where… Continue reading Lyft Welcomes First Senior Director of AV Safety and Compliance

BMW Group at the CES 2019 in Las Vegas. Virtual drive in the BMW Vision iNEXT.

Munich/Las Vegas. At this year’s Consumer Electronics Show (CES) in Las Vegas, the BMW Group will showcase the future of driving pleasure and the potential of digital connectivity in a variety of different ways. From January 8-11 2019, visitors will have their first chance to take a virtual drive in the BMW Vision iNEXT, accompanied… Continue reading BMW Group at the CES 2019 in Las Vegas. Virtual drive in the BMW Vision iNEXT.

First self-driving Uber returns since pedestrian death

Uber Technologies Inc., which halted its self-driving car program after the death of a pedestrian in March, will return one or two self-driving cars to public roads in Pittsburgh on Thursday, the company said.

Uber said it received the all-clear from the Pennsylvania Department of Transportation this week. The self-driving car program had been suspended while the National Transportation Safety Board investigated the pedestrian fatality in Tempe, Arizona, and while state officials reviewed Uber’s authorization.

This is the first time Uber is returning autonomous vehicles to public roads since the accident. In order to collect data, Uber had previously deployed some autonomous vehicles in manual mode, meaning that human drivers were operating them at all times. On Thursday, Uber will also deploy cars in San Francisco and Toronto in the manual, human-driven mode.

True autonomous testing will resume in Pittsburgh, but it will be more limited than before. Uber’s cars will drive at speeds of 25 miles per hour or below, the company said. Uber announced a number of other safety measures, including improvements to braking, operator training and driver monitoring.

“Over the past nine months, we’ve made safety core to everything we do,” said Eric Meyhofer, head of Uber’s Advanced Technologies Group.

Read or Share this story: https://www.detroitnews.com/story/business/autos/mobility/2018/12/20/first-self-driving-uber-returns-since-pedestrian-death/38773691/

Connected cars are the eyes of the (automotive) future

When you can’t see past that truck, through the fog or around the corner, connected cars will provide all the information we need. It’s becoming clearer every day: autonomous cars will necessarily be connected cars. Of course, it is within the industry’s reach to build a completely self-contained computer-controlled car equipped with the full bulk… Continue reading Connected cars are the eyes of the (automotive) future